THE MATHEMATICIAN YOU DON’T KNOW

March 19, 2019

Okay, there will be a test after you read this post. Here we go. Do you know these people?

- Beyoncé

- Jennifer Lopez

- Mariah Carey

- Lady Gaga

- Ariana Grande

- Katy Perry

- Miley Cyrus

- Karen Uhlenbeck

Don’t feel bad. I didn’t know either. This is Karen Uhlenbeck, the mathematician we do not know. For some reason we all (me included) know the “pop” stars by name, along with who their significant others are, their children, their latest hit single, who they recently “dumped,” where they vacationed, and so on. We know this. I would propose that the lady whose picture is shown below has contributed more to “humankind” than all the individuals listed above. Then again, that’s just me.

For the first time, one of the top prizes in mathematics has been given to a woman. I find this hard to believe because we all know that “girls” can’t do math. Your mamas told you that and you remembered it. (I suppose Dr. Uhlenbeck’s mom was doing her nails and forgot to mention that to her.)

This past Tuesday, the Norwegian Academy of Science and Letters announced that it has awarded this year’s Abel Prize, an award modeled on the Nobel Prizes, to Karen Uhlenbeck, an emeritus professor at the University of Texas at Austin. The award cites “the fundamental impact of her work on analysis, geometry and mathematical physics.” Uhlenbeck won for her foundational work in geometric analysis, which combines the technical power of analysis (a branch of math that extends and generalizes calculus) with the more conceptual areas of geometry and topology. The prize, worth six (6) million Norwegian kroner (approximately $700,000), was first awarded in 2003, and she is the first woman to receive it.

One of Dr. Uhlenbeck’s advances in essence described the complex shapes of soap films not in a bubble bath but in abstract, high-dimensional curved spaces. In later work, she helped put a rigorous mathematical underpinning to techniques widely used by physicists in quantum field theory to describe fundamental interactions between particles and forces. (How many think Beyoncé could do that?)

In the process, she helped pioneer a field known as geometric analysis and developed techniques now commonly used by many mathematicians. As a matter of fact, she is regarded as one of the founders of modern geometric analysis.

“She did things nobody thought about doing,” said Sun-Yung Alice Chang, a mathematician at Princeton University who served on the five-member prize committee, “and after she did, she laid the foundations for that branch of mathematics.”

An example of objects studied in geometric analysis is a minimal surface. Analogous to a geodesic, a curve that minimizes path length, a minimal surface minimizes area; think of a soap film, a minimal surface that minimizes energy. Analysis focuses on the differential equations governing variations of surface area, whereas geometry and topology focus on the minimal surface representing a solution to the equations. Geometric analysis weaves together both approaches, resulting in new insights.
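In symbols, here is a sketch for the simplest setting, assuming the surface is the graph z = u(x, y) of a smooth function over a planar region Ω. A geodesic makes the length functional L stationary, a minimal surface makes the area functional A stationary, and requiring A to be stationary yields the classical minimal surface equation:

```latex
\begin{aligned}
L(\gamma) &= \int_a^b \lvert \gamma'(t) \rvert \, dt, \\
A(u) &= \iint_{\Omega} \sqrt{1 + u_x^2 + u_y^2}\; dx\, dy, \\
0 &= (1 + u_y^2)\, u_{xx} - 2\, u_x u_y\, u_{xy} + (1 + u_x^2)\, u_{yy}.
\end{aligned}
```

The last line is the "analysis" side, a nonlinear partial differential equation; the surfaces that solve it are the "geometry" side. Geometrically, the equation says the mean curvature of the surface is zero everywhere.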

The field did not exist when Uhlenbeck began graduate school in the mid-1960s, but tantalizing results linking analysis and topology had begun to emerge. In the early 1980s, Uhlenbeck and her collaborators did ground-breaking work in minimal surfaces. They showed how to deal with singular points, that is, points where the minimal surface is no longer smooth or where the solution to the equations is not defined. They proved that there are only finitely many singular points and showed how to study them by expanding them into “bubbles.” As a technique, bubbling made a deep impact and is now a standard tool.

Born in 1942 to an engineer and an artist, Uhlenbeck is a mountain-loving hiker who learned to surf at the age of forty (40). As a child she was a voracious reader and “was interested in everything,” she said in an interview last year with Celebratio.org. “I was always tense, wanting to know what was going on and asking questions.”

She initially majored in physics as an undergraduate at the University of Michigan. But her impatience with lab work and a growing love for math led her to switch majors. She nevertheless retained a lifelong passion for physics, and centered much of her research on problems from that field. In physics, a gauge theory is a kind of field theory, formulated in the language of the geometry of fiber bundles; the simplest example is electromagnetism. One of the most important gauge theories from the 20th century is Yang-Mills theory, which underlies the standard model of elementary particle physics. Uhlenbeck and other mathematicians began to realize that the Yang-Mills equations have deep connections to problems in geometry and topology. By the early 1980s, she had laid the analytic foundations for mathematical investigation of the Yang-Mills equations.

Dr. Uhlenbeck, who lives in Princeton, N.J., learned that she won the prize on Sunday morning.

“When I came out of church, I noticed that I had a text message from Alice Chang that said, Would I please accept a call from Norway?” Dr. Uhlenbeck said. “When I got home, I called Norway back and they told me.”

Who said women can’t do math?

SMARTS

March 17, 2019

Who was the smartest person in the history of our species? Solomon, Albert Einstein, Jesus, Nikola Tesla, Isaac Newton, Leonardo da Vinci, Stephen Hawking: who would you name? We’ve had several individuals who broke the curve relative to intelligence. As defined by the Oxford Dictionary of the English Language, IQ is:

“an intelligence test score that is obtained by dividing mental age, which reflects the age-graded level of performance as derived from population norms, by chronological age and multiplying by 100: a score of 100 thus indicates a performance at exactly the normal level for that age group. Abbreviation: IQ”

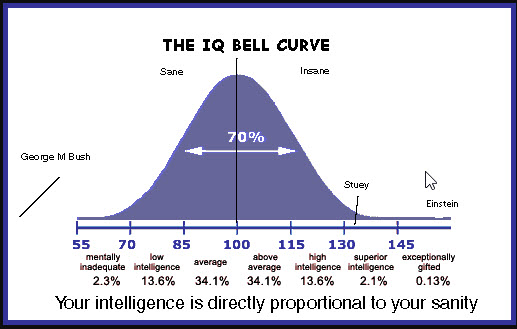

An intelligence quotient, or IQ, is a score derived from one of several standardized tests designed to measure intelligence. The term “IQ” is a translation of the German Intelligenzquotient and was coined by the German psychologist William Stern in 1912. Dr. Stern proposed it as a method of scoring early modern intelligence tests for children, such as those developed by Alfred Binet and Théodore Simon in the early twentieth century. Although the term “IQ” is still in use, the scoring of modern IQ tests such as the Wechsler Adult Intelligence Scale is now based on a projection of the subject’s measured rank onto the Gaussian bell curve, with a center value of one hundred (100) and a standard deviation of fifteen (15). Earlier editions of the Stanford-Binet test used a standard deviation of sixteen (16). As you can see from the graphic above, roughly sixty-eight percent (68%) of the human population has an IQ between eighty-five and one hundred and fifteen. From one hundred and fifteen to one hundred and thirty you are considered to be highly intelligent. Above one hundred and thirty you are exceptionally gifted.
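The two scoring schemes described here, Stern's ratio IQ and the modern bell-curve (deviation) IQ, fit in a few lines of Python. This is a minimal sketch, assuming an idealized normal distribution with mean 100 and standard deviation 15; real tests are normed on sample populations, and the function names are hypothetical.

```python
from statistics import NormalDist

def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Stern-style ratio IQ: mental age divided by chronological age, times 100."""
    return 100 * mental_age / chronological_age

def share_between(low: float, high: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Fraction of an idealized normal population scoring between low and high."""
    dist = NormalDist(mu=mean, sigma=sd)
    return dist.cdf(high) - dist.cdf(low)

if __name__ == "__main__":
    # A 10-year-old who performs like a typical 12-year-old.
    print(ratio_iq(mental_age=12, chronological_age=10))  # prints 120.0
    # Deviation-IQ bands on a mean-100, SD-15 bell curve.
    print(f"85-115:  {share_between(85, 115):.1%}")
    print(f"115-130: {share_between(115, 130):.1%}")
    print(f"130+:    {share_between(130, float('inf')):.1%}")
```

The 85 to 115 band comes out near 68%, which is the "one standard deviation" slice of the familiar 68-95-99.7 rule.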

What are several qualities of highly intelligent people? Let’s look.

QUALITIES:

- A great deal of self-control.

- Very curious

- They are avid readers

- They are intuitive

- They love learning

- They are adaptable

- They are risk-takers

- They are NOT over-confident

- They are open-minded

- They are somewhat introverted

You probably know individuals who fit this profile. We are going to look at one right now: John von Neumann.

JOHN von NEUMANN:

The Financial Times of London celebrated John von Neumann as “The Man of the Century” on Dec. 24, 1999. The headline hailed him as the “architect of the computer age,” not only the “most striking” person of the 20th century, but its “pattern-card”—the pattern from which modern man, like the newest fashion collection, is cut.

The Financial Times and others characterize von Neumann’s importance for the development of modern thinking by what are termed his three great accomplishments, namely:

(1) Von Neumann is the inventor of the computer. All computers in use today have the “architecture” von Neumann developed, which makes it possible to store the program, together with data, in working memory.

(2) By comparing human intelligence to computers, von Neumann laid the foundation for “Artificial Intelligence,” which is taken to be one of the most important areas of research today.

(3) Von Neumann used his “game theory” to develop a dominant tool for economic analysis, which gained recognition in 1994 when the Nobel Prize for economic sciences was awarded to John C. Harsanyi, John F. Nash, and Richard Selten.
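At the heart of von Neumann's game theory is his minimax theorem: every finite two-person zero-sum game has a value that each player can guarantee by using a mixed (randomized) strategy. The sketch below solves the smallest nontrivial case, a 2x2 game, with the standard indifference formula. The function name and example payoffs are illustrative, and it assumes the game has no saddle point (otherwise a pure strategy is already optimal, and the code says so).

```python
from fractions import Fraction

def solve_2x2_zero_sum(a, b, c, d):
    """Row player's optimal mixed strategy in a 2x2 zero-sum game.

    Payoffs to the row player are [[a, b], [c, d]]; the column player
    receives the negatives. Returns (p, value), where p is the probability
    of playing the first row and value is the expected payoff at the
    minimax solution.
    """
    a, b, c, d = (Fraction(x) for x in (a, b, c, d))
    maximin = max(min(a, b), min(c, d))  # best guaranteed payoff with a pure row
    minimax = min(max(a, c), max(b, d))  # best guaranteed cap with a pure column
    if maximin == minimax:
        raise ValueError("saddle point: a pure strategy is already optimal")
    denom = a - b - c + d
    # Choose p so the column player is indifferent between the two columns.
    p = (d - c) / denom
    value = (a * d - b * c) / denom
    return p, value

if __name__ == "__main__":
    # Matching pennies: the row player wins 1 on a match and loses 1 otherwise.
    print(solve_2x2_zero_sum(1, -1, -1, 1))  # prints (Fraction(1, 2), Fraction(0, 1))
```

For matching pennies the answer is the intuitive one: flip a fair coin, and neither side can do better than break even on average.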

John von Neumann, original name János Neumann (born December 28, 1903, Budapest, Hungary; died February 8, 1957, Washington, D.C.), was a Hungarian-born American mathematician. As an adult, he appended von to his surname; the hereditary title had been granted to his father in 1913. Von Neumann grew from child prodigy to one of the world’s foremost mathematicians by his mid-twenties. Important work in set theory inaugurated a career that touched nearly every major branch of mathematics. Von Neumann’s gift for applied mathematics took his work in directions that influenced quantum theory, automata theory, economics, and defense planning. Von Neumann pioneered game theory and, along with Alan Turing and Claude Shannon, was one of the conceptual inventors of the stored-program digital computer.
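The core of the stored-program idea is that instructions and data sit in the same memory, and a fetch-decode-execute loop steps through them. The toy interpreter below is a minimal sketch of that idea, not a model of any real machine; its four-instruction set (LOAD, ADD, STORE, HALT) is invented purely for illustration.

```python
def run(memory):
    """Execute a toy stored-program machine until it reaches HALT.

    `memory` is one list holding both instructions and data. Instructions are
    (opcode, address) tuples; data cells are plain integers. The four opcodes
    are invented for this illustration and do not model any real computer.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, addr = memory[pc]  # fetch the instruction and decode its fields
        pc += 1
        if opcode == "LOAD":
            acc = memory[addr]
        elif opcode == "ADD":
            acc += memory[addr]
        elif opcode == "STORE":
            memory[addr] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")

# Program and data side by side in the same memory.
program = [
    ("LOAD", 4),   # address 0: acc <- memory[4]
    ("ADD", 5),    # address 1: acc <- acc + memory[5]
    ("STORE", 6),  # address 2: memory[6] <- acc
    ("HALT", 0),   # address 3: stop (the address field is ignored)
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: the result is stored here
]

if __name__ == "__main__":
    print(run(list(program))[6])  # prints 5
```

Because the instructions are ordinary entries in `memory`, a program can be changed just by writing new values into memory; that interchangeability of code and data is what "stored program" means.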

Von Neumann did exhibit signs of genius in early childhood: he could joke in Classical Greek and, for a family stunt, he could quickly memorize a page from a telephone book and recite its numbers and addresses. Von Neumann learned languages and math from tutors and attended Budapest’s most prestigious secondary school, the Lutheran Gymnasium. The Neumann family fled Béla Kun’s short-lived communist regime in 1919 for a brief and relatively comfortable exile split between Vienna and the Adriatic resort of Abbazia. Upon completion of von Neumann’s secondary schooling in 1921, his father discouraged him from pursuing a career in mathematics, fearing that there was not enough money in the field. As a compromise, von Neumann simultaneously studied chemistry and mathematics. He earned a degree in chemical engineering from the Swiss Federal Institute in Zurich and a doctorate in mathematics (1926) from the University of Budapest.

OK, that’s all well and good, but do we know the IQ of Dr. John von Neumann?

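John von Neumann’s IQ is often quoted as 190, which is considered super-genius territory. On a scale with a standard deviation of fifteen, that is six standard deviations above the mean, a level far rarer than the top 0.1% of the population (which begins at roughly 146).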
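A short calculation shows why a score of 190 and "the top 0.1%" describe very different levels. This sketch assumes an idealized normal distribution with mean 100 and standard deviation 15; real test norms are not reliable that far into the tail, so treat the numbers as illustrations of the bell curve rather than facts about any person. The helper names are hypothetical.

```python
import math
from statistics import NormalDist

MEAN, SD = 100.0, 15.0  # idealized deviation-IQ scale

def share_at_or_above(score: float) -> float:
    """Upper-tail fraction of a normal population; erfc keeps tiny tails accurate."""
    z = (score - MEAN) / SD
    return 0.5 * math.erfc(z / math.sqrt(2))

def score_for_top(fraction: float) -> float:
    """Score that marks the top `fraction` of the population."""
    return NormalDist(MEAN, SD).inv_cdf(1 - fraction)

if __name__ == "__main__":
    print(f"z-score of 190: {(190 - MEAN) / SD:.1f}")
    print(f"share at or above 190: {share_at_or_above(190):.2e}")
    print(f"top 0.1% starts near: {score_for_top(0.001):.1f}")
```

Under these assumptions the top 0.1% begins a little above 146, while a score of 190 would be expected in roughly one person per billion.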

With his marvelous IQ, he wrote one hundred and fifty (150) published papers in his life: sixty (60) in pure mathematics, twenty (20) in physics, sixty (60) in applied mathematics, and the remainder on special mathematical or non-mathematical subjects. His last work, an unfinished manuscript written while in the hospital and later published in book form as The Computer and the Brain, gives an indication of the direction of his interests at the time of his death. It discusses how the brain can be viewed as a computing machine. The book is speculative in nature, but discusses several important differences between brains and computers of his day (such as processing speed and parallelism), as well as suggesting directions for future research. Memory is one of the central themes in his book.

I told you he was smart!

BENDABLE BATTERIES

February 1, 2019

I always marvel at the pace of technology and how that technology fills a definite need for products only dreamt of previously. We have all heard that “necessity is the mother of invention,” and I believe that to a tee. We need it, we can’t find it, no one makes it, so let’s invent it. This is the way adults solve problems. Every week technology improves our lives, giving us labor-saving devices that “tomorrow” will become commonplace. All electromechanical devices run on current driven by an applied voltage, and many of these devices use battery power for portability. Lithium-ion batteries seem to be the batteries of choice right now due to their ability to hold a charge and their ability to fast-charge.

Pioneering work with the lithium battery began in 1912 under G.N. Lewis, but it was not until the early 1970s that the first non-rechargeable lithium batteries became commercially available. Lithium is the lightest of all metals, has the greatest electrochemical potential and provides the largest energy density for weight.

The energy density of lithium-ion is typically twice that of the standard nickel-cadmium. This is a huge advantage recognized by engineers and scientists the world over, and there is potential for even higher energy densities. The load characteristics are reasonably good, and the discharge behavior is similar to that of nickel-cadmium. The high cell voltage of 3.6 volts allows battery pack designs with only one cell; most of today’s mobile phones run on a single cell. A nickel-based pack would require three 1.2-volt cells connected in series.
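The series-cell arithmetic in this paragraph is easy to check in code. This is a minimal sketch that works in integer millivolts to sidestep floating-point rounding; the 3.6 V and 1.2 V figures are the nominal values quoted in the text, and the function names are hypothetical.

```python
def cells_in_series(target_mv: int, cell_mv: int) -> int:
    """Smallest number of identical cells whose series voltage reaches the target."""
    return -(-target_mv // cell_mv)  # integer ceiling division

def pack_voltage_mv(cells: int, cell_mv: int) -> int:
    """In a series string, the cell voltages add."""
    return cells * cell_mv

LI_ION_CELL_MV = 3600  # nominal lithium-ion cell voltage quoted in the text
NICKEL_CELL_MV = 1200  # nominal nickel-based cell voltage quoted in the text

if __name__ == "__main__":
    target_mv = 3600  # a device designed around one lithium-ion cell
    for name, cell_mv in (("lithium-ion", LI_ION_CELL_MV), ("nickel-based", NICKEL_CELL_MV)):
        n = cells_in_series(target_mv, cell_mv)
        print(f"{name}: {n} cell(s) in series -> {pack_voltage_mv(n, cell_mv) / 1000:.1f} V")
```

Both packs reach 3.6 V, but the nickel-based one needs three cells (and three sets of casings and connections) to do it.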

Lithium-ion is a low maintenance battery, an advantage that most other chemistries cannot claim. There is no memory and no scheduled cycling is required to prolong the battery’s life. In addition, the self-discharge is less than half that of nickel-cadmium, making lithium-ion well suited for modern fuel gauge applications. Lithium-ion cells cause little harm when disposed of.

If we look at advantages and disadvantages, we see the following:

Advantages

- High energy density – potential for yet higher capacities.

- Does not need prolonged priming when new. One regular charge is all that’s needed.

- Relatively low self-discharge – self-discharge is less than half that of nickel-based batteries.

- Low Maintenance – no periodic discharge is needed; there is no memory.

- Specialty cells can provide very high current to applications such as power tools.

Limitations

- Requires protection circuit to maintain voltage and current within safe limits.

- Subject to aging, even if not in use – storage in a cool place at 40% charge reduces the aging effect.

- Transportation restrictions – shipment of larger quantities may be subject to regulatory control. This restriction does not apply to personal carry-on batteries.

- Expensive to manufacture – about 40 percent higher in cost than nickel-cadmium.

- Not fully mature – metals and chemicals are changing on a continuing basis.

One amazing property of Li-ion batteries is that they can be formed into flexible, bendable shapes. Let’s take a look.

Researchers have published work on a new technology that will definitely fill a need.

ULSAN NATIONAL INSTITUTE OF SCIENCE AND TECHNOLOGY:

Researchers at the Ulsan National Institute of Science and Technology in Korea have developed an imprintable and bendable lithium-ion battery they claim is the world’s first, and could hasten the introduction of flexible smart phones that leverage flexible display technology, such as Samsung’s Youm flexible OLED.

Samsung first demonstrated this display technology at CES 2013 as the next step in the evolution of mobile-device displays. The battery could also potentially be used in other flexible devices that debuted at the show, such as a wristwatch and a tablet.

Ulsan researchers had help on the technology from Professor John A. Rogers of the University of Illinois, researchers Young-Gi Lee and Gwangman Kim of Korea’s Electronics and Telecommunications Research Institute, and researcher Eunhae Gil of Kangwon National University. Rogers was also part of the team that developed a breakthrough in transient electronics, or electronics that dissolve inside the body.

The Korea JoongAng Daily newspaper first reported the story, citing the South Korea Ministry of Education, Science and Technology, which co-funded the research with the National Research Foundation of Korea.

The key to the flexible battery technology lies in nanomaterials that can be applied to any surface to create fluid-like polymer electrolytes that are solid, not liquid, according to Ulsan researchers. This is in contrast to the lithium-ion batteries typical of today’s devices, which use liquefied electrolytes housed in square-shaped cases. Researchers say this also makes the flexible battery more stable and less prone to overheating.

“Conventional lithium-ion batteries that use liquefied electrolytes had problems with safety as the film that separates the electrolytes may melt under heat, in which case the positive and negative may come in contact, causing an explosion,” Lee told the Korean newspaper. “Because the new battery uses flexible but solid materials, and not liquids, it can be expected to show a much higher level of stability than conventional rechargeable batteries.”

This potential explosiveness of the materials in lithium-ion batteries, which in the past received attention because of exploding mobile devices, has been in the news again recently in the case of the Boeing 787 Dreamliner, which has had several instances of lithium-ion batteries leaking liquid. The problems have grounded Boeing’s next-generation wide-body jet until they are investigated and resolved.

This is a very short posting, but one I felt would be of great interest to my readers. New technology, i.e., cutting-edge stuff, is fun to write about and possibly useful to learn. Hope you enjoy this one.

Please send me your comments: bobjengr@comcast.net.

COMPUTER SIMULATION

January 20, 2019

More and more engineers, systems analysts, biochemists, city planners, medical practitioners and individuals in entertainment fields are moving toward computer simulation. Let’s take a quick look at simulation, and then we will discover several examples of how very powerful this technology can be.

WHAT IS COMPUTER SIMULATION?

Simulation modelling is an excellent tool for analyzing and optimizing dynamic processes. Specifically, when mathematical optimization of complex systems becomes infeasible, and when conducting experiments within real systems is too expensive, time consuming, or dangerous, simulation becomes a powerful tool. The aim of simulation is to support objective decision making by means of dynamic analysis, to enable managers to safely plan their operations, and to save costs.

A computer simulation or a computer model is a computer program that attempts to simulate an abstract model of a particular system. … Computer simulations build on and are useful adjuncts to purely mathematical models in science, technology and entertainment.

Computer simulations have become a useful part of mathematical modelling of many natural systems in physics, chemistry and biology, human systems in economics, psychology, and social science and in the process of engineering new technology, to gain insight into the operation of those systems. They are also widely used in the entertainment fields.

Traditionally, the formal modeling of systems has been done with mathematical models, which attempt to find analytical solutions to problems, enabling the prediction of the system’s behavior from a set of parameters and initial conditions. The word prediction is a very important word in the overall process. One very critical part of the predictive process is designating the parameters properly: not only the upper and lower specifications, but also the parameters that define intermediate processes.

The reliability of, and the trust people put in, computer simulations depend on the validity of the simulation model. The degree of trust is directly related to the software itself and the reputation of the company producing it. There will be considerably more in this course regarding vendors providing software to companies wishing to simulate processes and solve complex problems.

Computer simulations find use in the study of dynamic behavior in environments that may be difficult or dangerous to recreate in real life. For example, a nuclear blast may be represented with a mathematical model that takes into consideration various elements such as velocity, heat and radioactive emissions. One may then explore changes by altering other variables, like the amount of fissionable material used in the blast. Another application involves predicting the behavior of weather systems. The mathematics involved is significantly complex and usually draws on a branch of math called “chaos theory.”
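The "chaos" connection to weather can be seen in the Lorenz equations, a three-variable model Edward Lorenz derived from atmospheric convection in 1963. The sketch below runs two simulations whose starting points differ by one part in a hundred million and reports how far apart they drift. The parameter values are the classic textbook ones, the integrator is a plain fourth-order Runge-Kutta scheme, and the helper names are hypothetical; real weather models are vastly larger.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations with the classic parameter values."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(f, state, dt):
    """Advance a system of ODEs by one classical fourth-order Runge-Kutta step."""
    def nudge(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))
    k1 = f(state)
    k2 = f(nudge(state, k1, dt / 2))
    k3 = f(nudge(state, k2, dt / 2))
    k4 = f(nudge(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def separation_over_time(delta=1e-8, dt=0.01, steps=4000, report_every=500):
    """Run two 'forecasts' whose starting x values differ by `delta`.

    Returns (time, distance between the two states) at regular intervals.
    """
    a = (1.0, 1.0, 1.0)
    b = (1.0 + delta, 1.0, 1.0)
    history = []
    for step in range(1, steps + 1):
        a = rk4_step(lorenz, a, dt)
        b = rk4_step(lorenz, b, dt)
        if step % report_every == 0:
            gap = sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5
            history.append((step * dt, gap))
    return history

if __name__ == "__main__":
    for t, gap in separation_over_time():
        print(f"t = {t:5.1f}   separation = {gap:.2e}")
```

The gap grows roughly exponentially until the two runs are as far apart as two unrelated states of the system, which is why long-range weather forecasts lose skill no matter how good the computer is.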

Simulations largely help in determining behaviors when individual components of a system are altered. Simulations can also be used in engineering to determine potential effects, such as the effect of dam construction on a river system. Some companies call these “what-if” scenarios because they allow the engineer or scientist to apply differing parameters to discern cause-effect interaction.
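A "what-if" study is simply the same model run repeatedly with different inputs. As a minimal sketch in the spirit of the dam example, here is a hypothetical one-reservoir daily water balance swept over three candidate release rates. The inflow record, capacity, and release rates are invented for illustration, and the function names are not from any real package.

```python
def simulate_reservoir(inflows, release, start, capacity):
    """Daily water balance for one reservoir behind a dam.

    storage(t+1) = storage(t) + inflow(t) - release, kept between 0 and capacity.
    Water above capacity is counted as spill over the dam. Units are an
    arbitrary volume per day. Returns (peak storage, total spill).
    """
    storage, peak, spill = start, start, 0.0
    for inflow in inflows:
        storage += inflow - release
        if storage > capacity:
            spill += storage - capacity
            storage = capacity
        storage = max(storage, 0.0)  # an empty reservoir cannot release more water
        peak = max(peak, storage)
    return peak, spill

def what_if(inflows, releases, start=50.0, capacity=100.0):
    """Run the same model once per candidate release rate."""
    return {r: simulate_reservoir(inflows, r, start, capacity) for r in releases}

if __name__ == "__main__":
    # Hypothetical inflow record: ten ordinary days, a five-day flood pulse, ten more ordinary days.
    inflows = [5.0] * 10 + [30.0] * 5 + [5.0] * 10
    for release, (peak, spill) in what_if(inflows, [5.0, 10.0, 15.0]).items():
        print(f"release {release:4.1f}/day -> peak storage {peak:5.1f}, spill {spill:5.1f}")
```

Reading the runs side by side gives the cause-and-effect comparison: with these made-up numbers, a release of 5 per day spills 75 units during the flood pulse, 10 per day just reaches capacity without spilling, and 15 per day tops out at 75.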

One great advantage a computer simulation has over a purely mathematical model is that it provides a visual representation of events over time. You can actually see the action and chain of events with simulation and investigate the parameters for acceptance. You can also examine the limits of acceptability: all components and assemblies have upper and lower specification limits and must perform within those limits.

Computer simulation is the discipline of designing a model of an actual or theoretical physical system, executing the model on a digital computer, and analyzing the execution output. Simulation embodies the principle of “learning by doing” — to learn about the system we must first build a model of some sort and then operate the model. The use of simulation is an activity that is as natural as a child who role plays. Children understand the world around them by simulating (with toys and figurines) most of their interactions with other people, animals and objects. As adults, we lose some of this childlike behavior but recapture it later on through computer simulation. To understand reality and all of its complexity, we must build artificial objects and dynamically act out roles with them. Computer simulation is the electronic equivalent of this type of role playing and it serves to drive synthetic environments and virtual worlds. Within the overall task of simulation, there are three primary sub-fields: model design, model execution and model analysis.
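The three sub-fields (model design, model execution and model analysis) show up even in a toy problem. This is a minimal sketch, assuming Newton's law of cooling as the model and a simple forward-Euler integrator; the coefficient values are invented for illustration, and commercial simulation packages use far more sophisticated solvers.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

# 1) Model design: state the assumptions as a model with parameters.
@dataclass
class CoolingModel:
    """Newton's law of cooling: dT/dt = -k * (T - t_env)."""
    k: float      # cooling constant, 1/min (assumed value)
    t_env: float  # ambient temperature, deg C
    t0: float     # starting temperature, deg C

# 2) Model execution: step the model forward in time on the computer.
def execute(model: CoolingModel, dt: float, minutes: float) -> List[Tuple[float, float]]:
    """Forward-Euler integration; returns (time, temperature) pairs."""
    temp = model.t0
    trace = [(0.0, temp)]
    for i in range(1, round(minutes / dt) + 1):
        temp -= model.k * (temp - model.t_env) * dt
        trace.append((i * dt, temp))
    return trace

# 3) Model analysis: extract an answer from the execution output.
def first_time_at_or_below(trace: List[Tuple[float, float]], threshold: float) -> Optional[float]:
    """Earliest simulated time at which the temperature reaches the threshold."""
    for t, temp in trace:
        if temp <= threshold:
            return t
    return None

if __name__ == "__main__":
    model = CoolingModel(k=0.1, t_env=20.0, t0=90.0)
    trace = execute(model, dt=0.1, minutes=30.0)
    simulated = first_time_at_or_below(trace, 40.0)
    exact = math.log((model.t0 - model.t_env) / (40.0 - model.t_env)) / model.k
    print(f"simulated: {simulated:.2f} min, exact: {exact:.2f} min")
```

Comparing the simulated answer with the exact one (about 12.53 minutes for these numbers) is a small-scale version of checking a simulation model's validity before trusting it.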

REAL-WORLD SIMULATION:

The following examples are taken from computer screens representing real-world situations and/or problems that need solutions. As mentioned earlier, “what-ifs” may be explored by animating the computer model, revealing cause and effect and the responses to desired inputs. Let’s take a look.

A great host of mechanical and structural problems may be solved by using computer simulation. The example above shows how the diameters of two matching holes may be affected by applying heat to the bracket.
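Behind a thermal-expansion study like the bracket example sits a simple relationship, D = D0(1 + αΔT), where α is the material's coefficient of linear expansion; a hole grows as if it were made of the surrounding metal. The sketch below applies it to a heated steel bracket and a mating hole left at room temperature. The coefficients are typical handbook approximations, and the dimensions, tolerance, and function names are hypothetical. A full simulation would also capture uneven heating and the bracket's actual shape.

```python
# Approximate coefficients of linear thermal expansion near room temperature,
# per degree C. Typical handbook figures; actual values depend on the alloy.
ALPHA_PER_C = {"steel": 12e-6, "aluminum": 23e-6}

def heated_diameter(d0_mm: float, delta_t_c: float, material: str) -> float:
    """D = D0 * (1 + alpha * dT): a hole grows as if it were solid metal.

    Assumes the part heats uniformly and the temperature change is modest.
    """
    return d0_mm * (1 + ALPHA_PER_C[material] * delta_t_c)

def holes_match(d_a_mm: float, d_b_mm: float, tolerance_mm: float) -> bool:
    """True if the two hole diameters differ by no more than the tolerance."""
    return abs(d_a_mm - d_b_mm) <= tolerance_mm

if __name__ == "__main__":
    nominal = 10.000   # mm, both holes at room temperature (hypothetical)
    tolerance = 0.010  # mm, allowed mismatch (hypothetical)
    for delta_t in (25.0, 50.0, 100.0):
        bracket = heated_diameter(nominal, delta_t, "steel")  # heated bracket
        mating = nominal                                      # mating part stays cool
        print(f"dT = {delta_t:5.1f} C: bracket hole {bracket:.4f} mm, "
              f"match = {holes_match(bracket, mating, tolerance)}")
```

With these numbers the holes stay within tolerance up to a 50 degree rise but not at 100 degrees, the kind of limit a simulation helps locate.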

The Newtonian and non-Newtonian flow of fluids, i.e. liquids and gases, has always been a subject of concern within piping systems. Flow related to pressure and temperature may be approximated by simulation.
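For the simplest case, steady laminar flow of a Newtonian fluid through a straight round pipe, the pressure-flow relationship has a closed form, the Hagen-Poiseuille equation Q = πr⁴ΔP/(8μL). The sketch below evaluates it and checks the Reynolds number, since the formula holds only for laminar flow (commonly taken as Re below about 2,000). The water properties are approximate room-temperature values and the tube dimensions are hypothetical. Non-Newtonian fluids and turbulent flow have no such simple formula, which is where simulation earns its keep.

```python
import math

def poiseuille_flow_rate(radius_m: float, length_m: float,
                         pressure_drop_pa: float, viscosity_pa_s: float) -> float:
    """Hagen-Poiseuille: Q = pi r^4 dP / (8 mu L), in m^3/s.

    Valid only for steady, fully developed laminar flow of a Newtonian fluid
    in a straight, rigid, circular pipe.
    """
    return math.pi * radius_m ** 4 * pressure_drop_pa / (8 * viscosity_pa_s * length_m)

def reynolds_number(flow_rate_m3_s: float, radius_m: float,
                    density_kg_m3: float, viscosity_pa_s: float) -> float:
    """Re = rho * v * D / mu with the mean velocity v = Q / (pi r^2)."""
    velocity = flow_rate_m3_s / (math.pi * radius_m ** 2)
    return density_kg_m3 * velocity * (2 * radius_m) / viscosity_pa_s

if __name__ == "__main__":
    # Water near room temperature (approximate properties) in a hypothetical 2 mm bore tube.
    mu, rho = 1.0e-3, 998.0
    q = poiseuille_flow_rate(radius_m=0.001, length_m=1.0, pressure_drop_pa=100.0, viscosity_pa_s=mu)
    re = reynolds_number(q, radius_m=0.001, density_kg_m3=rho, viscosity_pa_s=mu)
    regime = "laminar" if re < 2000 else "may not be laminar"
    print(f"Q = {q:.3e} m^3/s, Re = {re:.1f} ({regime})")
```

The r⁴ term is the striking design lesson: doubling the pipe radius multiplies the flow sixteenfold at the same pressure drop.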


Electromagnetics is an extremely complex field. The image above shows how a magnetic field reacts to an applied voltage.

Chemical engineers are very concerned with reaction time when chemicals are mixed. One example might be the ignition time when an oxidizer comes in contact with fuel.

Acoustics, or how sound propagates through a physical device or structure, can also be simulated.

The transfer of heat from a warmer surface to a colder one has always been a subject of concern. Simulation programs are extremely valuable in visualizing this transfer.
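For steady conduction through a flat wall, Fourier's law gives the heat flow directly: q = kA(T_hot - T_cold)/L. This is a minimal sketch with a conductivity roughly that of window glass, chosen only for illustration; the function name is hypothetical. The sign convention makes the physics explicit: heat always flows from the warmer side to the colder one.

```python
def conduction_heat_flow_w(k_w_per_m_k: float, area_m2: float,
                           t_side_a_c: float, t_side_b_c: float,
                           thickness_m: float) -> float:
    """Steady one-dimensional conduction through a flat wall (Fourier's law).

    Returns the heat flow from side A to side B in watts. A positive result
    means heat moves from A to B; a negative result means it moves from B to A.
    Either way, heat flows from the warmer side to the colder side.
    """
    return k_w_per_m_k * area_m2 * (t_side_a_c - t_side_b_c) / thickness_m

if __name__ == "__main__":
    # Hypothetical 1 m^2 pane, 4 mm thick, k ~ 0.8 W/(m K) (roughly window glass).
    q = conduction_heat_flow_w(0.8, 1.0, 20.0, 10.0, 0.004)
    print(f"{q:.0f} W flows from the 20 C side to the 10 C side")
```

This bare-glass figure ignores the still-air layers at each surface, so it overstates real heat loss through a window; capturing effects like that is what full heat-transfer simulation is for.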

Equation-based models can be simulated to show how a structure, in this case a metal plate, is affected when forces are applied.

In addition to computer simulation, we have AR (augmented reality) and VR (virtual reality). Those subjects are fascinating but will require another post for another day. Hope you enjoy this one.

ASTROPHYSICS FOR PEOPLE IN A HURRY

March 15, 2018

Astrophysics for People in a Hurry was written by Neil deGrasse Tyson. I think the best place to start is with a brief bio of Dr. Tyson.

NEIL deGRASSE TYSON was born October 5, 1958, in New York City. When he was nine years old, his interest in astronomy was sparked by a trip to the Hayden Planetarium at the American Museum of Natural History in New York. Tyson followed that passion and received a bachelor’s degree in physics from Harvard University in Cambridge, Massachusetts, in 1980 and a master’s degree in astronomy from the University of Texas at Austin in 1983. He began writing a question-and-answer column for the University of Texas’s popular astronomy magazine StarDate, and material from that column later appeared in his books Merlin’s Tour of the Universe (1989) and Just Visiting This Planet (1998).

Tyson then earned a master’s (1989) and a doctorate in astrophysics (1991) from Columbia University, New York City. He was a postdoctoral research associate at Princeton University from 1991 to 1994, when he joined the Hayden Planetarium as a staff scientist. His research dealt with problems relating to galactic structure and evolution. He became acting director of the Hayden Planetarium in 1995 and director in 1996. From 1995 to 2005 he wrote monthly essays for Natural History magazine, some of which were collected in Death by Black Hole: And Other Cosmic Quandaries (2007), and in 2000 he wrote an autobiography, The Sky Is Not the Limit: Adventures of an Urban Astrophysicist. His later books include Astrophysics for People in a Hurry (2017).

You can see from his biography that Dr. Tyson is a “heavy hitter” and knows his subject inside and out. His newest book, “Astrophysics for People in a Hurry,” treats readers with respect relative to their time. During the summer of 2017, it was number one on the New York Times best seller list for four (4) consecutive months, and it has remained on that list ever since its publication. The book is small, only two hundred and nine (209) pages, but please do not let its length fool you. It is extremely well written and “loaded” with facts relevant to the subject matter. Very concise and to the point. I would now like to give you some idea of the content by copying several short passages from the book that indicate what you will be dealing with as a reader.

- In the beginning, nearly fourteen billion years ago, all the space and all the matter and all the energy of the known universe was contained in a volume less than one-trillionth the size of the period that ends this sentence.

- As the universe aged through 10^-55 seconds, it continued to expand, diluting all concentrations of energy, and what remained of the unified forces split into the “electroweak” and the “strong nuclear” forces.

- As the cosmos continued to expand and cool, growing larger than the size of our solar system, the temperature dropped rapidly below a trillion degrees Kelvin.

- After cooling, one electron for every proton has been “frozen” into existence. As the cosmos continues to cool, dropping below a hundred million degrees, protons fuse with other protons as well as with neutrons, forming atomic nuclei and hatching a universe in which ninety percent of these nuclei are hydrogen and ten percent are helium, along with trace amounts of deuterium (heavy hydrogen), tritium (even heavier hydrogen), and lithium.

- For the first billion years, the universe continued to expand and cool as matter gravitated into the massive concentrations we call galaxies. Nearly a hundred billion of them formed, each containing hundreds of billions of stars that undergo thermonuclear fusion in their cores.

Dr. Tyson also discusses Dark Matter, Dark Energy, Invisible Light, Exoplanet Earth and many other fascinating subjects that can be absorbed in “quick time.” It is a GREAT read and one I can definitely recommend to you.

ARECIBO

September 27, 2017

Hurricane Maria, as you well know, has caused massive damage to the island of Puerto Rico. At this writing, the entire island is without power and is struggling to exist without water, telephone communication, health and sanitation facilities. The pictures below give some indication of the devastation.

Maria made landfall in the southeastern part of the U.S. territory on Wednesday with winds reaching 155 miles per hour, knocking out electricity across the island. Its amazingly strong winds devastated the island, and the storm flooded parts of downtown San Juan, downed trees and ripped the roofs from homes. Puerto Rico has little financial wherewithal to navigate a major catastrophe, given its decision in May to seek protection from creditors after a decade of economic decline, excessive borrowing and the loss of residents to the U.S. mainland. Right now, PR is totally dependent upon the United States for recovery.

Imagine winds strong enough to damage an automobile and leave it positioned as shown above. I cannot even tell the make of this car, but we must assume it weighs at least two thousand pounds, and yet it was thrown into the air like a paper plane.

One huge issue is clearing roads so supplies for relief and medical attention can be delivered to the people. This is a huge task.

One question I had: how about Arecibo? Did the radio telescope survive, and if so, what damage was sustained? The picture below shows the Arecibo Radio Telescope during “better times.”

Five decades ago, scientists sought a radio telescope that was close to the equator, according to Arecibo’s website. This location would allow the telescope to track planets passing overhead, while also probing the nature of the ionosphere, the layer of the atmosphere in which charged particles produce the northern lights. The telescope is part of the National Astronomy and Ionosphere Center. The National Science Foundation has a co-operative agreement with the three entities that operate it: SRI International, the Universities Space Research Association and UMET (Metropolitan University). That radio telescope has provided an absolute wealth of information about our solar system and about bodies outside it.

The Arecibo Observatory contains the second-largest radio telescope in the world, and that telescope has been out of service ever since Hurricane Maria hit Puerto Rico on Sept. 20. Maria hit the island as a Category 4 hurricane.

While Puerto Rico suffered catastrophic damage across the island, the Arecibo Observatory suffered “relatively minor damages,” Francisco Córdova, the director of the observatory, said in a Facebook post on Sunday (Sept. 24).

In the words of Mr. Córdova: “Still standing after #HurricaneMaria! We suffered some damages, but nothing that can’t be repaired or replaced! More updates to follow in the coming days as we complete our detailed inspections. We stand together with Puerto Rico as we recover from this storm. #PRStrong”

Despite Córdova’s optimistic message, staff members and other residents of Puerto Rico are in a pretty bad situation. Power has yet to be restored to the island since the storm hit, and people are running out of fuel for generators. With roads still blocked by fallen trees and debris, transporting supplies to people in need is no simple task.

National Geographic’s Nadia Drake, who has been in contact with the observatory and has provided extensive updates via Twitter, reported that “some staff who have lost homes in town are moving on-site” to the facility, which weathered the storm pretty well overall. Drake also reported that the observatory “will likely be serving as a FEMA emergency center,” helping out members of the community who lost their homes in the storm.

The mission of Arecibo will continue but it may be a long time before the radio telescope is fully functional. Let’s just hope the lives of the people manning the telescope can be put back in order quickly so important and continued work may again be accomplished.

ROBONAUTS

September 4, 2016

OK, if you are like me, you’re sitting there asking yourself just what on Earth a robonaut is. A robot is an electromechanical device used primarily to take the labor, and sometimes the danger, out of human activity. As you well know, robotic systems have been in use for many years, with each year bringing systems of increasing sophistication. An astronaut is an individual operating in outer space. Let’s take the proper definition of a Robonaut as provided by NASA.

“A Robonaut is a dexterous humanoid robot built and designed at NASA Johnson Space Center in Houston, Texas. Our challenge is to build machines that can help humans work and explore in space. Working side by side with humans, or going where the risks are too great for people, Robonauts will expand our ability for construction and discovery. Central to that effort is a capability we call dexterous manipulation, embodied by an ability to use one’s hand to do work, and our challenge has been to build machines with dexterity that exceeds that of a suited astronaut.”

My information is derived from the July 2016 issue of “NASA Tech Briefs” (Vol. 40, No. 7).

If you had your own personal robotic system, what would you ask that system to do? Several options surface in my world: 1.) Mow the lawn, 2.) Trim hedges, 3.) Wash my cars, 4.) Clean the gutters, 5.) Vacuum floors in our house, 6.) Wash windows, and 7.) Do the laundry. (As you can see, I’m not really into yard work or even housework.) Just about all of these are routine, time-consuming jobs around the home.

For NASA, the International Space Station (ISS) has become a marvelous test-bed for developing the world’s most advanced robotic technology, technology that definitely represents the cutting edge in space exploration and ground research. The ISS now hosts a significant array of state-of-the-art robotic projects, including human-scale dexterous robots and free-flying robots. (NOTE: Astrobee is a free-flying robotic system developed for NASA. It consists of structure, propulsion, power, guidance, navigation and control (GN&C), command and data handling (C&DH), avionics, communications, dock mechanism and perching arm subsystems. The Astrobee element is designed to be self-contained and capable of autonomous localization, orientation, navigation and holonomic motion, as well as autonomous resupply of consumables, while operating inside the U.S. Orbital Segment (USOS) of the ISS.) These robotic systems are not only enabling the future of human-robot space exploration but also promising extraordinary benefits for Earth-bound applications.

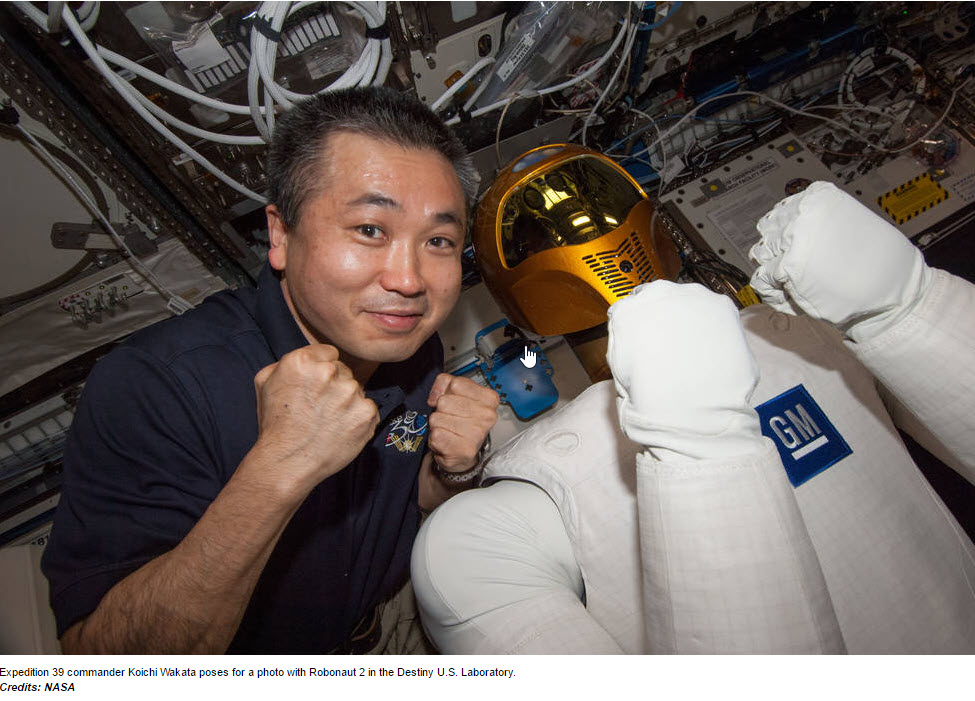

The initial purpose of designing and building a humanoid robotic system was to assist astronauts with tasks in which an additional pair (or pairs) of hands would be very helpful, or to perform jobs either too hazardous or too mundane for crewmembers. Robonaut 2 (R2), NASA’s first humanoid robot in space, was selected as the NASA Government Invention of the Year for 2014. Many outstanding inventions were considered for this award, but Robonaut 2 was chosen after a challenging review by the NASA selection committee, which evaluated the robot in the following areas: 1.) Aerospace Significance, 2.) Industry Significance, 3.) Humanitarian Significance, 4.) Technology Readiness Level, 5.) NASA Use, and 6.) Industry Use and Creativity. Robonaut 2 technologies have resulted in thirty-nine (39) issued patents, with several more under review. The NASA Invention of the Year is a first for a humanoid robot and another in a series of firsts for Robonaut 2, which include: first robot inside a human space vehicle operating without a cage, and first robot to work with human-rated tools in space. The R2 system developed by NASA is shown in the following JPEGs:

R2 powered up for the first time in August 2011. Since then, robotics engineers have tested R2 on the ISS, completing tasks ranging from air velocity measurements to handrail cleaning, simple but necessary tasks that require a great deal of crew time. R2 also has an on-board task of flipping switches and pushing buttons, controlled each time by space station crew members using virtual reality gear. According to Steve Gaddis, “we are currently working on teaching him how to look for handrails and avoid obstacles.”

The Robonaut project has been conducting research in robotics technology on board the International Space Station (ISS) since 2012. Recently, the original upper body humanoid robot was upgraded by the addition of two climbing manipulators (“legs”), more capable processors, and new sensors. While Robonaut 2 (R2) has been working through checkout exercises on orbit following the upgrade, technology development on the ground has continued to advance. Through the Active Reduced Gravity Offload System (ARGOS), the Robonaut team has been able to develop technologies that will enable full operation of the robotic testbed on orbit using similar robots located at the Johnson Space Center. Once these technologies have been vetted in this way, they will be implemented and tested on the R2 unit on board the ISS. The goal of this work is to create a fully-featured robotics research platform on board the ISS to increase the technology readiness level of technologies that will aid in future exploration missions.

One advantage of a humanoid design is that Robonaut can take over simple, repetitive, or especially dangerous tasks in places such as the International Space Station. Because R2 is approaching human dexterity, tasks such as changing out an air filter can be performed without modifications to the existing design.

More and more, we are seeing robotic systems do the work of humans. It is just a matter of time before we see them in use here on terra firma; by that I mean human-type robotic systems used to serve man. Let’s just hope we do not evolve into the “age of the machines”. I think I may take another look at the movie The Terminator.

SMARTS

August 2, 2016

On 13 October 2014 at 9:32 A.M., my ninety-two (92)-year-old mother died of Alzheimer’s. It was a very peaceful passing, but as her only son it was very painful to witness her gradual memory loss and the loss of all cognitive skills. Even though there is no cure, certain medications can slow the decline for a time. None were effective in her case.

Her condition once again piqued my interest in intelligence (I.Q.), smarts and intellect. Are we born with an I.Q. we cannot improve? How do culture and family environment affect intelligence? What activities, if any, diminish I.Q.? Just how much of our brain’s abilities does the average working-class person need and use each day? Obviously, some professions require greater intellect than others. How is I.Q. distributed over our species in general?

IQ tests are the most reliable (i.e. consistent) and valid (i.e. accurate and meaningful) type of psychometric test that psychologists use. They are well established as a good measure of general intelligence, or g. IQ tests are widely used in many contexts: educational, professional and recreational. Universities use IQ-style tests (e.g. SAT entrance exams) to select students, companies use them (job aptitude tests) to screen applicants, and high-IQ societies such as Mensa use IQ test scores as membership criteria.

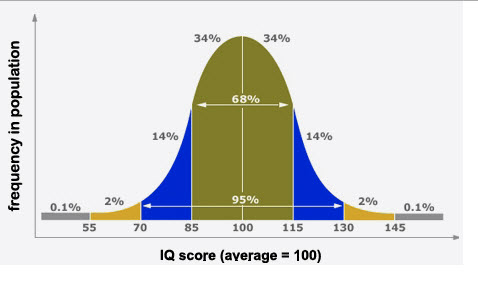

The bell-shaped curve shows the approximate distribution of intellect for our species.

The area under the curve between two scores corresponds to the percentage (%) of the population scoring in that range. The scores on this IQ bell curve are color-coded in ‘standard deviation units’. A standard deviation is a measure of the spread of the distribution, with fifteen (15) points representing one standard deviation for most IQ tests. About sixty-eight percent (68%) of the population score between eighty-five (85) and one hundred and fifteen (115), i.e. within plus or minus one standard deviation. A very small percentage of the population (about 0.3%, or roughly 1 in 370) have scores below fifty-five (55) or above one hundred and forty-five (145); that is, more than three (3) standard deviations out!

As you can see, the mean I.Q. is one hundred (100), with about ninety-five percent (95%) of the general population lying between seventy (70) and one hundred and thirty (130). Only about two percent (2%) of the population score above one hundred and thirty (130), and a tremendously small 0.13% (roughly 1 in 740) score in the genius range, above one hundred and forty-five (145).
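The percentages in this section follow from treating IQ scores as a normal (bell-shaped) distribution with a mean of 100 and a standard deviation of 15. Here is a minimal Python sketch, assuming a perfect normal curve (real test norms only approximate one), that computes the share of people between two scores using the standard library’s `math.erf`; the function names are illustrative, not from any testing standard.

```python
import math

MEAN = 100.0  # IQ scales are normed so the average score is 100
SD = 15.0     # one standard deviation on most modern IQ tests

def fraction_below(score: float) -> float:
    """Share of a normally distributed population scoring below `score` (the normal CDF)."""
    z = (score - MEAN) / SD  # distance from the mean in standard deviations
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def fraction_between(low: float, high: float) -> float:
    """Share of the population scoring between `low` and `high` (area under the curve)."""
    return fraction_below(high) - fraction_below(low)

if __name__ == "__main__":
    print(f"85 to 115:  {fraction_between(85, 115):.1%}")   # within 1 SD
    print(f"70 to 130:  {fraction_between(70, 130):.1%}")   # within 2 SD
    print(f"above 130:  {1 - fraction_below(130):.2%}")
    print(f"above 145:  {1 - fraction_below(145):.2%}")
    print(f"below 55 or above 145: {1 - fraction_between(55, 145):.2%}")
```

Running it gives roughly 68%, 95%, 2.3%, 0.13% and 0.27%, which is where the rounded figures for the one-, two- and three-standard-deviation bands come from.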

OK, who’s smart? Let’s look.

PRESENT AND LIVING:

- Garry Kasparov—190. Born in 1963 in Baku, in what is now Azerbaijan, Garry Kasparov is arguably the most famous chess player of all time. When he was seven, Kasparov enrolled at Baku’s Young Pioneer Palace; then at ten he started to train at the school of legendary Soviet chess player Mikhail Botvinnik. In 1980 Kasparov qualified as a grandmaster, and five years later he became the then youngest-ever outright world champion. He retained the championship title until 1993, and has held the position of world number one-ranked player for three times longer than anyone else. In 1996 he famously took on IBM computer Deep Blue, winning with a score of 4–2, although he lost to a much upgraded version of the machine the following year. In 2005 Kasparov retired from chess to focus on politics and writing. He has a reported IQ of 190.

- Philip Emeagwali-190. Dr. Philip Emeagwali, who has been called the “Bill Gates of Africa,” was born in Nigeria in 1954. Like many African schoolchildren, he dropped out of school at age 14 because his father could not continue paying Emeagwali’s school fees. However, his father continued teaching him at home, and every day Emeagwali performed mental exercises such as solving 100 math problems in one hour. His father taught him until Philip “knew more than he did.”

- Marilyn vos Savant—228. Marilyn vos Savant’s intelligence quotient (I.Q.) score of 228 is certainly one of the highest ever recorded. This very high I.Q. gave the St. Louis-born writer instant celebrity and earned her the sobriquet “the smartest person in the world.” Although vos Savant’s family was aware of her exceptionally high I.Q. scores on the Stanford-Binet test when she was ten (10) years old (she is also recognized as having the highest I.Q. score ever recorded by a child), her parents decided to withhold the information from the public in order to avoid commercial exploitation and assure her a normal childhood.

- Mislav Predavec—192. Mislav Predavec is a Croatian mathematics professor with a reported IQ of 192. “I always felt I was a step ahead of others. As material in school increased, I just solved the problems faster and better,” he has explained. Predavec was born in Zagreb in 1967, and his unique abilities were obvious from a young age. As for his adult achievements, since 2009 Predavec has taught at Zagreb’s Schola Medica Zagrabiensis. In addition, he has run the trading company Preminis since 1989. And in 2002 Predavec founded the exclusive IQ society GenerIQ, which forms part of his wider IQ society network. “Very difficult intelligence tests are my favorite hobby,” he has said. In 2012 the World Genius Directory ranked Predavec as the third smartest person in the world.

- Rick Rosner—192. U.S. television writer and pseudo-celebrity Richard Rosner is an unusual case. Born in 1960, he has led a somewhat checkered professional life: as well as writing for Jimmy Kimmel Live! and other TV shows, Rosner has, he says, been employed as a stripper, doorman, male model and waiter. In 2000 he infamously appeared on Who Wants to Be a Millionaire?, answered a question about the altitude of capital cities incorrectly, and reacted by suing the show, albeit unsuccessfully. Rosner placed second in the World Genius Directory’s 2013 Genius of the Year Awards; the site lists his IQ at 192, which places him just behind Greek psychiatrist Evangelos Katsioulis. Rosner reportedly hit the books for 20 hours a day to try to outdo Katsioulis, but to no avail.

- Christopher Langan—210. Born in San Francisco in 1952, self-educated Christopher Langan is a special kind of genius. By the time he turned four, he’d already taught himself how to read. At high school, according to Langan, he tutored himself in “advanced math, physics, philosophy, Latin and Greek, all that.” What’s more, he allegedly got 100 percent on his SAT test, even though he slept through some of it. Langan attended Montana State University but dropped out. Rather like the titular character in 1997 movie Good Will Hunting, Langan didn’t choose an academic career; instead, he worked as a doorman and developed his Cognitive-Theoretic Model of the Universe during his downtime. In 1999, on TV newsmagazine 20/20, neuropsychologist Robert Novelly stated that Langan’s IQ – said to be between 195 and 210 – was the highest he’d ever measured. Langan has been dubbed “the smartest man in America.”

- Evangelos Katsioulis—198. Katsioulis is known for his high intelligence test scores; there are several reports that he has achieved the highest scores ever recorded on IQ tests designed to measure exceptional intelligence. Katsioulis has a reported IQ of 205 on the Stanford-Binet scale, which uses a standard deviation of 16; that is equivalent to an IQ of 198.4 on a scale with a standard deviation of 15.

- Kim Ung-Yong—210. Before The Guinness Book of World Records withdrew its Highest IQ category in 1990, South Korean former child prodigy Kim Ung-Yong made the list with a score of 210. Kim was born in Seoul in 1963, and by the time he turned three, he could already read Korean, Japanese, English and German. When he was just eight years old, Kim moved to America to work at NASA. “At that time, I led my life like a machine. I woke up, solved the daily assigned equation, ate, slept, and so forth,” he has explained. “I was lonely and had no friends.” While he was in the States, Kim allegedly obtained a doctorate degree in physics, although this is unconfirmed. In any case, in 1978 he moved back to South Korea and went on to earn a Ph.D. in civil engineering.

- Christopher Hirata—225. Astrophysicist Chris Hirata was born in Michigan in 1982, and at the age of 13 he became the youngest U.S. citizen to receive an International Physics Olympiad gold medal. When he turned 14, Hirata apparently began studying at the California Institute of Technology, and he would go on to earn a bachelor’s degree in physics from the school in 2001. At 16 – with a reported IQ of 225 – he started doing work for NASA, investigating whether it would be feasible for humans to settle on Mars. Then in 2005 he went on to obtain a Ph.D. in physics from Princeton. Hirata is currently a physics and astronomy professor at The Ohio State University. His specialist fields include dark energy, gravitational lensing, the cosmic microwave background, galaxy clustering, and general relativity. “If I were to say Chris Hirata is one in a million, that would understate his intellectual ability,” said a member of staff at his high school in 1997.

- Terence Tao—230. Born in Adelaide in 1975, Australian former child prodigy Terence Tao didn’t waste any time flexing his educational muscles. When he was two years old, he was able to perform simple arithmetic. By the time he was nine, he was studying college-level math courses. And in 1988, aged just 13, he became the youngest gold medal recipient in International Mathematical Olympiad history, a record that still stands today. In 1992 Tao earned a master’s degree in mathematics from Flinders University in Adelaide, the institution from which he’d attained his B.Sc. the year before. Then in 1996, aged 20, he earned a Ph.D. from Princeton, turning in a thesis entitled “Three Regularity Results in Harmonic Analysis.” Tao’s long list of awards includes a 2006 Fields Medal, and he is currently a mathematics professor at the University of California, Los Angeles.

- Stephen Hawking—235. Guest appearances on TV shows such as The Simpsons, Futurama and Star Trek: The Next Generation have helped cement English astrophysicist Stephen Hawking’s place in the pop cultural domain. Hawking was born in 1942, and in 1959, when he was 17 years old, he received a scholarship to read physics and chemistry at Oxford University. He earned a bachelor’s degree in 1962 and then moved on to Cambridge to study cosmology. Diagnosed with motor neuron disease at the age of 21, Hawking became depressed and almost gave up on his studies. However, inspired by his relationship with his fiancée, and soon-to-be first wife, Jane Wilde, he returned to his academic pursuits and obtained his Ph.D. in 1965. Hawking is perhaps best known for his pioneering theories on black holes and his bestselling 1988 book A Brief History of Time.
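Katsioulis’s two numbers (205 and 198.4) describe the same rarity on two different scales. The conversion works by expressing the score as a z-score, the number of standard deviations above the mean, on one scale and then re-expressing that z-score on the other. A small Python sketch, assuming both scales are centred on 100; the helper name `convert_iq` is hypothetical:

```python
def convert_iq(score: float, from_sd: float, to_sd: float, mean: float = 100.0) -> float:
    """Re-express an IQ score from a scale with SD `from_sd` to one with SD `to_sd`.

    Both scales are assumed to share the same mean, so only the spread changes.
    """
    z = (score - mean) / from_sd  # how many standard deviations above average
    return mean + z * to_sd

# Katsioulis: 205 on an SD-16 scale becomes about 198.4 on an SD-15 scale
print(round(convert_iq(205, from_sd=16, to_sd=15), 1))  # prints 198.4
```

The same helper shows why scores quoted without their scale are hard to compare: a 130 on an SD-15 test corresponds to a 132 on an SD-16 test.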

PAST GENIUSES:

The individuals above are living. Let’s take a very quick look at several past geniuses. I’m sure you know the names.

- Johann Goethe—210-225

- Albert Einstein—205-225

- Leonardo da Vinci-180-220

- Isaac Newton-190-200

- James Maxwell-190-205

- Copernicus—160-200

- Gottfried Leibniz—182-205

- William Sidis—200-300

- Carl Gauss—250-300

- Voltaire—190-200

As you can see, these guys are heavy hitters. I strongly suspect there are many we have not mentioned: individuals who have achieved but never gotten the opportunity to, let’s just say, shine. OK, where does that leave the rest of us? There is GOOD news. Calvin Coolidge said it best:

“Nothing in this world can take the place of persistence. Talent will not; nothing is more common than unsuccessful men with talent. Genius will not; unrewarded genius is almost a proverb. Education will not; the world is full of educated derelicts. Persistence and determination alone are omnipotent.”

President Calvin Coolidge.

I think this says it all. As always, I welcome your comments.

JUNO SPACECRAFT

July 21, 2016

The following information was taken from the NASA website and Machine Design magazine.

BACKGROUND:

After an almost five-year journey to the solar system’s largest planet, NASA’s Juno spacecraft successfully entered Jupiter’s orbit during a thirty-five (35) minute engine burn. Confirmation the burn was successful was received on Earth at 8:53 p.m. PDT (11:53 p.m. EDT) Monday, July 4. A message from NASA is as follows:

“Independence Day always is something to celebrate, but today we can add to America’s birthday another reason to cheer — Juno is at Jupiter,” said NASA administrator Charlie Bolden. “And what is more American than a NASA mission going boldly where no spacecraft has gone before? With Juno, we will investigate the unknowns of Jupiter’s massive radiation belts to delve deep into not only the planet’s interior, but into how Jupiter was born and how our entire solar system evolved.”

Confirmation of a successful orbit insertion was received from Juno tracking data monitored at the navigation facility at NASA’s Jet Propulsion Laboratory (JPL) in Pasadena, California, as well as at the Lockheed Martin Juno operations center in Littleton, Colorado. The telemetry and tracking data were received by NASA’s Deep Space Network antennas in Goldstone, California, and Canberra, Australia.

“This is the one time I don’t mind being stuck in a windowless room on the night of the 4th of July,” said Scott Bolton, principal investigator of Juno from Southwest Research Institute in San Antonio. “The mission team did great. The spacecraft did great. We are looking great. It’s a great day.”

Preplanned events leading up to the orbital insertion engine burn included changing the spacecraft’s attitude to point the main engine in the desired direction and then increasing the spacecraft’s rotation rate from 2 to 5 revolutions per minute (RPM) to help stabilize it.

The burn of Juno’s 645-Newton Leros-1b main engine began on time at 8:18 p.m. PDT (11:18 p.m. EDT), decreasing the spacecraft’s velocity by 1,212 miles per hour (542 meters per second) and allowing Juno to be captured in orbit around Jupiter. Soon after the burn was completed, Juno turned so that the sun’s rays could once again reach the 18,698 individual solar cells that give Juno its energy.
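The figures in the last two paragraphs hang together, and a quick check makes that concrete: 542 meters per second converts to roughly 1,212 miles per hour, and a burn that starts at 8:18 p.m. PDT and lasts thirty-five minutes ends at 8:53 p.m. PDT, the time confirmation was received. Both times are when the signals reached Earth, not when events happened at Jupiter. A small Python sketch using only the standard library; the date is taken as July 4, 2016, per the text:

```python
from datetime import datetime, timedelta

METERS_PER_MILE = 1609.344  # exact, by definition of the international mile
SECONDS_PER_HOUR = 3600

def mps_to_mph(speed_mps: float) -> float:
    """Convert meters per second to miles per hour."""
    return speed_mps * SECONDS_PER_HOUR / METERS_PER_MILE

# Juno's velocity change during Jupiter orbit insertion, as quoted by NASA
delta_v_mph = mps_to_mph(542)

# Burn start (Earth-received time, PDT) plus the 35-minute burn duration
burn_start = datetime(2016, 7, 4, 20, 18)
burn_end = burn_start + timedelta(minutes=35)

print(f"{delta_v_mph:,.0f} mph")          # about 1,212 mph
print(burn_end.strftime("%I:%M %p PDT"))  # end of burn in PDT
```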

“The spacecraft worked perfectly, which is always nice when you’re driving a vehicle with 1.7 billion miles on the odometer,” said Rick Nybakken, Juno project manager from JPL. “Jupiter orbit insertion was a big step and the most challenging remaining in our mission plan, but there are others that have to occur before we can give the science team the mission they are looking for.”

Can you imagine a 1.7 billion (yes that’s with a “B”) mile journey AND the ability to monitor the process? This is truly an engineering feat that should make history. (Too bad our politicians are busy getting themselves elected and reelected.)

Over the next few months, Juno’s mission and science teams will perform final testing on the spacecraft’s subsystems, final calibration of science instruments and some science collection.

“Our official science collection phase begins in October, but we’ve figured out a way to collect data a lot earlier than that,” said Bolton. “Which when you’re talking about the single biggest planetary body in the solar system is a really good thing. There is a lot to see and do here.”

Juno’s principal goal is to understand the origin and evolution of Jupiter. With its suite of nine science instruments, Juno will investigate the existence of a solid planetary core, map Jupiter’s intense magnetic field, measure the amount of water and ammonia in the deep atmosphere, and observe the planet’s auroras. The mission also will let us take a giant step forward in our understanding of how giant planets form and the role these titans played in putting together the rest of the solar system. As our primary example of a giant planet, Jupiter also can provide critical knowledge for understanding the planetary systems being discovered around other stars.

The Juno spacecraft launched on Aug. 5, 2011 from Cape Canaveral Air Force Station in Florida. JPL manages the Juno mission for NASA. Juno is part of NASA’s New Frontiers Program, managed at NASA’s Marshall Space Flight Center in Huntsville, Alabama, for the agency’s Science Mission Directorate. Lockheed Martin Space Systems in Denver built the spacecraft. The California Institute of Technology in Pasadena manages JPL for NASA.

SYSTEMS:

Before we list the systems, let’s take a look at the physical “machine”.

As you can see, the design is truly remarkable and includes the following modules:

- SOLAR PANELS—Juno requires more than 18,000 solar cells to gather enough energy for its journey, 508 million miles from our sun. In January, Juno broke the record as the first solar-powered spacecraft to fly farther than 493 million miles from the sun.

- RADIATION VAULT—During its polar orbit, Juno will repeatedly pass through the intense radiation belt that surrounds Jupiter’s equator, charged by ions and particles from Jupiter’s atmosphere and moons and trapped in Jupiter’s colossal magnetic field. To protect its sensitive electronics, Juno houses them in a titanium vault. The radiation belt, which measures 1,000 times the human toxicity level, emits radio signals strong enough to be detected from Earth.

- GRAVITY SCIENCE EXPERIMENT—Using advanced gravity science tools, Juno will create a detailed map of Jupiter’s gravitational field to infer Jupiter’s mass distribution and internal structure.

- VECTOR MAGNETOMETER (MAG)—Juno will also map Jupiter’s massive magnetic field, which extends approximately two (2) million miles toward the sun and shields Jupiter from the solar wind. On the side facing away from the sun, the field tails out for more than six hundred (600) million miles. Jupiter’s magnetic dynamo is more than 20,000 times stronger than Earth’s.

- MICROWAVE RADIOMETERS–Microwave radiometers (MWR) will detect six (6) microwave and radio frequencies emitted as thermal radiation by Jupiter’s atmosphere. This will help determine the depths of various cloud layers.

- DETAILED MAPPING OF AURORA BOREALIS AND PLASMA CONTENT—As Juno passes over Jupiter’s poles, cameras will capture high-resolution images of the auroras, and particle detectors will analyze the plasmas responsible for them. Jupiter’s auroras are not only much larger than Earth’s but also much more frequent, because they are driven by plasma within Jupiter’s own environment rather than by solar activity.

- JEDI MEASURES HIGH-ENERGY PARTICLES–Three Jupiter Energetic-particle Detector Instruments (JEDIs) will measure the angular distribution of high-energy particles as they interact with Jupiter’s upper atmosphere and inner magnetosphere, where they contribute to Jupiter’s northern and southern lights.

- JADE MEASURES LOW-ENERGY PARTICLES—JADE, the Jovian Auroral Distributions Experiment, works in conjunction with JEDI to measure the angular distribution of lower-energy electrons and ions ranging from zero (0) to thirty (30) electron volts.

- WAVES MEASURES PLASMA MOVEMENT—The radio/plasma wave experiment, called WAVES, will be used to measure radio frequencies (50 Hz to 40 MHz) generated by the plasma in Jupiter’s magnetosphere.

- UVS, JIRAM CAPTURE NORTHERN/SOUTHERN LIGHTS—By capturing wavelengths of seventy (70) to two hundred and five (205) nm, an ultraviolet imager/spectrometer (UVS) will generate images of the auroras’ UV spectrum to view the auroras during the Jovian day, while the Jovian Infrared Auroral Mapper (JIRAM) will study them in infrared light.

- HIGH-RESOLUTION CAMERA—JunoCam, a high-resolution color camera, will capture photos of Jupiter’s atmosphere and aurora in red, green and blue wavelengths. The NASA team expects the camera to last about seven orbits before being destroyed by radiation.

CONCLUSION:

This technology is truly amazing to me. Think of the planning, the engineering design, the testing, the computer programming needed to bring this program to fruition. Amazing!

JAMES WEBB TELESCOPE

February 4, 2022

Several years ago, our blender bit the dust. It served us well over the years, but as with all electromechanical things, its time was up. I won’t mention the retail outlet we visited for a new one; that’s not the point. Brought the new medium-priced blender home, read all of the instructions, plugged it in and nothing. The motor would not start. Back to the retailer; another blender; back home; nothing. Back to the retailer for a third machine. This time with success. (Please note the blender is still working.)

What if you had a remarkably difficult engineering project which had to be absolutely, dead-on perfect the first time? No margin for error. No do-overs. No ability to bring about a “fix”. That’s exactly what we have with the James Webb Space Telescope (JWST). Webb, the most powerful telescope ever built, has reached its final destination in space. Now comes the fun part.

Thirty days after its launch, the tennis court-size telescope made its way to a parking spot about a million miles from Earth. From there, it will begin its ambitious mission to better understand the early days of our universe, peer at distant exoplanets and their atmospheres, and help answer large-scale questions such as how quickly the universe is expanding. Having departed on December 25, 2021, Webb had completed over ninety-six percent (96%) of its journey as of 22 January 2022, and controllers were ready for the final maneuver placing it in orbit around the second Lagrange point (L2), about one point five (1.5) million kilometers from Earth.

Controllers expect to spend the next three months adjusting the infrared mirror segments and testing Webb’s instruments, added Bill Ochs, Webb project manager at NASA’s Goddard Space Flight Center. JWST, as the telescope is called, is more sophisticated than the Hubble Space Telescope and will be capturing pictures of the very first stars created in the universe. Scientists say it will also study the atmospheres of planets orbiting stars outside our own solar system to see if they might be habitable, or even inhabited. “The very first stars and galaxies formed are hurtling away from Earth so fast that the light is shifted from visible wavelengths into the infrared. So the Hubble telescope couldn’t see that light, but JWST can,” NPR’s Joe Palca explained.

In its final form, the telescope is about three stories tall, with a mirror twenty-one (21) feet across, much too big to fly into space fully assembled. Instead, it was folded into a rocket and painstakingly unfurled by teams sending commands from Earth. Though the monthlong process was nerve-wracking, it appeared to have been completed flawlessly. I repeat: flawlessly. The digital pictures below will give you some idea of the overall package and its assembled components.
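Palca’s point about light being shifted into the infrared is cosmological redshift: light emitted at wavelength λ arrives stretched to (1 + z) × λ, where z is the source’s redshift. The short Python sketch below assumes a purely illustrative redshift of z = 10 (a hypothetical value, not a specific Webb target) and uses the red hydrogen-alpha line, emitted at about 656.3 nm:

```python
def observed_wavelength_nm(emitted_nm: float, z: float) -> float:
    """Wavelength seen today for light emitted at `emitted_nm` by a source at redshift z."""
    return (1.0 + z) * emitted_nm

H_ALPHA_NM = 656.3  # visible red light from hydrogen, as emitted
Z_EXAMPLE = 10.0    # hypothetical redshift for a very distant, early galaxy

observed = observed_wavelength_nm(H_ALPHA_NM, Z_EXAMPLE)
print(f"{observed:.0f} nm = {observed / 1000:.1f} micrometers")
```

Visible light spans only about 400 to 700 nm, so a red line stretched to roughly 7,200 nm (7.2 micrometers) lands deep in the infrared, the kind of light Webb was built to collect.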

If you look at the Human (to scale) portion of the picture at the upper left, you can see just how large the mirrors are. Imagine folding all of these components into one package that unfolds along the way and is completely deployed by the time it reaches its destination. A truly amazing engineering feat. The JPEG below will give a better picture of how big the mirror is.

Please note the sunshade. This component is critical to the design: it keeps sunlight from significantly heating the primary mirror, which must stay extremely cold for an infrared telescope to work.

As mentioned earlier, L2, the final destination, is approximately one-point five (1.5) million kilometers (about 930,000 miles) from Earth.
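Where does the 1.5 million kilometer figure come from? A standard textbook approximation places L2 at a distance r ≈ R × (m / 3M)^(1/3) beyond the smaller body, where R is the Earth-Sun distance and m/M is the Earth-to-Sun mass ratio. The Python sketch below plugs in rounded values (an assumption: it ignores the Moon and Earth’s slightly elliptical orbit) and converts the result to miles; the function name is illustrative:

```python
AU_KM = 1.496e8          # mean Earth-Sun distance, kilometers
EARTH_MASS_KG = 5.972e24
SUN_MASS_KG = 1.989e30
KM_PER_MILE = 1.609344   # exact, by definition of the international mile

def l2_distance_km(separation_km: float, small_mass: float, large_mass: float) -> float:
    """Approximate distance of L2 beyond the smaller body: r = R * (m / (3M))**(1/3)."""
    return separation_km * (small_mass / (3.0 * large_mass)) ** (1.0 / 3.0)

r_km = l2_distance_km(AU_KM, EARTH_MASS_KG, SUN_MASS_KG)
print(f"L2 is about {r_km:,.0f} km, or {r_km / KM_PER_MILE:,.0f} miles, from Earth")
```

The result comes out just under 1.5 million kilometers, a little over 900,000 miles, which is why “about a million miles” is the usual shorthand.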

The cost of the total project is approximately ten billion US dollars. In my opinion, the cost is well worth it, because the results may take us back close to the time of the “big bang”. Scientists will be examining the results for years to come, and what they may learn is tremendously exciting.

As always, I welcome your comments.
