GLOBAL IT SALARY SURVEY

November 11, 2020

Several months ago, before the COVID-19 virus hit, I was talking to a young man interested in the STEM (Science, Technology, Engineering, Mathematics) professions. He was a graduating high school senior from Nashville and wondered what the future of technology as a profession might be. He asked me about IT and what I thought that line of work might provide in the way of challenge, salary, stability, etc.; in other words, all of the qualities we look for in a job. At that time, I did not have the salary information you are about to see. My advice to him was to go for the challenge and the money would follow. As it turns out, the bucks are there also. I’m going to make this a two-part post because the 2020 IT Skills and Salary Survey is very detailed. The first part covers salary. The second part discusses job satisfaction, certifications, and rate of advancement. Let’s take a look.

The 2020 IT Skills and Salary Survey was conducted online from September 2019 through November 2019 using the Qualtrics XM Platform, which was also used to tabulate the results. Global Knowledge and technology companies distributed survey invitations to millions of professionals around the world, primarily to contacts in their own databases. The survey was also made available through web articles, online newsletters and social media. It yielded 9,505 completed responses.

An individual’s salary is determined by a combination of factors, including education, responsibility level, job role, certification, tenure, industry, company size and location. In the 2020 IT Skills and Salary Survey, respondents were asked about each of these factors. Because respondents came from all over the globe, the results reflect a worldwide picture. Participants were required to convert their salaries into U.S. dollars while taking the survey so that salaries could be compared across countries.

BASE SALARY:

The average annual salary for global IT professionals is $85,115.

RAISES AND BONUSES:

Nearly sixty percent (60%) of IT professionals received a raise in the past year. Raise percentages were consistent across all regions, at four to six percent. The only outlier is decision-makers in Latin America, whose salaries, on average, dropped two percent year over year. Thirty-seven percent (37%) of IT professionals who received a raise attribute it to job performance, while nearly thirty percent (30%) received a pay bump as part of a standard company increase. Fifteen percent (15%) saw their salary increase as part of a promotion. The reason for a raise affects the amount of the raise. Twelve percent (12%) of individuals who received a raise attribute it to new skills, and those same respondents earned nearly $12,000 more this year. IT professionals who obtained a new certification saw their salary increase by nearly $13,000. Both of these numbers are strong indications that training pays off. Certifications are very important in the IT industry and form the basis for promotions.

The reasons for salary increases are fairly standard and, as you would expect, job performance tops the list.

RECEIVED A BONUS:

Worldwide, sixty-six percent (66%) of eligible decision-makers and fifty-five percent (55%) of staff earned a bonus this year—both are up noticeably from 2019. In North America, seventy percent (70%) of decision-makers and fifty-seven percent (57%) of staff received bonuses. EMEA had the lowest numbers in this category, as only half of the eligible staff received a bonus.

SALARY BY RESPONSIBILITY LEVEL:

Even though the more senior IT personnel receive the largest salaries, take a look at the average non-management salary for North America: a whopping $105,736. That number is lower in other regions of the globe but is still substantial.

CAREER EXPERIENCE:

Unsurprisingly, more tenured IT professionals have the highest salaries. Those with twenty-six (26) or more years of experience earn $120,098 a year, which is more than double the average salary for first-year IT professionals. In North America, IT professionals cross the $100,000 threshold in years eleven to fifteen (11 to 15), and the most tenured earn almost $140,000 a year. Among the survey respondents, the largest group has between six and fifteen (6 to 15) years of experience. Only one percent (1%) are in their first year, while thirteen percent (13%) have worked in tech for over a quarter of a century.

JOB FUNCTION:

SALARIES BY INDUSTRY:

U.S. SALARIES BY STATE:

IT professionals in the U.S. have an average annual salary of $120,491, and U.S. decision-makers earn $138,200 a year. While tenure, job function and industry affect pay, geography is also a major salary influencer. The cost of living in the Mid-Atlantic or New England, for example, is higher than in the Midwest. Washington, D.C. has the highest IT salaries in the country at $151,896, a nineteen percent (19%) increase from 2019. New Jersey, California, Maryland and New York round out the top five. (California had the highest U.S. salaries in 2019.) South Dakota has the lowest average salary ($61,867) this year.

CANADIAN SALARIES:

The average annual salary in Canada is $77,580. IT professionals in British Columbia have the highest average salaries in the country at $85,801. The other top-paying Canadian provinces by salary are Quebec ($81,573), Ontario ($78,887), Alberta ($78,040) and Saskatchewan ($70,811). Provinces with fewer than 10 respondents were omitted from the list.

EUROPEAN SALARIES:

For the second straight year, Switzerland dominates European salaries with an annual average of $133,344, compared with an average annual IT salary across Europe of $71,796. Germany has the second-highest salary at $88,195. Rounding out the top five are Ireland ($87,154), Belgium ($85,899) and the United Kingdom ($82,792). European countries with fewer than 30 respondents were omitted from the list.

CONCLUSIONS:

The next post, coming tomorrow, will cover certifications and job satisfaction.

AMUSING OURSELVES TO DEATH

October 18, 2020

Amusing Ourselves to Death is the title of an incredibly good book by Mr. Neil Postman. The book is a marvelous look at the differences between the futures forecast by Orwell and Huxley in the first half of the 20th century. Now, you may think this book and its theme will be uninteresting and a bit far-fetched, but it actually describes our social condition right now.

I’m going to do something a little different with this post. I’m going to present, in bullet form, lines of text and passages from the book so you will get a flavor of what Mr. Postman is trying to say. Keep in mind, these passages are from the book and do not necessarily represent my opinions, although they come very close, and in some cases they are right on. (Please see the quotes about our presidential elections.) Here we go.

- What Orwell feared were those who would ban books. What Huxley feared was that there would be no one who wanted to read one.

- The news of the day is a figment of our technological imagination. It is, quite precisely, a media event. We tend to watch fragments of events from all over the world because we have multiple media whose forms are well suited to fragmented conversations. Without a medium to create its form, the news of the day does not exist.

- Beginning in the fourteenth century, the clock made us into time-keepers, and then time-savers, and now time-servers. With the invention of the clock, eternity ceased to serve as the measure and focus of human events.

- A great media shift has taken place in America, with the result that the content of much of our public discourse has become dangerous nonsense. Under the governance of the printing press, discourse in America was generally coherent, serious and rational; under the governance of television, it has become shrivelled and absurd. Even the best things on television are its junk, and no one and nothing seems to be seriously threatened by it.

- Since intelligence is primarily defined as one’s capacity to grasp the truth of things, it follows that what a culture means by intelligence is derived from the character of its important forms of communication.

- Intelligence implies that one can dwell comfortably without pictures, in a field of concepts and generalizations.

- Epistemology is defined as the theory of knowledge, especially with regard to its methods, validity, and scope; it is the investigation of what distinguishes justified belief from opinion. Given that definition, the epistemology created by television not only is inferior to a print-based epistemology but is dangerous and absurdist.

- In the “colonies”, literacy rates were notoriously difficult to assess, but there is sufficient evidence (mostly drawn from signatures) that between 1640 and 1700, the literacy rate for men in Massachusetts and Connecticut was somewhere between eighty-nine percent (89%) and ninety-five percent (95%). The literacy rate for women in those colonies is estimated to have run as high as sixty-two percent (62%). The Bible was the central reading matter in all households, for these people were primarily Protestants who shared Martin Luther’s belief that printing was God’s highest and most extreme act of Grace.

- The writers of our Constitution assumed that participation in public life required the capacity to negotiate the printed word. Mature citizenship was not conceivable without sophisticated literacy, which is why the voting age in most states was set at twenty-one and why Jefferson saw in universal education America’s best hope.

- Towards the end of the nineteenth century, the Age of Exposition began to pass, and the early signs of its replacement could be discerned. Its replacement was to be the Age of Show Business.

- The Age of Show Business was facilitated by the advent of photography. The name photography was given by the famous astronomer Sir John F. W. Herschel. It is an odd name, since it literally means “writing with light”.

- Conversations provided by television promote incoherence and triviality. The phrase “serious television” is a contradiction in terms, and television speaks in only one persistent voice: the voice of entertainment.

- Television has found a significant free-market audience. One result has been that American television programs are in demand all over the world. U.S. television exports are estimated at approximately one hundred thousand (100,000) to two hundred thousand (200,000) hours, divided equally among Latin America, Asia and Europe. All of this has occurred simultaneously with the decline of America’s moral and political prestige worldwide.

- Politicians in today’s world are less concerned with giving arguments than with giving off impressions, which is what television does best. Post-debate commentary largely avoids any evaluation of the candidates’ ideas, since there were none to evaluate. (Does this sound familiar?)

- The results of too much television—Americans are the best entertained and quite likely the least-informed people in the Western world.

- The New York Times and The Washington Post are not Pravda; the Associated Press is not Tass. There is no Newspeak here. Lies have not been defined as truth, nor truth as lies. All that has happened is that the public has adjusted to incoherence and been amused into indifference.

- In the world of television, Big Brother turns out to be Howdy Doody.

- We delude ourselves into believing that most everything a teacher normally does can be replicated with greater efficiency by a micro-computer.

- Most believe that Christianity is a demanding and serious religion. When it is delivered as easy and amusing, it is another kind of religion altogether. It has been estimated that the total revenue of the electric church exceeds five hundred million U.S. dollars ($500 million).

- Although the selling of an American president is an astonishing and degrading thing, it is only part of a larger point: in America, the fundamental metaphor for political discourse is the television commercial. We are not permitted to know who is the best President, or Governor, or Senator, but whose image is best in touching and soothing the deep reaches of our discontent. “Mirror, mirror on the wall, who is the fairest of them all?”

- A perplexed learner is a learner who will turn to another station.

- Television viewing does not significantly increase learning, and it is inferior to, and less likely than, print to cultivate higher-order, inferential thinking.

CONCLUSION:

You may agree with some of this, none of this or all of this, but it is Mr. Postman’s opinion after years of research. He wrote later books dealing with technology and the effect it has on our population at large, but I wanted to read this book first to get a feel for his beliefs.

A LITTLE MORE INDEPENDENCE

October 7, 2020

Are you married to your digital equipment: e-mail, cell phones, YouTube, Instagram, Facebook, Twitter, etc.? OK, you cannot stand to put your cellphone down during dinner or leave it alone when going to sleep. Right? Many can say yes, and many wish this were not true. In her book “Trampled by Unicorns”, Maelle Gavet gives us a plan of attack for breaking the habit, or at least not being addicted to it. She not only discusses the addiction but is also very much aware of how these digital giants encroach on our every “click” and know our habits and favorite sites. She indicates we are recorded every second we are online. Let’s take a look at her suggestions for taking action:

- Make DuckDuckGo our default search engine rather than Google. As you know, Google tracks our every move and saves that data for future use. DuckDuckGo does not. There may be others that do not track, so investigate.

- Swap out Gmail account(s) for privacy-preserving alternatives like ProtonMail, Tutanota, Runbox or Posteo.

- Explore Fairbnb.coop, Innclusive, Homestay, or Vacasa as alternatives to Airbnb and HomeAway (the latter owned by Expedia).

- Buy new books from a local independent bookstore and not online. Buying online is a great convenience, and during this COVID-19 pandemic it is probably the best way to go, but Amazon, Books-A-Million and Barnes & Noble all keep track of what we purchase, as well as the search engine we use to find them.

- Call restaurants directly when we want to make a reservation and/or do curbside pickup. Don’t go online through Grubhub or another online service. They track your requests.

- Shop with local retail outlets as opposed to shopping online. Sorry, Amazon. Once again, this is really tough during this pandemic but desirable when possible.

- Look for ethical online shopping centers such as Green America or Ethical Consumer.

- Alternate the use of Uber or Lyft with the many local ride-sharing options.

- Use alternative messaging apps like Signal, Wire, or Wickr.

- When social distancing rules are relaxed, shop at local brick and mortar stores.

- Escape the tech upgrade cycle by maintaining and repairing hardware as much as possible. You probably do not have to buy the latest and greatest electronic device offered. That new iPhone can wait.

- Declutter mailboxes and empty junk e-mail folders to reduce energy consumed.

- Delete or deactivate old e-mail accounts, social network accounts, apps, and shopping accounts. If you don’t use them, lose them.

- Don’t shop on Black Friday.

- Promote ethical and responsible digital citizenship with children.

- Actively limit data collection.

- Support human-centric technology.

- Fight fake news and unethical digital behaviors.

- Pressure big tech into more empathetic behavior.

- Lobby for more and better legislation.

I personally would like to add several others as follows:

- As best you can, monitor what your children access online and prohibit, i.e. block, questionable sites.

- Demand time without digital equipment. Breakfast, lunch and certainly dinner should be family time and not time spent looking at e-mail.

- I think Twitter is complete garbage. It’s equivalent to writing on a bathroom wall at a third-rate truck stop on Highway 66 between Chicago and Los Angeles. I’m really embarrassed that our President uses Twitter for much of his communication. Embarrassing!

- Be very careful about information found on Wikipedia. I have found some of it to be very unreliable and downright incorrect.

- Use multiple sites when accessing the news. News outlets tell us what they want us to know, and not everything in their broadcasts is factual. Mix it up.

- Have a specific limit for yourself and certainly your children relative to time spent on digital equipment. (Tough one here.)

We all can cut back and probably should. Does anyone read anymore? Give it a try.

CAUTIOUS OPTIMISM

September 29, 2020

NOTE: Data from the September 2020 issue of “Microwaves & RF” is used for this post.

Already, it’s been a very tough year. Even though a complete post-COVID-19 recovery is expected, most design engineering groups and manufacturing firms feel “life after COVID” will be greatly different. The following data represents key findings from a new study by the Design Engineering group of Endeavor Business Media. This group includes the publications Electronic Design, Electronic Sourcebook, Evaluation Engineering, Hydraulics & Pneumatics, Machine Design, Microwaves & RF, and Source Today. The questions asked, and the answers given, were as follows:

- How optimistic do you feel about the next six (6) months as businesses start to reopen and recover from the COVID-caused recession?
    - Optimistic: 21.00%
    - Somewhat Optimistic: 50.80%
    - Not Very Optimistic: 27.80%

- Since COVID-19-related guidelines, including “stay-at-home” mandates, have gone into effect, how has your level of business activity changed?
    - Increased: 15.40%
    - The Same: 27.30%
    - Slowed, yet picking up: 22.40%
    - Decreased: 35.00%

- How has COVID-19 affected your current working status?
    - Normal: 30.60%
    - Already at Home: 8.20%
    - Part-time at home because of COVID: 54.20%
    - Out of work: 7.10%

- What changes do you anticipate for your company as a result of COVID-19? (Respondents could choose more than one answer.)
    - New COVID-related products/services: 19.70%
    - Social distancing in production: 51.90%
    - Re-evaluating supply chain: 36.00%
    - Virtual customer service model: 19.10%
    - Increased sanitation at work: 61.70%
    - Work at home policy: 38.20%
    - Investment in automation or IoT technology: 15.30%

- Which of the following cost reductions is your company making in 2020? (Respondents could choose more than one answer.)
    - No new equipment purchases: 41.50%
    - Reducing parts and maintenance: 27.90%
    - Suspend contract services: 25.70%
    - Staff reductions: 31.10%
    - Budget cuts: 43.70%
    - Marketing budget cuts: 27.90%
    - Reducing prices: 7.60%

- Would you attend a virtual event or digital programming (e.g., webinar, conference, meeting) in lieu of traveling?
    - YES: 88.30%
    - NO: 11.70%

One huge area of change for the near future is the way engineering and manufacturing firms seek out and obtain information. While sixty-two point eight percent (62.80%) said they have no travel plans for the next six (6) months and just eight point nine percent (8.90%) are back to their pre-COVID travel schedule, eighty-eight point three percent (88.30%) of the respondents indicated they would attend digital meetings instead of traveling. I do not think this will change over the next few years, and it may not change at all within this decade. Obviously, the individuals and companies that organize seminars, product shows and meetings centered on dispensing product and service information will be greatly affected by stay-at-home thinking. Airlines, hotels and car-rental companies will be directly affected as well. Great changes are in process for our country and the world at large.

LAUGHTER

September 16, 2020

Let’s look at 2020 so far. (Don’t get discouraged and start drinking.)

- North American Wildfire Season: We all have to admit, this has been a disastrous year for fires in the western United States. Really tough.

- 2020 Atlantic Hurricane Season. The 2020 Atlantic hurricane season is an ongoing tropical cyclone season. So far, it has featured a total of 21 tropical cyclones, 20 tropical storms, seven hurricanes, and one major hurricane.

- Rohingya Refugee Crisis. The 2015 Rohingya refugee crisis refers to the forcible displacement of Hindu and Muslim Myanmar nationals from Rakhine State (formerly Arakan) in Myanmar to Bangladesh, Malaysia, Indonesia, Cambodia, Laos and Thailand in 2015; they were collectively dubbed “boat people” by international media. I have included this crisis because it is still happening.

- Sudan Flooding

- Venezuelan Humanitarian Crisis

- Southern Border Humanitarian Crisis

- COVID-19 Pandemic. (We all know about this one.)

- Civil Unrest in the United States

- Yemen Humanitarian Crisis

- Midland, Michigan Dam Breaches

- Puerto Rico Earthquakes

- 2020 Spring Tornadoes

- Amazon Wildfires

- Tropical Storm Imelda

- 2019-2020 Australian Brushfires

- Tropical Cyclone Idai

- Shooting of Police Officers in the United States

- Drive-by Shootings in Chicago, Baltimore, etc.

Okay, enough is enough. So, you are sitting there and saying, “Why is there anything to laugh about? Just why?” Well, there is a definite reason we MUST laugh, even if it’s at ourselves.

Epigenetic research and modern medicine support the claim that laughter is often the best medicine. Research has shown that individuals with a deeper sense of happiness possess lower levels of inflammatory gene responses and higher levels of antiviral gene responses. Happy individuals typically possess healthier blood fat profiles, lower blood pressure, and a stronger immune system. The Tutu Project helps individuals cope with life-threatening diseases and funds research through awareness campaigns built upon laughter. Other benefits are as follows:

- GOOD MOOD THROUGH THE DAY: Laughter can change your mood within minutes by releasing endorphins from your brain cells. This makes you feel good, and when you are in a good mood you tend to do things well. It can keep you cheerful throughout the day.

- STRONGER IMMUNE SYSTEM: Laughter reduces stress and strengthens the immune system. If your immune system is strong, you are less likely to catch an infection.

- IMPROVES BRAIN FUNCTION: Our brain needs twenty-five percent (25%) more oxygen for optimal functioning. Laughter exercises can increase the net supply of oxygen to our body and brain, which helps to improve efficiency and performance. You may feel more energetic and able to work longer than you normally do without getting tired.

- HIGHER EMOTIONAL INTELLIGENCE FOR SUCCESS IN LIFE: By one popular estimate, success in life depends eighty-five percent (85%) on Emotional Intelligence (EQ) and only fifteen percent (15%) on Intelligence Quotient (IQ). Research shows that laughter increases Emotional Intelligence.

- DEVELOPS SELF-CONFIDENCE: Laughter reduces inhibitions and shyness leading to more self-confidence in public speaking and other stage performances.

- REDUCES STRESS HORMONE LEVELS: By reducing the level of stress hormones, you’re simultaneously cutting the anxiety and stress that impact your body. Additionally, the reduction of stress hormones may result in higher immune system performance. Just think: laughing along as a co-worker tells a funny joke can relieve some of the day’s stress and help you reap the health benefits of laughter.

- WORKS YOUR ABS: One of the benefits of laughter is that it can help you tone your abs. When you are laughing, the muscles in your stomach expand and contract, similar to when you intentionally exercise your abs. Meanwhile, the muscles you are not using to laugh are getting an opportunity to relax. Add laughter to your ab routine and make getting a toned tummy more enjoyable.

- IMPROVES CARDIAC HEALTH: Laughter is a great cardio workout, especially for those who are incapable of doing other physical activity due to injury or illness. It gets your heart pumping and burns a similar number of calories per hour as walking at a slow to moderate pace. So, laugh your heart into health.

- BOOSTS T-CELLS: T-cells are specialized immune system cells just waiting in your body for activation. When you laugh, you activate T-cells that immediately begin to help you fight off sickness. Next time you feel a cold coming on, add chuckling to your illness prevention plan.

- TRIGGERS THE RELEASE OF ENDORPHINS: Endorphins are the body’s natural painkillers. By laughing, you can release endorphins, which can help ease chronic pain and make you feel good all over.

- PRODUCES A GENERAL SENSE OF WELL-BEING: Laughter can increase your overall sense of well-being. Doctors have found that people who have a positive outlook on life tend to fight diseases better than people who tend to be more negative. So, smile, laugh, and live longer!

CONCLUSIONS: It is tough to laugh right now at the “human condition” and situations in the world, but laughter is definitely the very best medicine. Better to laugh and “soldier on” than to cry and do nothing. We must become part of the answer instead of part of the problem.

CHURCHILL

September 1, 2020

I just finished reading the most intimidating book I have ever read: “CHURCHILL, Walking With Destiny” by Andrew Roberts. When I say intimidating, I mean the book is eleven hundred and five (1,105) pages in length, with two hundred and twenty-two (222) pages of references and notes supporting the text. It took me several weeks to read and gave remarkable detail as to the character and activities of one of the most fascinating figures in history. Quite frankly, he was a man destined to fulfill a critical role in history and a man who changed history for the betterment of his people and the Crown.

I’m quoting from page nine hundred and eighty-one (981): “He despised school, never attended university or worked in trade or the Civil Service or the colonies, served in six regiments (so never became slavishly attached to any of them), was blackballed from one club and forced to resign from another, left both the Conservative and Liberal parties and was not in any meaningful sense a Christian. Despite being the son of a chancellor of the Exchequer and the grandson of a duke, he was a contrarian and an outsider.”

He won the Nobel Prize for Literature, was made a Knight of the Garter by Queen Elizabeth II, wrote thirty-seven books and thousands of individual papers, delivered thousands of speeches, served in two wars, and dealt with figures such as Eisenhower, King George, Gandhi, Stalin, Hitler, Mussolini, Neville Chamberlain, Charles de Gaulle, and Onassis, just to name a few. He rejected the Order of the Garter three times before accepting the honor. The Most Noble Order of the Garter is an order of chivalry founded by King Edward III of England in 1348. It is the most senior order of knighthood in the British honors system, outranked in precedence only by the Victoria Cross and the George Cross.

BIOGRAPHY of ANDREW ROBERTS:

“Churchill” is a scholarly account, written by a scholar.

In his own words: “Churchill: Walking with Destiny, is being published in October in the UK and November in the USA. Since the last major biography of him, forty-one sets of papers have been deposited at the Churchill Archives in Cambridge, and Her Majesty The Queen has graciously allowed me to be the first Churchill biographer to read her late father King George VI’s diary records of Churchill’s weekly audiences with him during the Second World War. I discovered the verbatim reports of the War Cabinet meetings over which he presided, which had never been used by any historian before, and I have worked on Churchill’s children’s papers by kind permission of his family, some of which are closed to other researchers. So, there is a lot more to say about Churchill.”

Professor Andrew Roberts, who was born in 1963, took a first-class honors degree in Modern History at Gonville & Caius College, Cambridge, where he is an honorary senior scholar and from which he holds a Doctor of Philosophy (PhD). He is presently a Visiting Professor at the War Studies Department at King’s College, London and the Lehrman Institute Lecturer at the New-York Historical Society. He has written or edited nineteen books, which have been translated into 23 languages, and appears regularly on radio and television around the world. Based in London, he is an accomplished public speaker and has delivered the White House Lecture, as well as speaking at Oxford, Cambridge, Yale, Princeton and Stanford Universities, and at The British Academy, the Foreign and Commonwealth Office, Sandhurst, Shrivenham and the US Army War College in Carlisle, Pennsylvania. Professor Roberts has two children: Henry, born in 1997, and Cassia, born in 1999. He lives in London with his wife, Susan Gilchrist, who is the Chief of Global Clients of the corporate communications firm Brunswick Group and the Chair of the Southbank Centre.

REVIEWS:

I always like to see what others think about the book I have just completed. Here we go:

CONCLUSION:

If you love to read history, you will love “Churchill”, but you will need time to read it. This COVID-19 period just might be the time to do just that. I can definitely recommend it to you.

INFLUENCERS

June 6, 2020

Some of the most remarkably written articles are found in the publication “Building Design + Construction”. This monthly magazine covers architecture, engineering and construction (AEC) and describes building projects and designs around the world. Many projects underway are reconstructions and/or refurbishments of existing structures such as schools, churches and office buildings. The point I’m trying to make is that the writing is superb, innovative and certainly relevant. The April edition featured INFLUENCERS.

If you investigate websites, you will find an ever-increasing number of articles related to influencer marketing. Influencer marketing is becoming, or I should say has become, a significant factor in a person choosing one product over another. One of our granddaughters is an influencer, and her job is fascinating. Let’s look.

DEFINITION:

An influencer is someone who has:

- the power to affect the purchasing decisions of others because of his or her authority, knowledge, position, or relationship with his or her audience.

- a following in a distinct niche, with whom he or she actively engages. The size of the following depends on the size of the niche.

CLASSIFICATIONS:

Influencers can be classified in various ways, depending upon circumstances. Classifications by number of followers are given below.

Mega-Influencers—Mega-influencers are the people with a vast number of followers on their social networks. Facebook, Instagram, Twitter, Snapchat, YouTube, etc. are the platforms upon which influencers ply their trade. Although there are no fixed rules on the boundaries between the different types of influencers, a common view is that mega-influencers have more than one million followers on at least one social platform. President Donald Trump, Kim Kardashian, Hillary Clinton and, of course, several others may be classified as mega-influencers.

Macro-Influencers—Macro-influencers are one step down from mega-influencers and may be more accessible to influencer marketers. People with between 40,000 and one million followers on a social network are generally considered macro-influencers.

This group tends to consist of two types of people: either B-grade celebrities who haven’t yet made it to the big time, or successful online experts who have built up more significant followings than typical micro-influencers. The latter type of macro-influencer is likely to be more useful for firms engaging in influencer marketing.

Micro-Influencers— Micro-influencers are ordinary everyday people who have become known for their knowledge about some specialist niche. As such, they have usually gained a sizable social media following amongst devotees of that niche. Of course, it is not just the number of followers that indicates a level of influence; it is the relationship and interaction that a micro-influencer has with his or her followers.

Nano-Influencers—The newest influencer-type to gain recognition is the nano-influencer. These people only have a small number of followers, but they tend to be experts in an obscure or highly specialized field. You can think of nano-influencers as being the proverbial big fish in a small pond. In many cases, they have fewer than one thousand (1,000) followers – but they will be keen and interested followers, willing to engage with the nano-influencer, and listen to his/her opinions.
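The follower-count tiers described above can be written as a simple rule. Below is a minimal Python sketch, not an industry standard. The function name `influencer_tier` is hypothetical. The text gives no explicit range for micro-influencers, so the sketch assumes they fill the gap between the nano range (under 1,000) and the macro range (40,000 to one million).

```python
# Sketch of the follower-count tiers described in the text. The thresholds
# are rules of thumb, not fixed standards. ASSUMPTION: the micro tier covers
# 1,000 up to 40,000 followers, the gap between the stated nano and macro ranges.

def influencer_tier(followers: int) -> str:
    """Classify an influencer by follower count on his or her largest platform."""
    if followers < 0:
        raise ValueError("follower count cannot be negative")
    if followers > 1_000_000:   # mega: more than one million
        return "mega"
    if followers >= 40_000:     # macro: 40,000 to one million
        return "macro"
    if followers >= 1_000:      # micro: assumed 1,000 to 40,000
        return "micro"
    return "nano"               # nano: fewer than 1,000


if __name__ == "__main__":
    for count in (600, 25_000, 600_000, 2_500_000):
        print(f"{count:>9,} followers -> {influencer_tier(count)}")
```

Follower count is only a starting point. As the micro-influencer description notes, engagement with followers matters as much as raw numbers.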

If we look further, we can “drill down” to the various platforms and content formats influencers use.

Bloggers— Bloggers and influencers in social media have the most authentic and active relationships with their fans. Brands are now recognizing and encouraging this. Blogging has been connected to influencer marketing for some time now. There are many highly influential blogs on the internet. If a popular blogger positively mentions your product in a post, it can prompt the blogger’s readers to try that product.

YouTubers—Rather than each video maker having their own site, most create a channel on YouTube. Brands often align with popular YouTube content creators.

Podcasts— Podcasting is a relatively recent form of online content that is rapidly growing in popularity. It has already turned quite a few hosts into household names, possibly best epitomized by John Lee Dumas of Entrepreneurs on Fire. If you have not yet had the opportunity to enjoy podcasts, Digital Trends has put together a comprehensive list of the best podcasts of 2019. Our youngest son has a podcast called CalmCash. He does a great job and is remarkably creative.

Social Posts Only— The vast majority of influencers now make their name on social media. While you will find influencers on all leading social channels, the standout network in recent years has been Instagram, where many influencers craft their posts around various stunning images.

Now, if we go back to “Building Design + Construction”, the magazine interviewed five influencers who apply their skills to the AEC (architecture, engineering, and construction) profession. Through their comments, I will give you the thrust of their efforts:

CHRISTINE WILLIAMSON— “My goal is to help teach architects about building science and construction. I want to show how the “AEC” parts fit together.”

BOB BORSON—He is the cohost of the Life of an Architect podcast, which gets about two hundred and sixty (260) downloads per day. By audience size he would be a nano-influencer, though he rejects the label: “‘Influencer’ is a ridiculous word. If you have to tell people you’re an influencer, you’re not.” Those are his words, not mine.

AMY BAKER—She launched her Instagram account in 2018 and is the host of SpecFunFacts. She discusses specifications and contracts and has around one thousand (1,000) followers.

CATHERINE MENG– Ms. Meng is the host of the Design Voice podcast.

MATT RISINGER—Mr. Risinger hosts “Buildshownetwork”. He first published Matt Risinger’s Green Building blog in 2006 as a way to publicize his new homebuilding company in Austin, Texas. To date, he has more than seven hundred (700) videos on YouTube and roughly six hundred thousand (600,000) subscribers.

CONCLUSIONS: From the above descriptions and the five influencers profiled in the magazine, you can get some idea of how influencers ply their trade and support design and building endeavors. I hope you enjoyed this one.

DESIGNING HIGH-TECH K-12 SCHOOLS

March 28, 2019

We all wish for our children and grandchildren the very best education available to them, whether it’s public or private. Local school districts often struggle to maintain older schools and to provide the upgrades necessary to keep them safe and functional. The digital age, along with the pressing need for security, has changed those needs tremendously. Let’s take a look at what Consulting-Specifying Engineer magazine has discovered about NEW school trends and designs that fulfill the needs of modern-day students.

- Technology touches all aspects of modern school systems and is a key component of content display and communication within the classroom. Teachers and students are no longer static within the classroom. They are mobile and flexible, which creates the need for robust, flexible, and in most cases wireless, infrastructure that supports learning rather than distracting from it.

- Multipurpose facilities with large central areas that can serve as cafeteria, theater and even gymnasium are key to this trend. Individual classrooms are quickly becoming a thing of the past. The mechanical, electrical and plumbing equipment must be flexible enough to serve these many uses and to transition quickly from one to the next.

- SECURITY is an absolute must when considering a new school building. Site access must be limited, and movement throughout the building must be secured with active cameras and card access. This must be accomplished without making the school look like a prison.

- Color tuning, a new term for me, is accomplished through paint and lighting and creates an atmosphere for maximum learning. These efforts produce a more natural atmosphere that is more in line with circadian rhythms. Warmer color temperatures can increase relaxation and reduce stress during learning.

- IAQ (Indoor Air Quality). According to the EPA:

  - Fifty percent (50%) of the schools in the U.S. today have issues linked to deficient or failing IAQ.

  - Deficient IAQ increases asthma risk by fifty percent (50%).

  - Test scores can drop by twenty-one percent (21%) with insufficient IAQ.

  - Schools with deficient IAQ have lower average student attendance rates.

  - Cleaner indoor air promotes better health for students and teachers.

  - Implementing IAQ management can boost test scores by over fifteen percent (15%).

  - Greater ventilation can reduce absenteeism by ten (10) absences per one thousand (1,000) students.

- School administrators and school boards demand facilities equipped with sufficient lighting and fire protection. Heating and air conditioning, as well as the electrical systems that drive this equipment, must be energy efficient. Emergency generators are becoming a basic requirement to keep card readers and emergency door access working.

- Voice-evacuation fire alarm, performance sound, and telecommunication systems must be provided and must be kept running by emergency generators if power failures occur.

- More and more high schools offer Advanced Placement (AP) courses that generate college credit. State-of-the-art equipment makes this possible. We are talking about laboratories, compressed air systems, medical and dental equipment, IT facilities, natural gas distribution systems, environmental systems supporting biodiesel, solar and wind turbines, and other specialized equipment. Many schools offer classes at night as well as during the day.

- All codes (local, state, federal and international) MUST be adhered to, with no exceptions.

- Construction costs account for only twenty to forty percent (20-40%) of total life-cycle costs, so maintenance and replacement must be considered when designing facilities.

- Control systems providing for energy savings during off-peak hours must be designed into school building facilities.

- LED lighting is becoming a must, and dimmable controls, occupancy/vacancy sensors and daylight harvesting are certainly desirable.

- For schools in the Midwest and other tornado-prone areas of our country, tornado shelters must be considered; they could certainly save lives.
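If construction is only 20 to 40 percent of a building's life-cycle cost, the total life-cycle cost can be estimated from the construction budget. Below is a minimal Python sketch of that arithmetic. The $30 million construction budget is a hypothetical figure, not one from the article, and the function name `life_cycle_estimate` is illustrative.

```python
def life_cycle_estimate(construction_cost: float, construction_share: float) -> tuple[float, float]:
    """Return (total life-cycle cost, cost incurred after construction).

    construction_share is the fraction of life-cycle cost spent on
    construction, e.g. 0.20 to 0.40 per the range quoted in the text.
    """
    if not 0 < construction_share <= 1:
        raise ValueError("construction_share must be in (0, 1]")
    total = construction_cost / construction_share
    return total, total - construction_cost


build = 30_000_000  # HYPOTHETICAL construction budget in dollars
for share in (0.20, 0.40):
    total, after = life_cycle_estimate(build, share)
    print(f"construction {share:.0%}: life cycle ${total:,.0f}, "
          f"of which ${after:,.0f} comes after construction")
```

At a 20% share, the hypothetical $30 million building implies about $150 million over its life, roughly $120 million of it after construction. At 40%, the total is about $75 million, with $45 million after construction. Either way, most of the money is spent after the ribbon cutting.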

These are just a few of the requirements architects and design engineers face when quoting a package to school boards and regional school systems. The work is much more sophisticated than ever before, with requirements never considered in the past. Times are changing, and for the better.

SMARTS

March 17, 2019

Who was the smartest person in the history of our species? Solomon, Albert Einstein, Jesus, Nikola Tesla, Isaac Newton, Leonardo da Vinci, Stephen Hawking: who would you name? We’ve had several individuals who broke the curve relative to intelligence. The Oxford Dictionary of the English Language defines IQ as:

“an intelligence test score that is obtained by dividing mental age, which reflects the age-graded level of performance as derived from population norms, by chronological age and multiplying by 100: a score of 100 thus indicates a performance at exactly the normal level for that age group. Abbreviation: IQ”
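The ratio definition quoted above comes down to one line of arithmetic. Below is a minimal Python sketch of it. The function name `ratio_iq` is illustrative and is not taken from any testing standard.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Classic ratio IQ: mental age divided by chronological age, times 100."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    # Multiply first so whole-number ages divide exactly
    return mental_age * 100 / chronological_age


# A 10-year-old performing like a typical 12-year-old
print(ratio_iq(12, 10))  # 120.0
# A child performing exactly at the norm for his or her age
print(ratio_iq(8, 8))    # 100.0
```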

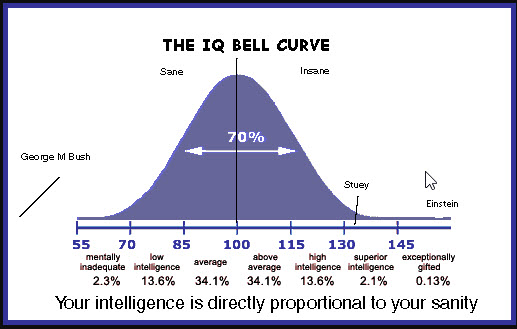

An intelligence quotient, or IQ, is a score derived from one of several standardized tests designed to measure intelligence. The term “IQ” is a translation of the German Intelligenzquotient and was coined by the German psychologist William Stern in 1912. Dr. Stern proposed it as a method for scoring early modern children’s intelligence tests, such as those developed by Alfred Binet and Théodore Simon in the early twentieth century. Although the term “IQ” is still in use, the scoring of modern IQ tests such as the Wechsler Adult Intelligence Scale is now based on a projection of the subject’s measured rank onto the Gaussian bell curve, with a center value of one hundred (100) and a standard deviation of fifteen (15). Older editions of the Stanford-Binet test used a standard deviation of sixteen (16). As you can see from the graphic above, roughly sixty-eight percent (68%) of the human population has an IQ between eighty-five and one hundred and fifteen, that is, within one standard deviation of the mean. From one hundred and fifteen to one hundred and thirty, you are considered highly intelligent. Above one hundred and thirty, you are exceptionally gifted.
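The percentages for each IQ band follow directly from the normal curve. Below is a short Python sketch. It assumes scores are exactly normally distributed with a mean of 100 and a standard deviation of 15; real test norms only approximate this. The helper names `normal_cdf` and `share_between` are hypothetical.

```python
import math


def normal_cdf(x: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """P(score <= x) for a normal distribution (assumed mean 100, SD 15)."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def share_between(lo: float, hi: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Fraction of the population scoring between lo and hi."""
    return normal_cdf(hi, mean, sd) - normal_cdf(lo, mean, sd)


if __name__ == "__main__":
    print(f"85 to 115:  {share_between(85, 115):.1%}")   # about 68.3%
    print(f"115 to 130: {share_between(115, 130):.1%}")  # about 13.6%
    print(f"above 130:  {1 - normal_cdf(130):.1%}")      # about 2.3%
```

Each band edge sits a whole number of standard deviations from 100. That is why the familiar 68 / 13.6 / 2.3 percent figures appear regardless of which test is used, as long as it is normed to a standard deviation of 15.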

What are several qualities of highly intelligent people? Let’s look.

QUALITIES:

- They have a great deal of self-control

- They are very curious

- They are avid readers

- They are intuitive

- They love learning

- They are adaptable

- They are risk-takers

- They are NOT overconfident

- They are open-minded

- They are somewhat introverted

You probably know individuals who fit this profile. We are going to look at one right now: John von Neumann.

JOHN von NEUMANN:

The Financial Times of London celebrated John von Neumann as “The Man of the Century” on Dec. 24, 1999. The headline hailed him as the “architect of the computer age,” not only the “most striking” person of the 20th century, but its “pattern-card”—the pattern from which modern man, like the newest fashion collection, is cut.

The Financial Times and others characterize von Neumann’s importance for the development of modern thinking by what are termed his three great accomplishments, namely:

(1) Von Neumann is credited as the inventor of the computer. Nearly all computers in use today follow the “architecture” von Neumann described, which makes it possible to store the program, together with data, in working memory.

(2) By comparing human intelligence to computers, von Neumann laid the foundation for “Artificial Intelligence,” which is taken to be one of the most important areas of research today.

(3) Von Neumann’s “game theory” became a dominant tool for economic analysis, which gained recognition in 1994 when the Nobel Prize in Economic Sciences was awarded to John C. Harsanyi, John F. Nash, and Richard Selten.
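The stored-program idea in point (1) is easiest to see in a toy machine where one memory holds both the instructions and the numbers they operate on. Below is a minimal Python sketch. The instruction set (LOAD, ADD, STORE, HALT) and the function name `run` are invented for illustration. Real machines encode instructions as binary numbers rather than Python tuples.

```python
# Toy stored-program machine: ONE list is the memory, and it holds both the
# program and its data. The instruction set is hypothetical.

def run(memory: list) -> list:
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]  # fetch the instruction from the shared memory
        pc += 1
        if op == "LOAD":      # acc = memory[arg]
            acc = memory[arg]
        elif op == "ADD":     # acc += memory[arg]
            acc += memory[arg]
        elif op == "STORE":   # memory[arg] = acc (writes into the same memory)
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")


program = [
    ("LOAD", 4),     # address 0
    ("ADD", 5),      # address 1
    ("STORE", 6),    # address 2
    ("HALT", None),  # address 3
    2,               # address 4: data
    3,               # address 5: data
    0,               # address 6: the result is written here
]

print(run(program)[6])  # 5
```

Because instructions live in ordinary memory, a program can be loaded, replaced, or even modified like any other data. Earlier machines had to be rewired or fed instructions from a separate medium.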

John von Neumann, original name János Neumann (born December 28, 1903, Budapest, Hungary; died February 8, 1957, Washington, D.C.), was a Hungarian-born American mathematician. As an adult, he appended “von” to his surname; the hereditary title had been granted to his father in 1913. Von Neumann grew from child prodigy to one of the world’s foremost mathematicians by his mid-twenties. Important work in set theory inaugurated a career that touched nearly every major branch of mathematics. Von Neumann’s gift for applied mathematics took his work in directions that influenced quantum theory, automata theory, economics, and defense planning. Von Neumann pioneered game theory and, along with Alan Turing and Claude Shannon, was one of the conceptual inventors of the stored-program digital computer.

Von Neumann did exhibit signs of genius in early childhood: he could joke in Classical Greek and, as a family stunt, he could quickly memorize a page from a telephone book and recite its numbers and addresses. Von Neumann learned languages and math from tutors and attended Budapest’s most prestigious secondary school, the Lutheran Gymnasium. The Neumann family fled Béla Kun’s short-lived communist regime in 1919 for a brief and relatively comfortable exile split between Vienna and the Adriatic resort of Abbazia. When von Neumann completed his secondary schooling in 1921, his father discouraged him from pursuing a career in mathematics, fearing that there was not enough money in the field. As a compromise, von Neumann studied chemistry and mathematics simultaneously. He earned a degree in chemical engineering from the Swiss Federal Institute of Technology in Zurich and a doctorate in mathematics (1926) from the University of Budapest.

OK, that’s all well and good, but do we know the IQ of Dr. John von Neumann?

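A frequently quoted estimate puts John von Neumann’s IQ at about 190, which would place him in super-genius territory. On a scale with a mean of one hundred (100) and a standard deviation of fifteen (15), 190 sits six standard deviations above the mean. That is far rarer than the top 0.1% of the world’s population; it works out to roughly one person in a billion.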
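To see how rare a score of 190 would be, you can compute how far out on the bell curve it falls. Below is a short Python sketch. It assumes a perfectly normal distribution with mean 100 and standard deviation 15, which real test norms do not guarantee that far into the tail. The helper name `upper_tail` is hypothetical. `statistics.NormalDist` is part of the Python standard library (3.8 and later).

```python
import math
from statistics import NormalDist

MEAN, SD = 100.0, 15.0  # assumed Wechsler-style scale


def upper_tail(score: float) -> float:
    """Fraction of a normal population scoring above `score`."""
    z = (score - MEAN) / SD
    # erfc keeps precision far out in the tail, where 1 - cdf would lose digits
    return 0.5 * math.erfc(z / math.sqrt(2.0))


for score in (130, 145, 190):
    p = upper_tail(score)
    print(f"IQ {score}: z = {(score - MEAN) / SD:.0f}, about 1 in {1 / p:,.0f}")

# Score needed to reach the top 0.1% of the population (about 146)
print(f"top 0.1% starts near IQ {NormalDist(MEAN, SD).inv_cdf(0.999):.0f}")
```

The top 0.1% begins near an IQ of 146. A score of 190 lies about one-in-a-billion deep in the tail.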

Von Neumann wrote about one hundred and fifty (150) published papers in his life: sixty (60) in pure mathematics, sixty (60) in applied mathematics, twenty (20) in physics, and the remainder on special mathematical or non-mathematical subjects. His last work, an unfinished manuscript written while in the hospital and later published in book form as The Computer and the Brain, gives an indication of the direction of his interests at the time of his death. It discusses how the brain can be viewed as a computing machine. The book is speculative in nature, but it discusses several important differences between brains and the computers of his day (such as processing speed and parallelism) and suggests directions for future research. Memory is one of the central themes in his book.

I told you he was smart!

OUR SHRINKING WORLD

March 16, 2019

We sometimes do not realize how miniaturization has affected our everyday lives. Electromechanical products have become smaller and smaller, and one great example is the cell phone we carry and use every day. Before we look at several examples, let’s define miniaturization.

Miniaturization is the trend to manufacture ever smaller mechanical, optical and electronic products and devices. Examples include the miniaturization of mobile phones and computers and the downsizing of vehicle engines. In electronics, Moore’s Law observes that the number of transistors on an integrated circuit at minimum component cost doubles roughly every two years (a figure often quoted as eighteen (18) months). This enables processors to be built in smaller sizes. In short, miniaturization refers to the evolution of primarily electronic devices as they become smaller, faster and more efficient. Miniaturization also applies to mechanical components, although it is sometimes very difficult to reduce the size of a functioning part.
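Moore's Law describes exponential growth, so small differences in the doubling period compound quickly. Below is a minimal Python sketch that compares the two commonly quoted periods over a decade. The function name `growth_factor` is illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """How many times larger a quantity becomes if it doubles once per period."""
    return 2 ** (years / doubling_period_years)


# The two commonly quoted doubling periods, projected over one decade
for period in (1.5, 2.0):
    print(f"doubling every {period} years -> about {growth_factor(10, period):.0f}x in 10 years")
```

Over ten years, a two-year doubling gives a 32-fold increase in transistor count. An eighteen-month doubling gives roughly a 102-fold increase, more than three times as much.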

The revolution of electronic miniaturization began during World War II and continues to change the world today. Miniaturization of computer technology has been the source of a seemingly endless battle between technology giants around the world. The market has become so competitive that microprocessor companies are constantly working to build a smaller microchip than their competitors, and as a result, computers become obsolete almost as soon as they are commercialized. The concept that underlies technological miniaturization is “the smaller the better”: smaller is faster, smaller is cheaper, smaller is more profitable. It is not just companies that profit from miniaturization advances; entire nations reap rewards through the capitalization of new developments. Devices such as personal computers, cellular telephones, portable radios, and camcorders have created massive markets through miniaturization and brought billions of dollars to the countries where they were designed and built. In the 21st century, almost every electronic device has a computer chip inside. The goal of miniaturization is to make these devices smaller and more powerful, and thus available everywhere. It has been said, however, that the time for continued miniaturization is limited: the smaller the computer chip gets, the more difficult it becomes to shrink the components that fit on the chip. I personally do not think this is the case, but I am a mechanical engineer and not an electronic or electrical engineer. I use the products; I do not develop them.

The world of miniaturization would not be possible at all if it were not for semiconductor technology. Devices made of semiconductors, notably silicon, are essential components of most electronic circuits. A lithography process is used to create circuitry layered over a silicon substrate. A transistor is a semiconductor device with three connections, capable of amplification in addition to rectification. Miniaturization entails increasing the number of transistors that fit on a single chip while shrinking the size of the chip. As the surface area of a chip decreases, designing newer and faster circuits becomes more difficult, because there is less room for the components that make the computer run faster and store more data.

There is no better example of miniaturization than cell phone development. The digital picture you see below gives some indication of how the cell phone has developed and how its physical size has decreased over the years. The cell phone at the far left is where it all started; the one at the far right is where we are today. If you look at the modern-day cell phone, you see a remarkable difference in size AND ability to communicate. This is all possible due to shrinking computer chips.

One of the most striking changes due to miniaturization is the use of digital equipment in the modern-day aircraft cockpit. The JPEG below is a mockup of an actual Convair 880 cockpit. With analog gauges, an engineering panel and an exterior shell, this cockpit reflects 1960s/1970s design and fabrication. In fact, this is the actual cockpit mockup that was used in the classic comedy film “Airplane!”.

Now, let us take a look at a digital cockpit. Notice any differences? It is cleaner, with fewer instruments. The GUI, or graphical user interface, can take the place of numerous dials and gauges that clutter and possibly confuse a pilot’s view.

I think you get the picture, so I challenge you to take some time this week to look at the electromechanical items we take for granted and discover how much they have shrunk. You just may be surprised.