MEMORY LOSS AND STATISTICS

April 3, 2022

A good friend of mine sent me this very interesting group of statistics. We are both considerably older than sixty-five and constantly try to keep our spirits up about getting older. I thought these facts would interest you also. Here we go:

Considering a class size of one hundred (100) persons in the world,

- Only 8 live to or exceed the age of sixty-five (65). Think about that one!!!!!!

World Population vs Memory Loss, (NOTE: Be sure to take the memory test at the end.)

Earth’s Population Statistics in Perspective. The population of Earth is around seven-point-eight (7.8) billion. For most people that is a large figure; however, if you condense seven-point-eight (7.8) billion into one hundred (100) persons, and then into various percentage statistics, the resulting analysis is much easier to comprehend.

Out of 100:

11 are in Europe

5 are in North America

9 are in South America

15 are in Africa

60 are in Asia

49 live in the countryside

51 live in cities

75 have mobile phones

25 do not.

30 have internet access

70 do not have the availability to go online

7 received university education

93 did not attend college.

83 can read

17 are illiterate.

33 are Christians

22 are Muslims

14 are Hindus

7 are Buddhists

12 are other religions

12 have no religious beliefs.

26 live less than 14 years

66 die between 15 and 64 years of age

8 are over 65 years old.

If you have your own home, eat full meals & drink clean water, have a mobile phone, can surf the internet, and have gone to college, you are in the minuscule privileged lot. (in the less than seven percent (7%) category)

Among one hundred (100) people in the world, only eight (8) live to or exceed the age of sixty-five (65).

If you are over sixty-five (65) years old, be content and very grateful. Cherish life, grasp the moment.

If you did not leave this world before the age of sixty-four (64) like the ninety-two (92) people who have gone before you, you are already the blessed amongst mankind.

Take good care of your own health. Cherish every remaining moment.

If you think you are suffering memory loss……. Anosognosia, very interesting…

In the following analysis the French Professor Bruno Dubois, Director of the Institute of Memory and Alzheimer’s Disease (IMMA) at La Pitié-Salpêtrière – Paris Hospitals, addresses the subject in a rather reassuring way:

“If anyone is aware of their memory problems, they do not have Alzheimer’s.”

1. Forget the names of families.

2. Do not remember where I put some things.

It often happens in people sixty (60) years and older that they complain that they lack memory. “The information is always in the brain, it is the “processor” that is lacking.”

This is “Anosognosia” or temporary forgetfulness.

Half of people sixty (60) and older have some symptoms that are due to age rather than disease. The most common cases are:

– forgetting the name of a person,

– going to a room in the house and not remembering why we were going there,

– a blank memory for a movie title or actor, an actress,

– a waste of time searching where we left our glasses or keys.

After sixty (60) years of age, most people have such a difficulty, which indicates that it is not a disease but rather a characteristic due to the passage of years. Many people are concerned about these oversights hence the importance of the following statements:

1. “Those who are conscious of being forgetful have no serious problem of memory.”

2. “Those who suffer from a memory illness or Alzheimer’s, are not aware of what is happening.”

Professor Bruno Dubois, Director of IMMA, reassures the majority of people concerned about their oversights:

“The more we complain about memory loss, the less likely we are to suffer from memory sickness.”

Now for a little neurological test:

Only use your eyes!

1- Find the C in the table below!

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOCOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

2- If you found the C, then find the 6 in the table below.

99999999999999999999999999999999999999999999999

99999999999999999999999999999999999999999999999

99999999999999999999999999999999999999999999999

69999999999999999999999999999999999999999999999

99999999999999999999999999999999999999999999999

99999999999999999999999999999999999999999999999

3- Now find the N in the table below. Attention, it’s a little more difficult!

MMMMMMMMMMMMMMMMMMMMMMMMMMMMNMM

MMMMMMMMMMMMMMMMMMMMMMMMMMMMMMM

MMMMMMMMMMMMMMMMMMMMMMMMMMMMMMM

MMMMMMMMMMMMMMMMMMMMMMMMMMMMMMM

MMMMMMMMMMMMMMMMMMMMMMMMMMMMMMM

If you pass these three tests without problem:

– you can cancel your annual visit to the neurologist.

– your brain is in perfect shape!

– you are far from having any relationship with Alzheimer’s.

CONCLUSIONS: I AM SO RELIEVED!!!!!

JAMES WEBB TELESCOPE

February 4, 2022

Several years ago, our blender bit the dust. It served us well over the years but as with all electromechanical things its time was up. I won’t mention the retail outlet we visited for a new one, that’s not the point. Brought the new medium-priced blender home, read all of the instructions, plugged it in and nothing. The motor would not start. Back to the retailer; another blender; back home; nothing. Back to the retailer for a third machine. This time with success. (Please note the blender is still working.)

What if you had a remarkably difficult engineering project which had to be absolutely, dead-on perfect the first time? No margin for error. No do-overs. No ability to bring about a “fix”. That’s exactly what we have with the James Webb Telescope. The James Webb Space Telescope, the most powerful telescope ever built, has reached its final destination in space. Now comes the fun part.

Thirty days after its launch, the tennis court-size telescope made its way into a parking spot about a million miles away from Earth. From there, it will begin its ambitious mission to better understand the early days of our universe, peer at distant exoplanets and their atmospheres and help answer large-scale questions such as how quickly the universe is expanding. Having departed on December 25, 2021, Webb had completed over ninety-six percent (96%) of its journey as of 22 January 2022, with controllers ready to insert it into orbit around the second Lagrange point (L2), which is one point five (1.5) million kilometers from the Earth.

Controllers expect to spend the next three months adjusting the infrared mirror segments and testing the Webb instruments, added Bill Ochs, Webb project manager at NASA’s Goddard Space Flight Center. JWST, as the telescope is called, is more sophisticated than the Hubble Space Telescope and will be capturing pictures of the very first stars created in the universe. Scientists say it will also study the atmospheres of planets orbiting stars outside our own solar system to see if they might be habitable, or even inhabited. “The very first stars and galaxies formed are hurtling away from Earth so fast that the light is shifted from visible wavelengths into the infrared. So the Hubble telescope couldn’t see that light, but JWST can,” NPR’s Joe Palca explained. In its final form, the telescope is about three stories tall with a mirror that’s twenty-one (21) feet across, much too big to fly into space fully assembled. Instead, it was folded into a rocket and painstakingly unfurled by teams sending commands from Earth. Though the monthlong process was a nerve-wracking one, it appeared to have been completed flawlessly. I would restate “flawlessly.” The digital pictures below will give you some idea as to the overall package and components assembled.

If you look at the Human (to scale) portion of the picture at the upper left, you can see just how large the mirrors are. Imagine folding all of these components into one package to be deployed along the trajectory and completely deployed when reaching the end of its trajectory. A truly amazing engineering feat. The JPEG below will give a better picture as to how big the mirror is.

Please note the sunshade. This component is critical to the design to preclude significant overheating of the primary mirror.

As mentioned earlier, L2, the final destination, is approximately one-point-five (1.5) million kilometers (a little under one million miles) from the Earth.
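For anyone who wants to check the distance in both units, here is a minimal sketch of the conversion. It takes the one-point-five (1.5) million kilometer figure quoted earlier in this post as its only input; the function and variable names are my own and purely illustrative.

```python
# Exact by definition of the international mile.
KM_PER_MILE = 1.609344


def km_to_miles(km: float) -> float:
    """Convert a distance in kilometers to statute miles."""
    return km / KM_PER_MILE


# Distance to L2 as quoted in this post (an approximate, rounded figure).
l2_km = 1.5e6
l2_miles = km_to_miles(l2_km)

print(f"L2 is roughly {l2_km:,.0f} km, or about {l2_miles:,.0f} miles, from Earth")
```

The result lands a little under one million miles, which is why "about a million miles" and "1.5 million kilometers" describe the same parking spot.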

The cost for the total project is approximately ten billion US dollars. In my opinion, this cost is well worth it because the results will possibly take us back to the time of the “big bang”. Scientists will be examining the results of the investigations years from now and what may be determined is tremendously exciting.

As always, I welcome your comments.

AMUSING OURSELVES TO DEATH

October 18, 2020

Amusing Ourselves to Death is the title of an incredibly good book by Mr. Neil Postman. The book is a marvelous look at the differences between Orwell and Huxley and their forecast as to conditions in the early 20th century. Now, you may think this book, and consequently the theme of this book, will be completely uninteresting and a bit far-fetched but it actually describes our social condition right now.

I’m going to do something a little different with this post. I’m going to present, in bullet form, lines of text and passages from the book so you will get a flavor of what Mr. Postman is trying to say. Keep in mind, these passages are in the book and do not necessarily represent my opinions—although very close and right on in some cases. (Please see the quotes about our Presidential elections.) Here we go.

- What Orwell feared were those who would ban books. What Huxley feared was that there would be no one who wanted to read one.

- The news of the day is a figment of our technological imagination. It is, quite precisely, a media event. We tend to watch fragments of events from all over the world because we have multiple media whose forms are well suited to fragmented conversations. Without a medium to create its form, the news of the day does not exist.

- Beginning in the fourteenth century, the clock made us into time-keepers, and then time-savers, and now time-servers. With the invention of the clock, eternity ceased to serve as the measure and focus of human events.

- A great media shift has taken place in America, with the result that the content of much of our public discourse has become dangerous nonsense. Under the governance of the printing press, discourse in America was different from what it is now—generally coherent, serious and rational; and then how, under the governance of television, it has become shrivelled and absurd. Even the best things on television are its junk and no one and nothing seems to be seriously threatened by it.

- Since intelligence is primarily defined as one’s capacity to grasp the truth of things, it follows that what a culture means by intelligence is derived from the character of its important forms of communication.

- Intelligence implies that one can dwell comfortably without pictures, in a field of concepts and generalizations.

- Epistemology is defined as follows: the theory of knowledge, especially with regard to its methods, validity, and scope. Epistemology is the investigation of what distinguishes justified belief from opinion. With that being the case, the epistemology created by television not only is inferior to a print-based epistemology but is dangerous.

- In the “colonies”, literacy rates were notoriously difficult to assess, but there is sufficient evidence (mostly drawn from signatures) that between 1640 and 1700, the literacy rate for men in Massachusetts and Connecticut was somewhere between eighty-nine percent (89%) and ninety-five percent (95%). The literacy rate for women in those colonies is estimated to have run as high as sixty-two percent (62%). The Bible was the central reading matter in all households, for these people were primarily Protestants who shared Martin Luther’s belief that printing was God’s highest and most extreme act of Grace.

- The writers of our Constitution assumed that participation in public life required the capacity to negotiate the printed word. Mature citizenship was not conceivable without sophisticated literacy, which is why the voting age in most states was set at twenty-one and why Jefferson saw in universal education America’s best hope.

- Towards the end of the nineteenth century, the Age of Exposition began to pass, and the early signs of its replacement could be discerned. Its replacement was to be the Age of Show Business.

- The Age of Show Business was facilitated by the advent of photography. The name photography was given by the famous astronomer Sir John F. W. Herschel. It is an odd name since it literally meant “writing with light”.

- Conversations provided by television promote incoherence and triviality; the phrase “serious television” is a contradiction in terms; and television speaks in only one persistent voice, the voice of entertainment.

- Television has found a significant free-market audience. One result has been that American television programs are in demand all over the world. The total estimate of U.S. television exports is approximately one hundred thousand (100,000) to two hundred thousand (200,000) hours, equally divided among Latin America, Asia and Europe. All of this has occurred simultaneously with the decline of America’s moral and political prestige, worldwide.

- Politicians in today’s world are less concerned with giving arguments than with giving off impressions, which is what television does best. Post-debate commentary largely avoids any evaluation of the candidates’ ideas, since there were none to evaluate. (Does this sound familiar?)

- The results of too much television—Americans are the best entertained and quite likely the least-informed people in the Western world.

- The New York Times and The Washington Post are not Pravda; the Associated Press is not Tass. There is no Newspeak here. Lies have not been defined as truth, nor truth as lies. All that has happened is that the public has adjusted to incoherence and been amused into indifference.

- In the world of television, Big Brother turns out to be Howdy Doody.

- We delude ourselves into believing that most everything a teacher normally does can be replicated with greater efficiency by a micro-computer.

- Most believe that Christianity is a demanding and serious religion. When it is delivered as easy and amusing, it is another kind of religion altogether. It has been estimated that the total revenue of the electric church exceeds five hundred million U.S. dollars ($500 million).

- The selling of an American president is an astonishing and degrading thing, but it is only part of a larger point: in America, the fundamental metaphor for political discourse is the television commercial. We are not permitted to know who is the best President, or Governor, or Senator, but whose image is best in touching and soothing the deep reaches of our discontent. “Mirror mirror on the wall, who is the fairest of them all?”

- A perplexed learner is a learner who will turn to another station.

- Television viewing does not significantly increase learning, and it is inferior to print and less likely to cultivate higher-order, inferential thinking.

CONCLUSION:

You may agree with some of this, none of this or all of this, but it is Mr. Postman’s opinion after years of research. He has a more-recent book dealing with social media and the effect it has on our population at large. I wanted to purchase and read this book first to get a feel for his beliefs.

A LITTLE MORE INDEPENDENCE

October 7, 2020

Are you married to your digital equipment: i.e. e-mail, cell phones, YouTube, Instagram, Facebook, Twitter, etc etc.? OK, you cannot stand to put your cellphone down during dinner or leave it alone when going to sleep. Right? Many can say yes and many wish this were not true. In her book “Trampled by Unicorns”, Maelle Gavet gives us a plan of attack for breaking the habit or at least not being addicted to the habit. Not only does she mention the addiction but she is very much aware of how these digital giants encroach on our every “click” and know our habits and favorite sites. She indicates we are recorded every second we are online. Let’s take a look at her suggestions on how to take action:

- Make DuckDuckGo our default search engine rather than Google. As you know, Google tracks our every move and saves that data for future use. DuckDuckGo does not. There may be others that do not track, so investigate.

- Swap out Gmail account(s) for privacy-preserving alternatives like ProtonMail, Tutanota, Runbox or Posteo.

- Explore Fairbnb.coop, Innclusive, Homestay, or Vacasa as alternatives to Airbnb and HomeAway (the latter owned by Expedia).

- Buy new books from a local independent bookstore and not online. Buying online is a great convenience, and during this COVID-19 pandemic probably the best way to go, but Amazon, Books-A-Million, and Barnes & Noble all keep track of what we purchase as well as the search engine we use to buy through.

- Call restaurants directly when we want to make a reservation and/or do curb-side pickup. Don’t go online through Grubhub or another online service. They track your requests.

- Shop with local retail outlets as opposed to shopping online. Sorry Amazon. Once again, really tough during this pandemic but desirable when possible.

- Look for ethical online shopping centers such as Green America or Ethical Consumer.

- Vary the use of Uber or Lyft with myriad local ride-sharing possibilities.

- Use alternative messaging apps like Signal, Wire, or Wickr.

- When social distancing rules are relaxed, shop at local brick and mortar stores.

- Escape the tech upgrade cycle by maintaining and repairing hardware as much as possible. You probably do not have to buy the latest and greatest electronic device offered. The iPhone can wait.

- Declutter mailboxes and empty junk e-mail folders to reduce energy consumed.

- Delete or deactivate old e-mail accounts, social network accounts, apps, and shopping accounts. If you don’t use them, lose them.

- Don’t shop on Black Friday.

- Promote ethical and responsible digital citizenship with children.

- Actively limit data collection

- Support human-centric technology.

- Fight fake news and unethical digital behaviors.

- Pressure big tech into more empathetic behavior

- Lobby for more and better legislation.

I personally would like to add several others as follows:

- As best you can, monitor what your children access online and prohibit, i.e. block, questionable sites.

- Demand time without digital equipment. Breakfast, lunch and certainly dinner should be family time and not time spent on looking at e-mail.

- I think Twitter is complete garbage. It’s equivalent to writing on a bathroom wall at a third-rate truck stop on Highway 66 between Chicago and San Diego. I’m really embarrassed that our President uses Twitter for much of his communication. Embarrassing!!!!!!

- Be very careful as to information found on Wikipedia. I have found some of it to be very unreliable and downright incorrect.

- Use multiple sites when accessing the news. News outlets tell us what they want us to know and not always factual information comes from their broadcasts. Mix it up.

- Have a specific limit for yourself and certainly your children relative to time spent on digital equipment. (Tough one here.)

We all can cut back and probably should. Does anyone read any more? Give it a try.

CAUTIOUS OPTIMISM

September 29, 2020

NOTE: Data from the September 2020 issue of “Microwaves & RF” is used for this post.

Already, it’s been a very tough year. Even though a complete post-COVID-19-recovery is expected, most design engineering groups and manufacturing firms feel “life after COVID” will be greatly different. The following data represents key findings from a new study of the Design Engineering group of Endeavor Business Media. This group includes the publications, Electronic Design, Electronic Sourcebook, Evaluation Engineering, Hydraulics & Pneumatics, Machine Design, Microwaves & RF, and Source Today. The following questions were asked with answers given:

- How optimistic do you feel about the next six (6) months as businesses start to reopen and recover from the COVID-caused recession?

  - Optimistic: 21.00%

  - Somewhat Optimistic: 50.80%

  - Not Very Optimistic: 27.80%

- Since COVID-19-related guidelines, including “stay-at-home” mandates, have gone into effect, how has your level of business activity changed?

  - Increased: 15.40%

  - The Same: 27.30%

  - Slowed, yet picking up: 22.40%

  - Decreased: 35.00%

- How has COVID-19 affected your personal current working status?

  - Normal: 30.60%

  - Already at Home: 8.20%

  - Part-time at home because of COVID: 54.20%

  - Out of work: 7.10%

- What changes do you anticipate for your company as a result of COVID-19?

  - New COVID-related products/services: 19.70%

  - Social distancing in production: 51.90%

  - Re-evaluating supply chain: 36.00%

  - Virtual customer service model: 19.10%

  - Increased sanitation at work: 61.70%

  - Work at home policy: 38.20%

  - Investment in automation or IoT technology: 15.30%

- Which of the following cost reductions is your company making in 2020?

  - No new equipment purchases: 41.50%

  - Reducing parts and maintenance: 27.90%

  - Suspend contract services: 25.70%

  - Staff reductions: 31.10%

  - Budget cuts: 43.70%

  - Marketing budget cuts: 27.90%

  - Reducing prices: 7.60%

- Would you attend a virtual event or digital programming (e.g., webinar, conference, meeting, etc.) in lieu of traveling?

  - YES: 88.30%

  - NO: 11.70%
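One thing worth noticing about the numbers above is that some questions add up to about one hundred percent and others go well past it. Here is a small sketch that totals each question. The short question labels, the function name and the one-point tolerance are my own choices, and reading a total far above one hundred as "respondents could pick more than one answer" is my inference, not something the survey states.

```python
# Percentages copied from the survey answers listed above.
# The short question labels are my own shorthand.
survey = {
    "Optimism about the next six months": [21.0, 50.8, 27.8],
    "Change in business activity": [15.4, 27.3, 22.4, 35.0],
    "Personal working status": [30.6, 8.2, 54.2, 7.1],
    "Anticipated company changes": [19.7, 51.9, 36.0, 19.1, 61.7, 38.2, 15.3],
    "Cost reductions in 2020": [41.5, 27.9, 25.7, 31.1, 43.7, 27.9, 7.6],
    "Attend a virtual event": [88.3, 11.7],
}


def looks_single_choice(percentages, tolerance=1.0):
    """True when the answers total roughly 100%, allowing for rounding."""
    return abs(sum(percentages) - 100.0) <= tolerance


totals = {question: round(sum(p), 1) for question, p in survey.items()}
multi_select = [q for q, p in survey.items() if not looks_single_choice(p)]

for question, total in totals.items():
    kind = "pick one" if question not in multi_select else "pick several (assumed)"
    print(f"{question}: {total}% -> {kind}")
```

The two questions about company changes and cost reductions are the ones that run well over one hundred percent, so those percentages should be read item by item rather than as slices of one pie.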

One huge area relative to changes for the near future is the way engineering and manufacturing firms seek out and obtain information. While sixty-two-point eight percent (62.80%) said they have no travel plans for the next six (6) months and just eight-point nine percent (8.90%) are back to their pre-COVID travel schedule, eighty-eight-point three percent (88.30%) of the respondents indicated they would definitely attend digital meetings instead of traveling. I do not think this will change over the next few years and maybe never change within this decade. Obviously, those individuals and companies involved with organizing seminars, product shows and meetings centered around dispensing product and service information will be greatly affected by stay-at-home thinking. Airlines, hotels, car rentals will thus be affected directly also. Great changes in process for our country and the world at large.

LIGHTS OUT

August 11, 2020

I retired from General Electric in 2005 after joining the Roper Corporation in 1986. GE bought Roper in 1987 to get the Sears business for cooking products. It was a good marriage; some say very good. Long-term Roper employees eligible for retirement came away with a significant nest egg. Quite a few retired as a result of the company buyout. One of my carpool buddies let me look at a check from GE. It was a little over thirty thousand dollars with more on the way. That was history.

I just read an article in the publication “Assembly,” summer 2020, indicating that GE has sold its lighting division to Savant Systems Inc. Savant sells home-automation technology. GE Lighting will remain in Cleveland, Ohio and its seven hundred (700) employees will transfer to Savant, which will also get a long-term license for the GE brand. This one-hundred-thirty-year-old (130) lighting business goes back to the very core of the company, which once used the line “GE: We bring good things to light” in its advertising. In the grand scheme of things, it’s a tiny deal, amounting to just $250 million, according to The Wall Street Journal (actual deal terms weren’t disclosed). This move is a much bigger statement than the dollars involved suggest.

What remained of lighting was largely consumer-focused, an area that GE no longer really plays in. Lightbulbs are just the latest in a long list of things (toasters, fans, radios, televisions, plastics, adhesives, motors, mixers, locomotives, computers, cooking products) that GE no longer makes. GE sold the cooking products to the Haier Group, a Chinese firm. Lighting is a relatively low-margin business that adds little to the top and bottom lines. From a strategic perspective, it was time to finally sell the business, even if it didn’t bring in much money. More important, perhaps, the move helps set the tone for the future.

The General Electric Company has changed and is changing. The following graphics will show the transition of the conglomerate from 2007 to 2020. It’s possible that slimming down the company’s portfolio will pay off for Flannery and for GE. Selling some (OK, most) of the company’s divisions will offer management the opportunity to streamline the corporate structure and reduce costs. The company’s outperforming healthcare division is likely to be attractive to buyers, which should help Flannery shore up GE’s balance sheet, as will the sale of its stake in Baker Hughes.

But once the dust settles, GE is going to be left with a winning aviation engine business and an energy turbine business in desperate need of a turnaround, with no clear path to overall growth. Maybe GE will continue to radically reshape its portfolio over the next ten (10) years through acquisitions, or maybe it will stick with the current simplified structure. Either way, though, outperformance is uncertain, and there are probably better places for your money. Right now, GE stock is selling for $6.82 per share. Jack Welch “lived” with Wall Street and nursed the stock day in and day out. It was critical to Dr. Welch that the “market” was satisfied with the numbers.

CONCLUSION: Things change but, in GE’s case, there must be a reason. There are very strong opinions on this subject.

Former General Electric CEO Jeff Immelt, the scandal-plagued leader who was ousted after years at the helm of the company, is responsible for destroying the sprawling, multinational conglomerate, according to Home Depot co-founder Ken Langone. “He had a big steel ball on a crane and he destroyed it as if he was tearing down an old building,” Langone said on Friday during an interview with FOX Business’ Maria Bartiromo. Immelt was handpicked in 2001 to take over the company by legendary CEO Jack Welch, but in August 2017, Immelt was replaced by John Flannery, who was removed as CEO on Monday and replaced with H. Lawrence Culp Jr., effective immediately.

In January, sources told FOX Business that Welch’s assessment of Immelt’s tenure was “scathing,” and that he’d privately conceded one of the biggest mistakes he made in his wide-spanning career as chief executive was appointing Immelt as his successor. Immelt came under fire last October when it was revealed that he would fly with two private jets when traveling in case the one he was riding in had “mechanical problems.”

I just hope this giant of a company can at some point rebound and redefine itself. Time will tell.

HERE WE GO AGAIN

April 6, 2019

If you read my posts you know that I rarely “do politics”. Politicians are very interesting people only because I find all people interesting. Everyone has a story to tell. Everyone has at least one good book in them and that is their life story. With that being the case, I’m going to break with tradition by taking a look at the “2020” presidential lineup. I think it’s a given that Donald John Trump will run again but have you looked at the Democratic lineup lately? I am assuming with the list below that former Vice President Joe Biden will run so he, even though unannounced to date, will eventually make that probability known.

- Joe Biden—AGE 76

- Bernie Sanders—AGE 77

- Kamala Harris—AGE 54

- Beto O’Rourke—AGE 46

- Elizabeth Warren—AGE 69

- Cory Booker—AGE 49

- Amy Klobuchar—AGE 58

- Pete Buttigieg—AGE 37

- Julian Castro—AGE 44

- Kirsten Gillibrand—AGE 52

- Jay Inslee—AGE 68

- John Hickenlooper—AGE 67

- John Delaney—AGE 55

- Tulsi Gabbard—AGE 37

- Tim Ryan—AGE 45

- Andrew Yang—AGE 44

- Marianne Williamson—AGE 66

- Wayne Messam—AGE 44

CANDIDATES NOW EXPLORING THE POSSIBILITIES:

- William F. Weld: AGE 73

- Michael Bennet: AGE 54

- Eric Swalwell: AGE 38

- Steve Bullock: AGE 52

- Bill de Blasio: AGE 57

- Terry McAuliffe: AGE 62

- Howard Schultz: AGE 65

Eighteen (18) people have declared already and I’m sure there will be others as time goes by. If we slice and dice, we see the following:

- Six (6) women, or 33.33%, which is the greatest number to ever declare for a presidential election.

- AGE GROUPS

  - 70-80: 2 (11%)

  - 60-70: 4 (22%)

  - 50-60: 4 (22%)

  - 40-50: 6 (33%)

  - Younger than 40: 2 (11%)
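If you want to slice and dice the list yourself, here is a minimal sketch of the tally. The ages are copied from the eighteen (18) declared candidates listed above; the `age_group` helper and its decade labels are my own naming, chosen to match the groups in this post.

```python
from collections import Counter

# Ages of the eighteen declared candidates, in the order listed above.
ages = [76, 77, 54, 46, 69, 49, 58, 37, 44, 52, 68, 67, 55, 37, 45, 44, 66, 44]


def age_group(age: int) -> str:
    """Label an age with its decade bracket, lumping everyone under 40 together."""
    if age < 40:
        return "Younger than 40"
    low = (age // 10) * 10
    return f"{low}-{low + 10}"


counts = Counter(age_group(a) for a in ages)
shares = {group: round(100 * n / len(ages)) for group, n in counts.items()}

for group in ["70-80", "60-70", "50-60", "40-50", "Younger than 40"]:
    print(f"{group}: {counts[group]} ({shares[group]}%)")

# Six of the eighteen declared candidates are women.
women_share = round(100 * 6 / len(ages), 2)
print(f"Women: 6 ({women_share}%)")
```

Each candidate falls in exactly one bracket because the helper assigns an age like 60 to "60-70" and never to "50-60".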

I am somewhat amazed that these people, declared and undeclared, feel they can do what is required to be a successful president. In other words, they think they have what it takes to be the Chief Executive of this country. When I look at the list, I see people whose names I do NOT recognize at all and I wonder, just who would want the tremendous headaches the job will certainly bring? And the scrutiny: who needs that? The President of the United States is in the fishbowl from dawn to dusk. Complete loss of privacy. Let’s look at some of the perks the job provides:

- The job pays $400,000.00 per year.

- The president is also granted a $50,000 annual expense account, $100,000 nontaxable travel account, and $19,000 for entertainment.

- Former presidents receive a pension equal to the pay that the head of an executive department (Executive Level I) would be paid; as of 2017, it is $207,800 per year. The pension begins immediately after a president’s departure from office.

- The President gets to fly on Air Force One and Marine One. (That was 43’s best perk according to him.)

- You get to ride in the “BEAST”.

- Free room and board at 1600 Pennsylvania Avenue

- Access to Camp David

- The hired help is always around catering to your every need.

- Incredible security

- You have access to a personal trainer if so desired

- Free and unfettered medical

- The White House has a movie theater

- You are a life-time member of the “President’s Club”

- The President has access to a great guest house—The Blair House.

- You get a state funeral. (OK this might not be considered a perk relative to our list.)

The real question: Are all of these perks worth the trouble? President George Bush (43) could not wait to move back to Texas. Other than Air Force 1, he really hated the job. President Bill Clinton loved the job and would still be president if our constitution would allow it.

SMARTS

March 17, 2019

Who was the smartest person in the history of our species? Solomon, Albert Einstein, Jesus, Nikola Tesla, Isaac Newton, Leonardo da Vinci, Stephen Hawking: who would you name? We’ve had several individuals who broke the curve relative to intelligence. As defined by the Oxford Dictionary of the English Language, IQ:

“an intelligence test score that is obtained by dividing mental age, which reflects the age-graded level of performance as derived from population norms, by chronological age and multiplying by 100: a score of 100 thus indicates a performance at exactly the normal level for that age group. Abbreviation: IQ”

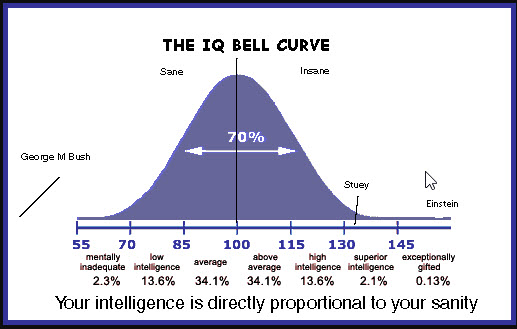

An intelligence quotient or IQ is a score derived from one of several different intelligence measures. Standardized tests are designed to measure intelligence. The term “IQ” is a translation of the German Intelligenzquotient and was coined by the German psychologist William Stern in 1912. This was a method proposed by Dr. Stern to score early modern children’s intelligence tests such as those developed by Alfred Binet and Théodore Simon in the early twentieth century. Although the term “IQ” is still in use, the scoring of modern IQ tests such as the Wechsler Adult Intelligence Scale is now based on a projection of the subject’s measured rank on the Gaussian bell curve with a center value of one hundred (100) and a standard deviation of fifteen (15). The Stanford-Binet IQ test has a standard deviation of sixteen (16). As you can see from the graphic below, roughly sixty-eight percent (68%) of the human population has an IQ between eighty-five and one hundred and fifteen. From one hundred and fifteen to one hundred and thirty you are considered to be highly intelligent. Above one hundred and thirty you are exceptionally gifted.
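To put numbers on both scoring methods, here is a short sketch. The first function is the dictionary's ratio formula (mental age divided by chronological age, times one hundred). The second part assumes an ideal bell curve with a center of one hundred (100) and a standard deviation of fifteen (15), as described above; real test populations only approximate that curve, and the function and variable names are my own.

```python
from statistics import NormalDist


def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Stern's original ratio score: mental age / chronological age x 100."""
    return mental_age / chronological_age * 100


# Idealized bell curve for modern deviation scoring (mean 100, SD 15).
iq_curve = NormalDist(mu=100, sigma=15)

share_85_115 = iq_curve.cdf(115) - iq_curve.cdf(85)
share_115_130 = iq_curve.cdf(130) - iq_curve.cdf(115)
share_above_130 = 1 - iq_curve.cdf(130)

print(f"Ratio IQ of a 8-year-old performing like a 10-year-old: {ratio_iq(10, 8):.0f}")
print(f"Between 85 and 115: {share_85_115:.1%}")
print(f"Between 115 and 130: {share_115_130:.1%}")
print(f"Above 130: {share_above_130:.1%}")
```

On this idealized curve the band from 85 to 115 is one standard deviation either side of the center, which is where the figure of about sixty-eight percent comes from; only a little over two percent of people land above 130.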

What are several qualities of highly intelligent people? Let’s look.

QUALITIES:

- A great deal of self-control.

- Very curious

- They are avid readers

- They are intuitive

- They love learning

- They are adaptable

- They are risk-takers

- They are NOT over-confident

- They are open-minded

- They are somewhat introverted

You probably know individuals who fit this profile. We are going to look at one right now: John von Neumann.

JOHN von NEUMANN:

The Financial Times of London celebrated John von Neumann as “The Man of the Century” on Dec. 24, 1999. The headline hailed him as the “architect of the computer age,” not only the “most striking” person of the 20th century, but its “pattern-card”—the pattern from which modern man, like the newest fashion collection, is cut.

The Financial Times and others characterize von Neumann’s importance for the development of modern thinking by what are termed his three great accomplishments, namely:

(1) Von Neumann is the inventor of the computer. All computers in use today have the “architecture” von Neumann developed, which makes it possible to store the program, together with data, in working memory.

(2) By comparing human intelligence to computers, von Neumann laid the foundation for “Artificial Intelligence,” which is taken to be one of the most important areas of research today.

(3) Von Neumann used his “game theory” to develop a dominant tool for economic analysis, which gained recognition in 1994 when the Nobel Prize for economic sciences was awarded to John C. Harsanyi, John F. Nash, and Reinhard Selten.

John von Neumann, original name János Neumann (born December 28, 1903, Budapest, Hungary; died February 8, 1957, Washington, D.C.), was a Hungarian-born American mathematician. As an adult, he appended von to his surname; the hereditary title had been granted to his father in 1913. Von Neumann grew from child prodigy to one of the world’s foremost mathematicians by his mid-twenties. Important work in set theory inaugurated a career that touched nearly every major branch of mathematics. Von Neumann’s gift for applied mathematics took his work in directions that influenced quantum theory, automata theory, economics, and defense planning. Von Neumann pioneered game theory and, along with Alan Turing and Claude Shannon, was one of the conceptual inventors of the stored-program digital computer.

Von Neumann exhibited signs of genius in early childhood: he could joke in Classical Greek and, for a family stunt, he could quickly memorize a page from a telephone book and recite its numbers and addresses. Von Neumann learned languages and math from tutors and attended Budapest’s most prestigious secondary school, the Lutheran Gymnasium. The Neumann family fled Béla Kun’s short-lived communist regime in 1919 for a brief and relatively comfortable exile split between Vienna and the Adriatic resort of Abbazia. Upon completion of von Neumann’s secondary schooling in 1921, his father discouraged him from pursuing a career in mathematics, fearing that there was not enough money in the field. As a compromise, von Neumann simultaneously studied chemistry and mathematics. He earned a degree in chemical engineering from the Swiss Federal Institute in Zurich and a doctorate in mathematics (1926) from the University of Budapest.

OK, that’s all well and good, but do we know the IQ of Dr. John von Neumann?

John von Neumann’s IQ is often cited as 190, which is considered super-genius level and would put him in the top 0.1% of the world’s population.

With his marvelous IQ, he wrote about one hundred and fifty (150) published papers in his life: roughly sixty (60) in pure mathematics, twenty (20) in physics, and sixty (60) in applied mathematics, with the remainder on special or non-mathematical subjects. His last work, an unfinished manuscript written while in the hospital and later published in book form as The Computer and the Brain, gives an indication of the direction of his interests at the time of his death. It discusses how the brain can be viewed as a computing machine. The book is speculative in nature, but it discusses several important differences between brains and the computers of his day (such as processing speed and parallelism) and suggests directions for future research. Memory is one of the central themes in his book.

I told you he was smart!

42,000 SQUARE FEET OF PAINT

March 9, 2019

For most of us, the city where we were born is the “best city on earth”. EXAMPLE: About ten (10) years ago I traveled with three other guys to Sweetwater, Texas. About sixteen (16) hours of nonstop travel, each of us taking four (4) hour shifts. We attended the fiftieth (50th) “Rattlesnake Roundup”. (You are correct: what were we thinking?) Time of year: March. The winter months are when the critters are less active and their strike is much slower. Summer months, forget it. You will not win that contest. We were there about four (4) days and got to know the great people of Sweetwater. The city itself is very hot, even for March, but most of all windy and dusty. The wind never seems to stop. Ask anyone there about Sweetwater and you will hear “best little city on the planet”. Wouldn’t leave for all the money in the world. That’s just how I feel about my home town: Chattanooga, Tennessee.

Public Art Chattanooga decided to add a splash of color to the monolithic grey hulk of the AT&T building, located on the Southside of Chattanooga proper. This building is a tall windowless structure resembling the “BORG” habitat detailed in several Star Trek episodes. Not really appealing in any sense of the word. When Public Art received permission to go forward, they called internationally respected artist Meg Saligman. Meg was the obvious choice for the work. This is her largest mural to date covering approximately 42,000 square feet. It is definitely one of the five (5) largest murals in the country and the largest in the Southeastern part of the United States.

The ML King District Mural Project reinforces the critical role public art plays in lending a sense of place to a specific neighborhood, and certainly contributes to future neighborhood beautification and economic development efforts. The images and people in the mural are inspired by real stories, individuals, and the history of the neighborhood. For approximately six (6) months, people living and visiting the Southside were interviewed to obtain their opinion and perspective as to what stories would be displayed by the mural. The proper balance was required, discussed, and met, with the outcome being spectacular.

This is a Meg Saligman Studios project. Co-Principal Artists are Meg Saligman and Lizzie Kripke. Lead Artists are Hollie Berry and James Tafel Shuster. In 2006, Public Art Review featured Meg Saligman as one of the ten most influential American muralists of the past decade. She has received numerous awards, including the Philadelphia Mural Arts Program’s Visionary Artist Award, and honors from the National Endowment for the Arts, the MidAtlantic Arts Foundation, the Pennsylvania Council on the Arts, and Philadelphia’s Leeway Foundation. Saligman has painted more than fifty murals all over the world, including Philadelphia, Shreveport, Mexico City, and now Chattanooga. She has a way of mixing the classical and contemporary aspects of painting together. Prior to the M.L.K. mural, Saligman’s most famous work was “Common Threads”, located in the Philadelphia area. It is painted on the west wall of the Stevens Administrative Center at the corner of Broad and Spring Garden Streets. Other major works include “Philadelphia Muses” on 13th and Locust streets, a multimedia “Theatre of Life” on Broad and Lombard streets, “Passing Through” over the Schuylkill Expressway, and the paint and LED light installation at Broad and Vine streets, “Evolving Face of Nursing”. Elsewhere in the country, Saligman’s work can be viewed in Shreveport, Louisiana, with “Once in a Millennium Moon”, and in Omaha, Nebraska, with “Fertile Ground.”

A key component of the M.L.K. Mural in Chattanooga was the local apprentice program offering an opportunity for local artists to work with the nationally recognized muralist and to learn techniques and methods for large scale projects such as this. From thirty-three (33) applicants, Meg interviewed and hired a team of six (6) locals who constituted an integral part of the program itself. Each artist was hired for their artistic skill sets and their ability to work collaboratively as team members. Members of the local team are: 1.) Abdul Ahmad, 2.) Anna Carll, 3.) Rondell Crier, 4.) Shaun LaRose, 5.) Mercedes Llanos and 6.) Anier Reina.

Now, with that being said, let’s take a look.

From this digital photograph and the one below, you can get a feel for the scope of the project and the building the artwork is applied to. As you can see, it’s a dull grey, windowless, concrete structure well-suited for such a face-lift. Due to the height and size of the building, bucket trucks were used to apply the paint.

The layout, of course, was developed on paper first with designs applied to quadrants on the building. You can see some of the intricacies of the process from the JPEG above.

The planning for this project took the better part of one year due to the complexity and the layout necessary prior to initiating the project. As I traveled down M.L. King Avenue, I would watch the progress in laying out the forms that would accept the colors and shades of paint. In one respect, it was very similar to paint-by-numbers. Really fascinating to watch the development of the artwork even prior to painting.

The completed mural covers all four (4) sides of the AT&T building and as you can see from the JPEG below—it is striking.

This gives you one more reason to visit Chattanooga. As always, I welcome your comments.

PUBLIC SERVICE ANNOUNCEMENT

May 30, 2022

Over the past several years I have had several medical problems that definitely have gotten my attention. I’ve experienced (and it has been a real experience) multiple MRIs, CAT scans, EKGs, EEGs, X-rays, cranial bubble procedures I did not even know existed, etc. I could go on and on but for the sake of time will bore you no longer. The doctors thought at one time I might have atrial fibrillation (A-fib), then decided otherwise after additional testing. Next on the list: sleep apnea. Let’s see if that could be a factor. I took the “at-home” test for a period of time and then was instructed to come in for an overnight stay at the Memorial Sleep Clinic. I was wired to the point of hoping there was no lightning in the local area because I would surely be fried. To my amazement, I stopped breathing one hundred and nine (109) times in an eight (8) hour time period. This is an average of approximately fourteen (14) times per hour. The threshold for sleep apnea is five (5) times per hour. I was diagnosed as having a moderate case but one that could cause significant problems. I ended up with a CPAP device.
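For anyone who wants to check my arithmetic, here is a small Python sketch of the per-hour calculation. The function name `events_per_hour` and the constant name are my own, the threshold of five comes from the figure quoted above, and this is simple division, not a diagnostic tool.

```python
# Threshold quoted above: five breathing pauses per hour.
APNEA_THRESHOLD_PER_HOUR = 5


def events_per_hour(events: int, hours: float) -> float:
    """Average number of breathing pauses per hour of sleep."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return events / hours


# My numbers from the overnight study: 109 pauses in 8 hours.
rate = events_per_hour(109, 8)
print(f"{rate:.1f} pauses per hour")
print("Over the threshold:", rate > APNEA_THRESHOLD_PER_HOUR)
```

The exact figure is 13.625 pauses per hour, which I rounded to fourteen (14), and that is well over the threshold of five (5).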

A continuous positive airway pressure (CPAP) device is the most commonly prescribed treatment for sleep apnea disorders. A CPAP machine’s compressor (motor) generates a continuous stream of pressurized air that travels through an air filter into a flexible tube. This tube delivers purified air into a mask that’s sealed around your nose or mouth. As you sleep, the airstream from the CPAP machine pushes against any blockages, opening your airways so your lungs receive plenty of oxygen. Without anything obstructing this flow of oxygen, your breathing doesn’t pause. As a result, you don’t repeatedly wake up in order to resume breathing.

CPAP devices all have the same basic components:

The benefits in using a CPAP device are as follows:

I have been very pleased to find the CPAP device has definitely made a difference in my ability to get a good night’s sleep. At one time, I would wake up with a crashing headache. I started my day with three Tylenol in addition to the medications and vitamins I generally take. I have not had a headache since using the CPAP machine. That is definitely a blow for freedom. There is a period of adjustment when using the device, and you may have to try several mask configurations before you find one that suits your sleep patterns.

Please take a look at the digital image above and see if you recognize any issues you presently have. Maybe a CPAP might be the answer.
