WE’VE COME A LONG WAY

January 24, 2015

Two days ago I needed to refresh my memory concerning the Second Law of Thermodynamics. Most of my work involves designing work cells to automate manufacturing processes, but one client asked me to look at a problem involving thermodynamics and heat transfer. The second law can be stated as follows:

“It is impossible to extract an amount of heat “Qh” from a hot reservoir and use it all to do work “W”. Some amount of heat “Qc” must be exhausted to a cold reservoir.”

Another way to say this is:

“It is not possible for heat to flow from a cooler body to a warmer body without any work being done to accomplish this flow.”
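The first statement can be made quantitative. Conservation of energy gives W = Qh - Qc, so the thermal efficiency W/Qh is always less than one. For an ideal reversible (Carnot) engine running between absolute temperatures Th and Tc, the ceiling is 1 - Tc/Th. The short Python sketch below computes both; the function names are my own, and the 600 K / 300 K reservoirs and heat quantities are illustrative values only.

```python
def thermal_efficiency(q_hot: float, q_cold: float) -> float:
    """Efficiency of an engine that takes in q_hot and rejects q_cold (same energy units)."""
    if q_hot <= 0:
        raise ValueError("q_hot must be positive")
    work = q_hot - q_cold  # first law: W = Qh - Qc
    return work / q_hot


def carnot_limit(t_hot_k: float, t_cold_k: float) -> float:
    """Second-law upper bound on efficiency between two reservoirs (temperatures in kelvin)."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k (absolute temperatures)")
    return 1.0 - t_cold_k / t_hot_k


# Illustrative case: 600 K source, 300 K sink, engine rejecting 650 of every 1000 units of heat.
limit = carnot_limit(600.0, 300.0)
actual = thermal_efficiency(1000.0, 650.0)
print(f"Carnot limit: {limit:.2f}, example engine: {actual:.2f}")  # Carnot limit: 0.50, example engine: 0.35
```

Because Qc can never be zero, no real engine reaches an efficiency of 1.0, which is exactly what the first quotation says.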

That refresher took about fifteen (15) minutes, but it made me realize just how far we have come in teaching and presenting technical subjects, i.e., STEM (Science, Technology, Engineering and Mathematics) material. Theory does not change. The giants upon whose shoulders we stand paved the way and set the course for discovery and advancement in so many technical disciplines, but one device has revolutionized teaching methods: the modern-day computer with accompanying software.

I would like to stay with thermodynamics to illustrate a point. At the university I attended, we were required to take two semesters of heat transfer and two semesters of thermodynamics. Both subjects were supposed to be taken during the sophomore year, and both were offered by the department of mechanical engineering. These courses were “busters” for many ME majors; more than once they were the deciding factor in whether a student stayed in engineering or tried another field of endeavor. The book was “Thermodynamics” by Gordon van Wylen, copyright 1959. My sophomore year was 1962, well before computers were used at the university level. I remember poring over the steam tables, looking up saturation temperatures and saturation pressures to find specific volume, enthalpy, entropy and internal energy. It seemed as though interpolation was always necessary. Have you ever tried negotiating a Mollier chart to pick off needed data? WARNING: YOU CAN GO BLIND TRYING. Psychrometric charts presented the very same problem. I remember one homework project in which we were expected to design a cooling tower for a commercial heating and air conditioning system. All of the pertinent specifications were given, as well as the cooling load to be delivered to the facility. It was drudgery, and even though it was so long ago, I remember the “all-nighter” I pulled trying to get the final design on paper. Today this information is readily available through software, saving hours of time and greatly improving productivity. I will say this: by the time these two courses were completed, you did understand the basic principles and associated theory of heat systems.
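The interpolation we did by hand was almost always linear: take the two tabulated rows that bracket the value you need and assume the property varies in a straight line between them. Here is a minimal Python sketch of that step. The two table rows are placeholder numbers chosen for easy arithmetic, not values copied from a real steam table.

```python
def interpolate(x: float, x0: float, y0: float, x1: float, y1: float) -> float:
    """Linearly interpolate y at x between tabulated points (x0, y0) and (x1, y1)."""
    if x1 == x0:
        raise ValueError("bracketing points must differ in x")
    fraction = (x - x0) / (x1 - x0)  # how far x lies between the two rows
    return y0 + fraction * (y1 - y0)


# Placeholder rows: (temperature, property value). NOT real steam-table data.
row_low = (100.0, 2000.0)
row_high = (110.0, 2100.0)

# Property at 104 degrees, 40 percent of the way from the lower row to the upper row.
value = interpolate(104.0, *row_low, *row_high)
print(value)
```

Software does the same thing (or uses curve fits of the property data) thousands of times a second, which is why nobody needs an all-nighter for it anymore.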

Remember conversion tables? One of the websites most used by working engineers is “onlineconversions.com”. It provides conversions between differing measurement systems for length, temperature, weight, area, density and power, and even has oddball categories such as “fun stuff” and miscellaneous. Fun stuff is truly interesting: the Chinese Zodiac, pig Latin, Morse code and dog years are all subheadings, among many, many more. All of this is possible without an exhaustive search through page after page of printed documentation; all you have to do is log on.
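Behind a conversion site is little more than a table of factors. A minimal Python sketch follows, using the exact definitional factors for the inch (25.4 mm) and the avoirdupois pound (0.45359237 kg). The function names are hypothetical, and this is not how that website is implemented. Note that temperature is the odd one out: it needs an offset as well as a scale factor.

```python
# Exact definitional factors (international inch and avoirdupois pound).
MM_PER_INCH = 25.4
KG_PER_POUND = 0.45359237


def inches_to_mm(inches: float) -> float:
    return inches * MM_PER_INCH


def pounds_to_kg(pounds: float) -> float:
    return pounds * KG_PER_POUND


def fahrenheit_to_celsius(deg_f: float) -> float:
    # Temperature scales differ in both zero point and degree size.
    return (deg_f - 32.0) * 5.0 / 9.0


print(inches_to_mm(2.0))             # 50.8
print(round(pounds_to_kg(10.0), 4))  # 4.5359
print(fahrenheit_to_celsius(212.0))  # 100.0
```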

The business courses I took (yes, we were required to take several non-technical courses) were just as laborious. We constructed spreadsheets, elaborate ones at that, for cost accounting and finance; all of this is accomplished today with MS Excel. One great feature of MS Excel is the Σ, or sum, feature. When you have fifty (50) or more line items, it’s 2:30 in the morning, and all you want to do is wrap things up and go to bed, this becomes a godsend.

I cannot imagine where we will be in twenty (20) years relative to improvements in technology. I just hope I’m around to see them.

MACHINE VISION

January 2, 2015

INTRODUCTION:

Machine vision is an evolving technology used to replace or complement manual inspections and measurements. It uses digital cameras and image-processing software, and it is found in a variety of industries to automate production, increase production speed and yield, and improve product quality. One primary objective is judging the quality of a product when high-speed production is required. The industry is knowledge-driven and faces the ever-increasing complexity of the components and modules that make up machine vision systems. In the last few years, the markets for machine vision components and systems have grown significantly.

Machine vision, also known as “industrial vision” or “vision systems”, applies computer vision primarily to industrial manufacturing processes such as defect detection, and also to non-manufacturing uses such as traffic control and healthcare. The inspection results provide the input needed for control, for example robot control or defect verification. In a typical setup, cameras capture images, software interprets them against some predetermined tolerance or requirement, and the result is signaled to the relevant control systems. These systems have become steadily more powerful while also becoming easier to use. Recent advances such as smart cameras and embedded machine vision systems have widened the scope of machine vision to applications in both the industrial and non-industrial sectors.

INDUSTRIAL SPECIFICS:

Let’s take a very quick look at several components and systems used when applying vision to specific applications.

The graphic above shows products advancing down a conveyor past cameras mounted on either side of the line. These cameras process information relative to specifications pre-loaded into software. One such specification might be a physical dimension of the product itself. The image for each product may look similar to the following:

In this example, the dimensions 55.85 mm, 41.74 mm and 13.37 mm are being checked and represent the critical-to-quality information. The computer program holds the “limits of acceptability”, i.e., the maximum and minimum values. A product with any dimension falling outside these limits is rejected and removed from the conveyor for disposition.
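The accept/reject decision itself is simple once the camera software has produced measurements. Here is a minimal Python sketch, assuming the measurements arrive as a dictionary keyed by dimension name. The nominal values come from the example above; the ±0.05 mm window and the names `Limit`, `LIMITS` and `inspect` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Limit:
    """Acceptability window for one critical-to-quality dimension (mm)."""
    name: str
    minimum: float
    maximum: float

    def accepts(self, measured: float) -> bool:
        return self.minimum <= measured <= self.maximum


# Nominal values from the example image; the +/-0.05 mm window is hypothetical.
LIMITS = [
    Limit("length", 55.85 - 0.05, 55.85 + 0.05),
    Limit("width", 41.74 - 0.05, 41.74 + 0.05),
    Limit("height", 13.37 - 0.05, 13.37 + 0.05),
]


def inspect(measurements: dict[str, float]) -> tuple[bool, list[str]]:
    """Return (accepted, names of dimensions that fall outside their limits)."""
    failures = [lim.name for lim in LIMITS if not lim.accepts(measurements[lim.name])]
    return (not failures, failures)


ok, failed = inspect({"length": 55.87, "width": 41.74, "height": 13.45})
print(ok, failed)  # False ['height']
```

In a real cell, a `False` result would trigger the reject mechanism that pulls the part off the conveyor.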

Another use for machine vision is simple counting, as the following two JPEGs indicate.

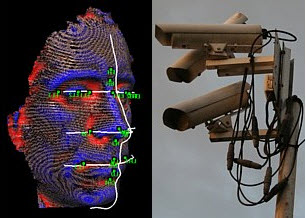
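One common way to count parts is to threshold the image into "part" and "background" pixels and then count the connected regions. The Python sketch below does this with a breadth-first flood fill on a tiny hand-made binary grid. The grid and the function name are hypothetical. A production system would use a vision library and would also have to separate parts that touch each other.

```python
from collections import deque


def count_objects(image: list[list[int]]) -> int:
    """Count 4-connected groups of 1-pixels in a binary (already thresholded) image."""
    rows = len(image)
    cols = len(image[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] and not seen[r][c]:
                count += 1  # found a part we have not visited yet
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:  # flood-fill every pixel belonging to this part
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and image[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


parts = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [1, 0, 0, 0, 0],
]
print(count_objects(parts))  # 3
```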

FACIAL RECOGNITION:

One example of a non-industrial application of machine vision is facial recognition, generally considered one facet of the biometrics technology suite. Facial recognition plays a major role in identifying and apprehending suspected criminals, as well as individuals in the process of committing a crime or other unwanted activity. Casinos in Las Vegas use facial recognition to spot “players” with shady records, or even employees complicit with individuals trying to get even with “the house”. The technology incorporates face detection in both visible and infrared modalities, image quality analysis, verification and identification. Many companies have added cloud-based image-matching technology to their product range, applying theory and innovation to challenging real-world problems. Facial recognition technology is extremely complex and depends upon many data points on the human face.

A grid of “surface features” is constructed; those features are then compared with photographs stored in databases or archives. In this fashion, positive identification can be accomplished.

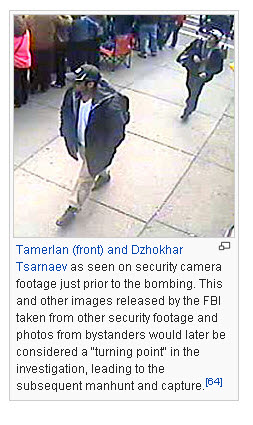
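The grid-and-compare idea can be sketched as a nearest-neighbor search: each face is reduced to a vector of measurements, and the probe image is matched to the closest archived vector, provided it is close enough. The Python sketch below uses three-number vectors, names and a distance threshold that are all hypothetical. Real systems use far more data points and much more sophisticated feature extraction.

```python
import math

# Hypothetical archive: person -> feature vector extracted from an archived photo.
GALLERY = {
    "person_a": [0.10, 0.80, 0.30],
    "person_b": [0.90, 0.20, 0.50],
}


def identify(probe: list[float], gallery: dict[str, list[float]], threshold: float):
    """Return (name, distance) of the closest entry, or (None, distance) if nothing is close enough."""
    best_name, best_dist = None, math.inf
    for name, features in gallery.items():
        d = math.dist(probe, features)  # Euclidean distance between feature vectors
        if d < best_dist:
            best_name, best_dist = name, d
    if best_dist <= threshold:
        return best_name, best_dist
    return None, best_dist


print(identify([0.12, 0.79, 0.33], GALLERY, 0.10)[0])  # person_a
print(identify([0.50, 0.50, 0.90], GALLERY, 0.10)[0])  # None
```

The threshold is the key design choice: set it too loose and strangers are "identified"; set it too tight and genuine matches are missed.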

One of the most successful cases for the use of facial recognition followed the 2013 Boston Marathon bombing. Cameras mounted at various locations around the site captured photographs of Tamerlan and Dzhokhar Tsarnaev before they placed the backpacks used in both blasts. Even though this was not facial recognition in the truest sense of the word, there is no doubt the cameras were instrumental in identifying both criminals.

LAW ENFORCEMENT AND TRAFFIC CONTROL:

Remember that last ticket you got for speeding? Maybe, just maybe, that ticket came to you through the mail with a very “neat” picture of your license plate AND the speed at which you were traveling. There was probably a warning sign like the following:

OK, so you did not see it. Cameras such as the one below were mounted on the shoulder of the road and snapped a very telling photograph.

You were nailed.

CRITICAL FACTORS:

There are five (5) basic and critical factors for choosing an imaging system. These are as follows:

- Resolution–A higher-resolution camera helps increase accuracy by yielding a clearer, more precise image for analysis; the downside is slower processing speed.

- Speed of Exposure–Products moving rapidly down a conveyor line require much shorter exposure times from the vision system. Examples include candy or bottled products moving at extremely fast rates.

- Frame Rate–The frame rate of a camera is the number of complete frames that a camera can send to an acquisition system within a predefined time period, which is usually stated as a specific number of frames per second.

- Spectral Response and Responsiveness–All digital cameras that employ electronic sensors are sensitive to light energy. The wavelengths to which cameras are sensitive typically range from approximately 400 nanometers to a little beyond 1000 nanometers. In some imaging applications it is desirable to isolate certain wavelengths of light emanating from an object, and the camera’s characteristics at the desired wavelength may need to be defined. Application engineers must undertake a matching and selection process to ensure the equipment suits the needs at hand.

- Bit Depth–Digital cameras produce digital data, or pixel values. Being digital, this data has a specific number of bits per pixel, known as the pixel bit depth. Each application should be considered carefully to determine whether fine or coarse steps in grayscale are necessary. Machine vision systems commonly use 8-bit pixels, and going to 10 or 12 bits instantly doubles data quantity, as another byte is required to transmit the data. This also results in decreased system speed because two bytes per pixel are used, but not all of the bits are significant. Higher bit depths can also increase the complexity of system integration since higher bit depths necessitate larger cable sizes, especially if a camera has multiple outputs.
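Several of these factors can be put into numbers when sizing a camera. The Python sketch below estimates two things: the raw data rate, which shows the doubling described under Bit Depth when pixels go from one byte to two, and the motion blur during one exposure, in pixels, for a part moving down the conveyor. The function names, the 1280 × 1024 sensor, 60 frames per second, 500 mm/s conveyor speed and 200 mm field of view are all hypothetical values for illustration. The sketch also assumes each pixel is padded to whole bytes, as the Bit Depth item describes.

```python
import math


def bytes_per_pixel(bit_depth: int) -> int:
    """Bytes per pixel when pixels are padded to whole bytes (8 -> 1, 10 or 12 -> 2)."""
    return math.ceil(bit_depth / 8)


def data_rate_mb_per_s(width_px: int, height_px: int, bit_depth: int, fps: float) -> float:
    """Raw image data rate in megabytes per second (1 MB = 1,000,000 bytes)."""
    return width_px * height_px * bytes_per_pixel(bit_depth) * fps / 1e6


def motion_blur_px(speed_mm_s: float, exposure_s: float, fov_mm: float, width_px: int) -> float:
    """Distance, in pixels, that a part travels while the shutter is open."""
    mm_per_pixel = fov_mm / width_px
    return speed_mm_s * exposure_s / mm_per_pixel


print(f"{data_rate_mb_per_s(1280, 1024, 8, 60):.1f} MB/s")   # 78.6 MB/s
print(f"{data_rate_mb_per_s(1280, 1024, 12, 60):.1f} MB/s")  # 157.3 MB/s
print(f"{motion_blur_px(500.0, 0.001, 200.0, 1280):.1f} px")  # 3.2 px
```

Shortening the exposure from 1 ms to 0.1 ms in this example cuts the blur to about a third of a pixel, which is why fast lines need short exposures (and usually more light).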

SUMMARY:

Machine vision technology will continue to grow simply because it is the most efficient and practical, not to mention cost-effective, method of obtaining the desired results. As always, I welcome your comments.

BIOMETRICS

January 2, 2015

Some data for this post is taken from “Biometrics Newsportal.com”

Biometrics is the science and technology of measuring and analyzing biological data. In information technology, biometrics refers to technologies that measure and analyze human body characteristics, such as DNA, fingerprints, eye retinas and irises, voice patterns, facial patterns and hand measurements, for authentication purposes. Authentication is the process of determining whether someone or something is, in fact, who or what it is declared to be. The greatest use of biometric data is to prevent identity theft, and for that reason biometric data is usually encrypted when it is gathered. Here is how biometric verification works on the back end: a software application identifies specific points of data as match points, and an algorithm translates those match points into a numeric value stored in the database. When the end user presents the biometric to the scanner, the new input is compared with the stored value, and authentication is either approved or denied.
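Because no two scans of the same finger or face are ever numerically identical, the comparison step cannot demand an exact match; it checks whether the new numbers are close enough to the stored ones. Here is a minimal Python sketch of enrollment and one-to-one verification under that assumption. The names `enroll` and `verify`, the user ID "alice", the template values, the 0.05 tolerance and the 80 percent requirement are all hypothetical. Encryption of the stored template is not shown.

```python
# Hypothetical in-memory store: user ID -> numeric template captured at enrollment.
TEMPLATES: dict[str, list[float]] = {}


def enroll(user_id: str, match_values: list[float]) -> None:
    """Store the numeric values derived from the user's match points."""
    TEMPLATES[user_id] = list(match_values)


def verify(user_id: str, sample: list[float], tolerance: float = 0.05, required: float = 0.8) -> bool:
    """Approve if enough sample values fall within tolerance of the stored template."""
    template = TEMPLATES.get(user_id)
    if template is None or len(template) != len(sample):
        return False  # unknown user or incompatible sample: deny
    close = sum(1 for t, s in zip(template, sample) if abs(t - s) <= tolerance)
    return close / len(template) >= required


enroll("alice", [0.12, 0.55, 0.81, 0.33, 0.67])
print(verify("alice", [0.13, 0.54, 0.80, 0.35, 0.66]))  # True  (genuine user, small scan-to-scan noise)
print(verify("alice", [0.40, 0.10, 0.20, 0.90, 0.15]))  # False (impostor)
```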

Let’s now take a look at the following areas to get a fairly complete picture of how biometrics is used.

DNA:

Humans have twenty-three (23) pairs of chromosomes containing the DNA blueprint. One member of each chromosomal pair comes from the mother; the other comes from the father. Nearly every cell in the human body contains a copy of this DNA. The large majority of DNA does not differ from person to person, but about 0.10 percent of a person’s genome is unique to each individual. This represents about 3 million base pairs of DNA.

Genes make up five percent (5%) of the human genome. The other ninety-five percent (95%) consists of non-coding sequences (which used to be called junk DNA). In non-coding regions there are identical sequences of DNA repeated anywhere from one to thirty (30) times in a row. These regions are called variable number tandem repeats (VNTRs). The number of tandem repeats at specific places (called loci) on chromosomes varies between individuals; for any given VNTR locus, an individual’s DNA will have a certain number of repeats. The more loci analyzed, the smaller the probability of finding two unrelated individuals with the same DNA profile.

DNA profiling determines the number of VNTR repeats at a number of distinctive loci and uses them to create an individual’s DNA profile. The main steps are: isolate the DNA (from a sample such as blood, saliva, hair, semen or tissue), cut the DNA into shorter fragments containing known VNTR areas, sort the fragments by size, and compare the fragments from different samples.
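Once the repeat counts have been measured, a profile is just a pair of numbers per locus (one from each parent), and comparing two profiles is a locus-by-locus check. The reason more loci make a coincidental match less likely is the product rule used in forensic work: if the loci are inherited independently, the chance that a random person matches at all of them is the product of the per-locus genotype frequencies. The Python sketch below shows both ideas. The locus names, repeat counts and the 0.1 frequency per locus are hypothetical.

```python
from math import prod

# A profile maps each locus to the pair of repeat counts found there (one per chromosome).
Profile = dict[str, tuple[int, int]]


def normalize(profile: Profile) -> Profile:
    """Order within a pair carries no meaning, so sort each pair before comparing."""
    return {locus: tuple(sorted(pair)) for locus, pair in profile.items()}


def profiles_match(a: Profile, b: Profile) -> bool:
    """True when both samples were typed at the same loci and agree at every one."""
    return a.keys() == b.keys() and normalize(a) == normalize(b)


def random_match_probability(genotype_freqs: list[float]) -> float:
    """Product rule: multiply per-locus genotype frequencies (assumes independent loci)."""
    return prod(genotype_freqs)


crime_scene = {"locus_A": (12, 14), "locus_B": (8, 8), "locus_C": (15, 17)}
suspect = {"locus_A": (14, 12), "locus_B": (8, 8), "locus_C": (15, 17)}
print(profiles_match(crime_scene, suspect))    # True
print(random_match_probability([0.1] * 5))     # roughly 1 in 100,000 with five loci
```

Each additional locus with a genotype frequency of 0.1 makes a coincidental match another ten times less likely.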

It’s a bit gruesome, but forensic identification using DNA matching provides the most positive method for identifying an individual, living or dead.

FINGERPRINTS:

Fingerprints have been used by law enforcement for years and offer an infallible means of personal identification. That is the essential reason fingerprints replaced other methods of establishing the identities of criminals reluctant to admit previous arrests. Fingerprints are the oldest and most accurate method of identifying individuals. No two people (not even identical twins) have the same fingerprints, and it is extremely easy for even the most accomplished criminals to leave incriminating fingerprints at the scene of a crime.

A fingerprint is made of a number of ridges and valleys on the surface of each finger. Ridges are the upper skin layer segments of the finger and valleys are the lower segments. The ridges form so-called minutia points: ridge endings (where a ridge ends) and ridge bifurcations (where a ridge splits in two). Many types of minutiae exist, including dots (very small ridges), islands (ridges slightly longer than dots, occupying a middle space between two temporarily divergent ridges), ponds or lakes (empty spaces between two temporarily divergent ridges), spurs (a notch protruding from a ridge), bridges (small ridges joining two longer adjacent ridges), and crossovers (two ridges which cross each other).

The uniqueness of a fingerprint can be determined by the pattern of ridges and furrows as well as the minutiae points. There are five basic fingerprint patterns: arch, tented arch, left loop, right loop and whorl. Loops make up sixty percent (60%) of all fingerprints, whorls account for thirty percent (30%), and arches for ten percent (10%).

Fingerprints are usually considered to be unique, with no two fingers having the exact same dermal ridge characteristics.

How does fingerprint biometrics work?

The main technologies used to capture the fingerprint image with sufficient detail are optical, silicon, and ultrasound.

There are two main algorithm families to recognize fingerprints:

- Minutia matching compares specific details within the fingerprint ridges. At registration (also called enrollment), the minutia points are located, together with their relative positions to each other and their directions. At the matching stage, the fingerprint image is processed to extract its minutia points, which are then compared with the registered template.

- Pattern matching compares the overall characteristics of the fingerprints, not only individual points. Fingerprint characteristics can include sub-areas of certain interest including ridge thickness, curvature, or density. During enrollment, small sections of the fingerprint and their relative distances are extracted from the fingerprint. Areas of interest are the area around a minutia point, areas with low curvature radius, and areas with unusual combinations of ridges.
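Minutia matching, the first family above, can be sketched as pairing up points that sit in nearly the same place, point in nearly the same direction and are of the same type. The Python sketch below does this greedily and scores the fraction of minutiae paired. It assumes the two prints are already aligned; real matchers must first correct for rotation, shift and skin distortion. The class name, the tolerances (10 pixels, 15 degrees) and the sample points are hypothetical.

```python
import math
from typing import NamedTuple


class Minutia(NamedTuple):
    x: float       # position in pixels
    y: float
    angle: float   # local ridge direction in degrees
    kind: str      # "ending" or "bifurcation"


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two directions, in degrees (350 vs 10 -> 20)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def match_score(template, probe, max_dist=10.0, max_angle=15.0) -> float:
    """Fraction of minutiae that pair up, assuming the prints are already aligned."""
    unused = list(probe)
    paired = 0
    for m in template:
        for i, p in enumerate(unused):
            if (m.kind == p.kind
                    and math.hypot(m.x - p.x, m.y - p.y) <= max_dist
                    and angle_diff(m.angle, p.angle) <= max_angle):
                paired += 1
                del unused[i]  # each probe minutia may be paired only once
                break
    return paired / max(len(template), len(probe), 1)


enrolled = [Minutia(100, 120, 30, "ending"), Minutia(150, 80, 95, "bifurcation"),
            Minutia(60, 200, 270, "ending")]
scanned = [Minutia(103, 118, 35, "ending"), Minutia(149, 84, 88, "bifurcation"),
           Minutia(300, 300, 10, "ending")]
print(round(match_score(enrolled, scanned), 3))  # 0.667
```

A deployed system would compare the score against a threshold tuned to balance false accepts against false rejects.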

EYE RETINAS AND IRISES:

Retina scans require the person to remove their glasses, place their eye close to the scanner, remain still, and focus on a specified point for approximately ten (10) to fifteen (15) seconds while the scan is completed. A retinal scan uses a low-intensity coherent light source, which is projected onto the retina to illuminate the blood vessels; these are then photographed and analyzed. A coupler is used to read the blood vessel patterns.

A retina scan cannot be faked as it is currently impossible to forge a human retina. Furthermore, the retina of a deceased person decays too rapidly to be used to deceive a retinal scan.

A retinal scan has an error rate of 1 in 10,000,000, compared with fingerprint identification error rates sometimes as high as 1 in 500.

An iris scan analyzes over 200 points of the iris, such as rings, furrows, freckles and the corona, and compares them with a previously recorded template.

Glasses, contact lenses, and even eye surgery do not change the characteristics of the iris.

To prevent an image or photo of the iris from being used instead of a real “live” eye, iris scanning systems vary the light and check that the pupil dilates or contracts.
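The liveness check described above comes down to a before-and-after comparison: a photograph's "pupil" stays the same size when the light changes, while a living pupil shrinks. Here is a minimal Python sketch, assuming separate software has already measured the pupil radius in pixels under dim and bright light. The function name and the 10 percent minimum shrinkage are hypothetical.

```python
def pupil_responds(radius_dim_px: float, radius_bright_px: float, min_change: float = 0.10) -> bool:
    """True if the pupil shrank by at least min_change (as a fraction) when the light increased."""
    if radius_dim_px <= 0:
        raise ValueError("pupil radius must be positive")
    shrinkage = (radius_dim_px - radius_bright_px) / radius_dim_px
    return shrinkage >= min_change


print(pupil_responds(40.0, 30.0))  # True  (live eye: 25 percent smaller)
print(pupil_responds(40.0, 40.0))  # False (printed photo: no change)
```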

VOICE RECOGNITION:

Speech includes two components: a physiological component (the vocal tract) and a behavioral component (the accent). It is almost impossible to imitate anyone’s voice perfectly. Voice recognition systems can discriminate between two very similar voices, including twins.

The voiceprint generated upon enrollment is characterized by the vocal tract, which is a unique physiological trait. A cold does not affect the vocal tract, so there is no adverse effect on accuracy levels. Only extreme vocal conditions such as laryngitis will prevent the user from using the system.

During enrollment, the user is prompted to repeat a short passphrase or a sequence of numbers. Voice recognition can utilize various audio capture devices (microphones, telephones and PC microphones). The performance of voice recognition systems may vary depending on the quality of the audio signal.

To prevent the risk of unauthorized access via tape recordings, the user is asked to repeat random phrases.
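The random-phrase defense works because a tape recording cannot anticipate words that are chosen only at login time. Here is a minimal Python sketch using the standard library's `secrets` module, which is intended for security-sensitive random choices. The word list, the function names and the `voice_matches` flag are hypothetical; the speech-to-text and voiceprint comparison steps are assumed to happen elsewhere.

```python
import secrets

# Hypothetical vocabulary the system knows how to prompt for.
WORDS = ["river", "orange", "seven", "window", "garden", "pilot", "silver", "maple"]


def make_challenge(n_words: int = 4) -> str:
    """Pick prompt words with a cryptographically secure random generator."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


def challenge_passed(challenge: str, transcript: str, voice_matches: bool) -> bool:
    """Grant access only if the right words were spoken AND the voiceprint matched."""
    return voice_matches and transcript.strip().lower() == challenge.lower()


prompt = make_challenge()
print("Please say:", prompt)
```

Requiring both conditions matters: the correct words in the wrong voice fail, and so does the right voice replaying an old phrase.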

HAND MEASUREMENTS:

Biometric hand recognition systems measure and analyze the overall structure, shape and proportions of the hand, e.g. length, width and thickness of hand, fingers and joints; characteristics of the skin surface such as creases and ridges. Some hand geometry biometrics systems measure up to ninety (90) parameters.

As hand biometrics rely on hand and finger geometry, the system will also work with dirty hands. The only limitation is for people with severe arthritis who cannot spread their hands on the reader.

The user places the palm of his or her hand on the reader’s surface and aligns his or her hand with the guidance pegs which indicate the proper location of the fingers. The device checks its database for verification of the user. The process normally only takes a few seconds.

To enroll, the user places his or her hand palm down on the reader’s surface.

To prevent a mold or a cast of the hand from being used, some hand biometric systems will require the user to move their fingers. Also, hand thermography can be used to record the heat of the hand, or skin conductivity can be measured.

SIGNATURE RECOGNITION:

Biometric signature recognition systems will measure and analyze the physical activity of signing, such as the stroke order, the pressure applied and the speed. Some systems may also compare visual images of signatures, but the core of a signature biometric system is behavioral, i.e. how it is signed rather than visual, i.e. the image of the signature.
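To compare *how* a signature is written, one option is to turn the pen trace into a speed profile and compare profiles with dynamic time warping (DTW), a standard technique for matching time series that may run a little faster or slower. The Python sketch below uses DTW purely as an illustration; I am not claiming this is what commercial signature systems use. It assumes the tablet reports (x, y, t) samples with strictly increasing timestamps, and the sample speed profiles are hypothetical.

```python
import math


def pen_speeds(points: list[tuple[float, float, float]]) -> list[float]:
    """Speed between successive (x, y, t) samples; t in seconds, strictly increasing."""
    speeds = []
    for (x0, y0, t0), (x1, y1, t1) in zip(points, points[1:]):
        speeds.append(math.hypot(x1 - x0, y1 - y0) / (t1 - t0))
    return speeds


def dtw_distance(a: list[float], b: list[float]) -> float:
    """Dynamic time warping distance between two 1-D sequences (0 means identical shape)."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: stretch a, stretch b, or advance both.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


reference = [1.0, 3.0, 4.0, 2.0]        # enrolled speed profile (hypothetical)
same_signer = [1.0, 1.0, 3.0, 4.0, 2.0]  # same rhythm, slightly slower start
forger = [2.0, 2.0, 2.0, 2.0]            # even, careful copying speed
print(dtw_distance(reference, same_signer), dtw_distance(reference, forger))
```

A forger copying the *image* of a signature tends to write slowly and evenly, which shows up as a large distance even when the shapes look right.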

CONCLUSION:

As you well know, identity theft is a HUGE problem across the world. TransUnion tells us the following:

- Identity theft is the fastest growing crime in America.

- The number of identity theft incidents has reached 9.9 million a year, according to the Federal Trade Commission.

- Every minute about 19 people fall victim to identity theft.

- It takes the average victim an estimated $500 and 30 hours to resolve each identity theft crime.

- Studies have shown that it’s becoming more common for the ones stealing your identity to be those closest to you. One study found 32% of identity theft victims discovered a family member or relative was responsible for stealing their identity. That same study found 18% were victimized by a friend, neighbor or in-home employee.

- Most cases of identity theft can be resolved if they are caught early.

- Financial institutions – like banks and creditors – usually only hold the victim responsible for the first $50 of fraudulent charges.

- Only 28% of identity theft cases involve credit or financial fraud. Phone, utility, bank and employment fraud make up another 50% of cases.
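Two of these figures check out against each other. Spreading the FTC's 9.9 million incidents a year over the minutes in a 365-day year gives almost exactly the "19 people a minute" quoted above:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year

incidents_per_year = 9_900_000  # FTC figure quoted above
per_minute = incidents_per_year / MINUTES_PER_YEAR
print(f"{per_minute:.1f} victims per minute")  # 18.8 victims per minute
```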

These are sobering numbers, but they reflect reality. The incorporation of biometrics just may be a partial answer to the identity theft so prevalent today. As always, I welcome your comments.

PRICE OUTLOOK FOR NATURAL GAS

January 25, 2015

The references for this post are derived from the NGV America (Natural Gas Vehicles) publication “Oil Price Volatility and the Continuing Case for Natural Gas as a Transportation Fuel”; 400 North Capitol St. NW, Washington, D.C. 20001; phone (202) 824-7360; fax (202) 824-9160; http://www.ngvamerica.org.

If you have read my posts, you realize that I am a staunch supporter of alternative fuels for transportation, specifically LNG (liquefied natural gas) and CNG (compressed natural gas). We all know that oil is a non-renewable resource, a precious resource and one that should be conserved if at all possible. With that said, there has been a significant drop in gasoline prices over the past few weeks, which may lull us into thinking that conservation measures for oil-based fuels are no longer necessary. Let’s look at several facts and then try to draw conclusions.

The chart below indicates the ebb and flow of crude oil vs. natural gas prices in BTU equivalence. Remember that BTU stands for British Thermal Unit: the energy required to raise the temperature of one pound of water by one degree Fahrenheit. As you can see, the price of natural gas per barrel (energy equivalent) has remained fairly stable since 2008 relative to the price of crude per barrel. Natural gas, either LNG or CNG, is considerably more “affordable” than crude oil.

There are several reasons for the price of oil per barrel dropping over the past few months. These are as follows:

Over the long term, oil demand is likely to increase as economic growth returns to more normal levels and economic activity picks up. As in recent years, the developing countries, led by China and India, will likely drive oil demand. The developed countries, including the U.S., are not expected to see much growth in overall petroleum use.

According to the International Energy Agency (IEA) and the U.S. Energy Information Administration (EIA), oil markets may turn the corner sometime in late 2015, when these agencies predict that oil demand and supply will cross back over. These agencies are also forecasting 2015 prices in the mid- to high-$50 per barrel range. The most recent Short-Term Energy Outlook from EIA (January 2015 STEO) pegs the price of Brent oil at an average of $58 a barrel in 2015, reflecting averages as low as $49 a barrel and as high as $67 a barrel in the latter part of the year. The WTI price of oil is expected to average $3 less than Brent, for a 2015 average of $55 a barrel. For 2016, EIA’s January STEO forecasts average prices of $75 per barrel for Brent oil and $71 for WTI oil.

Another important issue is the current number of U.S. refineries. Virtually no new refineries have been built in the U.S. for several years, and the number of operable refineries dropped from 150 to 142 between 2009 and 2014. Will diesel prices continue to fall? Will the transportation sector of our economy continue to benefit from lower prices? We must also remember that refineries have several potential markets for diesel fuel other than transportation, since it can be used for home heating, industrial purposes and as boiler fuel. The lead-up to winter has increased home heating fuel demand, particularly in the Northeast, which has likely also contributed to a slower decline in diesel prices.

What is the long-term projection for transportation fuels? The graphic below indicates that natural gas will continue to be the lowest-cost fuel compared with gasoline and diesel.

This is also supported by the following chart. As you can see, natural gas prices have remained steady over the past few years.

Another key factor in assessing the long-term stability of transportation fuel prices is the cost of the commodity as a portion of its price at the pump. Market volatility and commodity price increases have a much larger impact on gasoline and diesel fuel prices than they do on natural gas. As shown below, as much as 70 percent of the cost of gasoline and 60 percent of the cost of diesel fuel is directly attributable to the commodity cost of oil, while only 20 percent of the cost of CNG is attributable to the commodity cost of natural gas. This is key to understanding the volatile price swings of petroleum-based fuels compared with the stability of natural gas.
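A quick calculation shows why the commodity share matters so much. If only the commodity portion of the pump price moves, the pump price changes by (commodity share) × (commodity price change). The Python sketch below applies a hypothetical 50 percent jump in commodity price to the shares quoted above, assuming taxes, refining or compression, and distribution costs stay fixed.

```python
def pump_price_change(commodity_share: float, commodity_change: float) -> float:
    """Fractional change in pump price when only the commodity portion changes."""
    return commodity_share * commodity_change


# Commodity shares from the text; the +50 percent commodity move is hypothetical.
for fuel, share in [("gasoline", 0.70), ("diesel", 0.60), ("CNG", 0.20)]:
    print(f"{fuel}: {pump_price_change(share, 0.50):+.0%}")
# gasoline: +35%
# diesel: +30%
# CNG: +10%
```

The same commodity shock moves the gasoline pump price three and a half times as much as the CNG price.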

Proven, abundant and growing domestic reserves of natural gas are another influence on the long-term stability of natural gas prices. The recent estimates provided by the independent and non-partisan Colorado School of Mines’ Potential Gas Committee have included substantial increases to domestic reserves. The U.S. is now the number one producer of natural gas in the world.

Even with today’s lower oil prices, natural gas as a commodity costs one-third as much as oil (a 3:1 ratio) per million Btu of energy supplied. In recent years, the price of oil has exceeded that of natural gas by a factor of 4:1, and by as much as 8:1 when oil was $140 a barrel and natural gas was trading at $3 per million Btu. Perhaps most relevant, the fluctuations in these comparisons have been driven almost entirely by the volatility of oil prices. As the earlier tables clearly demonstrate, natural gas pricing has been relatively consistent and stable and is projected to remain so for decades to come.
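These ratios come from putting both fuels on the same energy basis. Assuming the commonly used approximation of about 5.8 million Btu per barrel of crude oil, the Python sketch below reproduces the 8:1 figure for $140 oil against $3 gas. The function name is my own.

```python
MMBTU_PER_BARREL = 5.8  # approximate energy content of a barrel of crude oil


def oil_to_gas_ratio(oil_usd_per_bbl: float, gas_usd_per_mmbtu: float) -> float:
    """How many times more oil costs than natural gas per million Btu."""
    oil_usd_per_mmbtu = oil_usd_per_bbl / MMBTU_PER_BARREL
    return oil_usd_per_mmbtu / gas_usd_per_mmbtu


print(round(oil_to_gas_ratio(140.0, 3.0), 1))  # 8.0
```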

As you can see from the following chart, the abundance of natural gas is definitely THE key factor in ensuring the continuation of crude resources for generations to come.

Conclusion

As always, I welcome your comments.


Tagged: CNG, Commentary, Education, LNG, natural gas, Oil Reserves, Technology