SMARTS

March 17, 2019

Who was the smartest person in the history of our species? Solomon, Albert Einstein, Jesus, Nikola Tesla, Isaac Newton, Leonardo da Vinci, Stephen Hawking? Who would you name? We’ve had several individuals who broke the curve relative to intelligence. As defined by the Oxford Dictionary of the English Language, IQ is:

“an intelligence test score that is obtained by dividing mental age, which reflects the age-graded level of performance as derived from population norms, by chronological age and multiplying by 100: a score of 100 thus indicates a performance at exactly the normal level for that age group. Abbreviation: IQ”

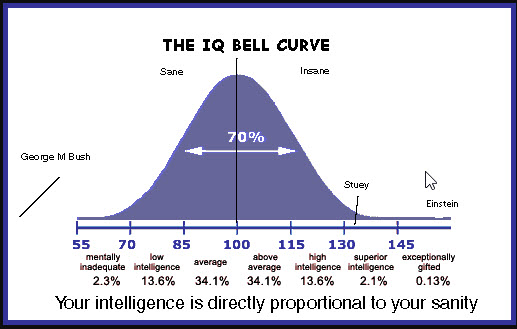

An intelligence quotient, or IQ, is a score derived from one of several standardized tests designed to measure intelligence. The term “IQ” is a translation of the German Intelligenzquotient and was coined by the German psychologist William Stern in 1912. This was a method proposed by Dr. Stern to score early modern children’s intelligence tests such as those developed by Alfred Binet and Théodore Simon in the early twentieth century. Although the term “IQ” is still in use, the scoring of modern IQ tests such as the Wechsler Adult Intelligence Scale is now based on a projection of the subject’s measured rank on the Gaussian bell curve with a center value of one hundred (100) and a standard deviation of fifteen (15). The Stanford-Binet IQ test has a standard deviation of sixteen (16). As you can see from the bell curve graphic, about sixty-eight percent (68%) of the human population has an IQ between eighty-five and one hundred and fifteen. From one hundred and fifteen to one hundred and thirty you are considered to be highly intelligent. Above one hundred and thirty you are exceptionally gifted.
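For readers who like to check the numbers, here is a small Python sketch of the two scoring ideas described above: Stern's original ratio and the modern bell-curve approach. The function names are my own, and the sketch assumes an ideal normal curve with a mean of 100 and a standard deviation of 15, which real test data only approximates.

```python
from statistics import NormalDist

def ratio_iq(mental_age, chronological_age):
    """Stern's original quotient: mental age / chronological age * 100."""
    return 100.0 * mental_age / chronological_age

def share_between(low, high, mean=100.0, sd=15.0):
    """Fraction of an ideal normal population scoring between low and high."""
    curve = NormalDist(mu=mean, sigma=sd)
    return curve.cdf(high) - curve.cdf(low)

# A 10-year-old performing at the level of a typical 12-year-old.
print(ratio_iq(12, 10))

# Share of the population within one standard deviation of the mean.
print(round(share_between(85, 115), 3))

# Share of the population scoring above 130 (1000 stands in for "no upper limit").
print(round(share_between(130, 1000), 3))
```

On this idealized curve, roughly 68 percent of scores land between 85 and 115, and only about 2 percent land above 130.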

What are several qualities of highly intelligent people? Let’s look.

QUALITIES:

- They have a great deal of self-control.

- They are very curious

- They are avid readers

- They are intuitive

- They love learning

- They are adaptable

- They are risk-takers

- They are NOT over-confident

- They are open-minded

- They are somewhat introverted

You probably know individuals who fit this profile. We are going to look at one right now: John von Neumann.

JOHN von NEUMANN:

The Financial Times of London celebrated John von Neumann as “The Man of the Century” on Dec. 24, 1999. The headline hailed him as the “architect of the computer age,” not only the “most striking” person of the 20th century, but its “pattern-card”—the pattern from which modern man, like the newest fashion collection, is cut.

The Financial Times and others characterize von Neumann’s importance for the development of modern thinking by what are termed his three great accomplishments, namely:

(1) Von Neumann is the inventor of the computer. All computers in use today have the “architecture” von Neumann developed, which makes it possible to store the program, together with data, in working memory.

(2) By comparing human intelligence to computers, von Neumann laid the foundation for “Artificial Intelligence,” which is taken to be one of the most important areas of research today.

(3) Von Neumann used his “game theory” to develop a dominant tool for economic analysis, which gained recognition in 1994 when the Nobel Prize for economic sciences was awarded to John C. Harsanyi, John F. Nash, and Reinhard Selten.

John von Neumann, original name János Neumann (born December 28, 1903, Budapest, Hungary; died February 8, 1957, Washington, D.C.), was a Hungarian-born American mathematician. As an adult, he appended von to his surname; the hereditary title had been granted to his father in 1913. Von Neumann grew from child prodigy to one of the world’s foremost mathematicians by his mid-twenties. Important work in set theory inaugurated a career that touched nearly every major branch of mathematics. Von Neumann’s gift for applied mathematics took his work in directions that influenced quantum theory, automata theory, economics, and defense planning. Von Neumann pioneered game theory and, along with Alan Turing and Claude Shannon, was one of the conceptual inventors of the stored-program digital computer.

Von Neumann did exhibit signs of genius in early childhood: he could joke in Classical Greek and, for a family stunt, he could quickly memorize a page from a telephone book and recite its numbers and addresses. Von Neumann learned languages and math from tutors and attended Budapest’s most prestigious secondary school, the Lutheran Gymnasium. The Neumann family fled Béla Kun’s short-lived communist regime in 1919 for a brief and relatively comfortable exile split between Vienna and the Adriatic resort of Abbazia. Upon completion of von Neumann’s secondary schooling in 1921, his father discouraged him from pursuing a career in mathematics, fearing that there was not enough money in the field. As a compromise, von Neumann simultaneously studied chemistry and mathematics. He earned a degree in chemical engineering from the Swiss Federal Institute in Zurich and a doctorate in mathematics (1926) from the University of Budapest.

OK, that’s all well and good, but do we know the IQ of Dr. John von Neumann?

John von Neumann’s IQ is reported to be 190, which is considered super-genius level and puts him in the top 0.1% of the world’s population.

With his marvelous IQ, he wrote about one hundred and fifty (150) published papers in his life: sixty (60) in pure mathematics, twenty (20) in physics, and sixty (60) in applied mathematics, with the remainder on special mathematical or non-mathematical subjects. His last work, an unfinished manuscript written while in the hospital and later published in book form as The Computer and the Brain, gives an indication of the direction of his interests at the time of his death. It discusses how the brain can be viewed as a computing machine. The book is speculative in nature, but discusses several important differences between brains and computers of his day (such as processing speed and parallelism), as well as suggesting directions for future research. Memory is one of the central themes in his book.

I told you he was smart!

OUR SHRINKING WORLD

March 16, 2019

We sometimes do not realize how miniaturization has affected our every-day lives. Electromechanical products have become smaller and smaller with one great example being the cell phone we carry and use every day. Before we look at several examples, let’s get a definition of miniaturization.

Miniaturization is the trend to manufacture ever smaller mechanical, optical and electronic products and devices. Examples include miniaturization of mobile phones, computers and vehicle engine downsizing. In electronics, Moore’s Law predicted that the number of transistors on an integrated circuit for minimum component cost doubles roughly every two years; a figure of eighteen (18) months is also widely quoted. This enables processors to be built in smaller sizes. We can tell that miniaturization refers to the evolution of primarily electronic devices as they become smaller, faster and more efficient. Miniaturization also includes mechanical components, although it sometimes is very difficult to reduce the size of a functioning part.
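To get a feel for what steady doubling means, here is a short Python sketch. It is only an illustration: the function name is mine, the starting count of 2,300 transistors is an assumed figure roughly matching an early-1970s microprocessor, and the doubling period is left as a parameter because both eighteen and twenty-four months are commonly quoted.

```python
def projected_transistors(start_count, years, doubling_months=24.0):
    """Transistor count after `years`, doubling once every `doubling_months`."""
    doublings = (years * 12.0) / doubling_months
    return start_count * 2.0 ** doublings

start = 2300  # assumed starting point, for illustration only

for months in (18, 24):
    count = projected_transistors(start, years=10, doubling_months=months)
    print(f"doubling every {months} months: about {count:,.0f} transistors after 10 years")
```

The gap between the two results shows how sensitive long-range projections are to the doubling period you assume.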

The revolution of electronic miniaturization began during World War II and continues to change the world today. Miniaturization of computer technology has been the source of a seemingly endless battle between technology giants the world over. The market has become so competitive that the companies developing microprocessors are constantly working towards producing a smaller microchip than that of their competitors, and as a result, computers become obsolete almost as soon as they are commercialized. The concept that underlies technological miniaturization is “the smaller the better”; smaller is faster, smaller is cheaper, smaller is more profitable. It is not just companies that profit from miniaturization advances; entire nations reap rewards through the capitalization of new developments. Devices such as personal computers, cellular telephones, portable radios, and camcorders have created massive markets through miniaturization, and brought billions of dollars to the countries where they were designed and built. In the 21st century, almost every electronic device has a computer chip inside. The goal of miniaturization is to make these devices smaller and more powerful, and thus available everywhere. It has been said, however, that the time for continued miniaturization is limited: the smaller the computer chip gets, the more difficult it becomes to shrink the components that fit on the chip. I personally do not think this is the case, but I am a mechanical engineer and not an electronic or electrical engineer. I use the products but I do not develop the products.

The world of miniaturization would not be possible at all if it were not for semiconductor technology. Devices made of semiconductors, notably silicon, are essential components of most electronic circuits. A process of lithography is used to create circuitry layered over a silicon substrate. A transistor is a semiconductor device with three connections capable of amplification in addition to rectification. Miniaturization entails increasing the number of transistors that can be held on a single chip, while shrinking the size of the chip. As the surface area of a chip decreases, the task of designing newer and faster circuits becomes more difficult, as there is less room left for the components that make the computer run faster and store more data.

There is no better example of miniaturization than cell phone development. The digital picture you see below will give some indication as to the development of the cell phone and how the physical size has decreased over the years. The cell phone to the far left is where it all started. To the right, where we are today. If you look at the modern-day cell phone you see a remarkable difference in size AND ability to communicate. This is all possible due to shrinking computer chips.

One of the most striking changes due to miniaturization is the application of digital equipment in a modern-day aircraft cockpit. The JPEG below is a mockup of an actual Convair 880. With analog gauges, an engineering panel and an exterior shell, this cockpit reads 1960/1970 style design and fabrication. In fact, this is the actual cockpit mockup that was used in the classic comedy film “Airplane”.

Now, let us take a look at a digital cockpit. Notice any differences? It is cleaner, with fewer instruments. The GUI or graphical user interface can take the place of numerous dials and gauges that clutter and possibly confuse a pilot’s vision.

I think you have the picture, so I would challenge you to take a look this upcoming week at those electromechanical items we take for granted and discover how they have been reduced in size. You just may be surprised.

TELECOMMUTING

March 13, 2019

Our two oldest granddaughters have new jobs. Both, believe it or not, telecommute. That’s right, they do NOT drive to work. They work from home—every day of the week and sometimes on Saturday. Both ladies work for companies not remotely close to their homes in Atlanta. The headquarters for these companies are hundreds of miles away and in other states.

Even the word is fairly new! A few years ago, there was no such “animal” as telecommuting and today it’s considered by progressive companies as “kosher”. Companies such as AT&T, Blue Cross-Blue Shield, Southwest Airlines, The Home Shopping Network, Amazon and even Home Depot allow selected employees to “mail it in”. The interesting thing: efficiency and productivity are not lessened and, in most cases, improve. Let’s look at several very interesting facts regarding this trend in conducting business. This information comes from a website called “Flexjobs.com”.

- Three point three (3.3) million full-time professionals, excluding volunteers and the self-employed, consider their home as their primary workplace.

- Telecommuters save between six hundred ($600) and one thousand ($1,000) dollars on annual dry-cleaning expenses, save more than eight hundred ($800) dollars on coffee and lunch expenses, enjoy a tax break of about seven hundred and fifty ($750) dollars, save five hundred and ninety ($590) dollars on their professional wardrobe, save one thousand one hundred and twenty ($1,120) dollars on gas, and avoid over three hundred ($300) dollars in car maintenance costs.

- Telecommuters save two hundred and sixty (260) hours by not commuting on a daily basis.

- Work-from-home programs help businesses save about two thousand ($2,000) dollars per person per year and reduce turnover by fifty (50) percent.

- The typical telecommuter is a college graduate about forty-nine (49) years old who works for a company with fewer than one hundred (100) employees.

- Seventy-three percent (73%) of remote workers are satisfied with the company they work for and feel that their managers are concerned about their well-being and morale.

- For every one real work-from-home job, there are sixty job scams.

- Most telecommuters (53 percent) work more than forty (40) hours per week.

- Telecommuters work harder to create a friendly, cooperative, and positive work environment for themselves and their teams.

- Work-from-home professionals (82 percent) were able to lower their stress levels by working remotely. Eighty (80) percent have improved morale, seventy (70) percent increase productivity, and sixty-nine (69) percent miss fewer days from work.

- Half of the U.S. workforce have jobs that are compatible with remote work.

- Remote workers enjoy more sleep, eat healthier, and get more physical exercise.

- Telecommuters are fifty (50) percent less likely to quit their jobs.

- When looking at in-office workers and telecommuters, forty-five (45) percent of telecommuters love their job, while twenty-four (24) percent of in-office workers love their jobs.

- Four in ten (10) freelancers have completed projects completely from home.
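Just for fun, here is a quick Python tally of the annual dollar savings quoted in the list above. It is a rough sketch only: the names are my own, the dry-cleaning figure is kept as a low/high range, and the “more than” and “over” amounts are treated as the stated figure, so the totals are on the conservative side.

```python
# Annual savings quoted in the list above, in US dollars, stored as (low, high).
savings = {
    "dry cleaning": (600, 1000),
    "coffee and lunch": (800, 800),
    "tax break": (750, 750),
    "professional wardrobe": (590, 590),
    "gas": (1120, 1120),
    "car maintenance": (300, 300),
}

def total_savings(items):
    """Add up the low and high ends of every savings category."""
    low = sum(lo for lo, _ in items.values())
    high = sum(hi for _, hi in items.values())
    return low, high

low, high = total_savings(savings)
print(f"Estimated annual savings: ${low:,} to ${high:,}")
```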

OK, what are the individual and company benefits resulting from this activity? These might be as follows:

- Significant reduction in energy usage by company.

- Reduction in individual carbon footprint. (It has been estimated that 9,500 pounds of CO2 per year per person could be avoided if the employee works from home. Most of this is avoidance of cranking up the “tin lizzie”.)

- Reduction in office expenses in the form of space, desk, chair, tables, lighting, telephone equipment, and computer connections, etc.

- Reduction in the number of sick days taken due to illnesses from communicable diseases.

- Fewer “in-office” distractions allowing for greater focus on work. These might include: 1.) Monday morning congregation at the water cooler to discuss the game on Saturday, 2.) Birthday parties, 3.) Mary Kay meetings, etc. You get the picture!

In the state where I live (Tennessee), the number of telecommuters has risen eighteen (18) percent relative to 2011. 489,000 adults across Tennessee work from home on a regular basis. Most of these employees do NOT work for themselves in family-owned businesses but for large companies that allow the activity. Also, many of these employees work for out-of-state concerns, thus creating ideal situations for both worker and employer. At Blue Cross of Tennessee, one in six individuals goes to work by staying at home. Working at home does not mean there is no personal communication with supervisors and peers. These meetings are factored into each work week, with some required at least on a monthly basis.

Four point three (4.3) million employees (3.2% of the workforce) now work from home at least half the time. Regular work-at-home, among the non-self-employed population, has grown by 140% since 2005, nearly 10x faster than the rest of the workforce or the self-employed. Of course, this marvelous transition has only been made possible by internet connections and, in most cases, the computer technology at home equals or surpasses that found at “work”. We all know this trend will continue, as well it should.

I welcome your comments and would love to know your “telecommuting” stories. Please send responses to: bobjengr@comcast.net.

ARTIFICIAL INTELLIGENCE

February 12, 2019

Just what do we know about Artificial Intelligence or AI? Portions of this post were taken from Forbes Magazine.

John McCarthy first coined the term artificial intelligence in 1956 when he invited a group of researchers from a variety of disciplines including language simulation, neuron nets, complexity theory and more to a summer workshop called the Dartmouth Summer Research Project on Artificial Intelligence to discuss what would ultimately become the field of AI. At that time, the researchers came together to clarify and develop the concepts around “thinking machines” which up to this point had been quite divergent. McCarthy is said to have picked the name artificial intelligence for its neutrality; to avoid highlighting one of the tracks being pursued at the time for the field of “thinking machines” that included cybernetics, automata theory and complex information processing. The proposal for the conference said, “The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

Today, modern dictionary definitions focus on AI being a sub-field of computer science and how machines can imitate human intelligence (being human-like rather than becoming human). The English Oxford Living Dictionary gives this definition: “The theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

Merriam-Webster defines artificial intelligence this way:

- A branch of computer science dealing with the simulation of intelligent behavior in computers.

- The capability of a machine to imitate intelligent human behavior.

About thirty (30) years ago, a professor at the Harvard Business School (Dr. Shoshana Zuboff) articulated three laws based on research into the consequences that widespread computing would have on society. Dr. Zuboff had degrees in philosophy and social psychology so she was definitely ahead of her time relative to the unknown field of AI. In her book “In the Age of the Smart Machine: The Future of Work and Power”, she postulated the following three laws:

- Everything that can be automated will be automated

- Everything that can be informated will be informated. (NOTE: Informated was coined by Zuboff to describe the process of turning descriptions and measurements of activities, events and objects into information.)

- In the absence of countervailing restrictions and sanctions, every digital application that can be used for surveillance and control will be used for surveillance and control, irrespective of its originating intention.

At that time there was definitely a significant lack of computing power. That limitation has largely disappeared and is no longer the great hindrance to AI advancement that it certainly once was.

WHERE ARE WE?

In a recent speech, Russian president Vladimir Putin made an incredibly prescient statement: “Artificial intelligence is the future, not only for Russia, but for all of humankind.” He went on to highlight both the risks and rewards of AI and concluded by declaring that whatever country comes to dominate this technology will be the “ruler of the world.”

As someone who closely monitors global events and studies emerging technologies, I think Putin’s lofty rhetoric is entirely appropriate. Funding for global AI startups has grown at a sixty percent (60%) compound annual growth rate since 2010. More significantly, the international community is actively discussing the influence AI will exert over both global cooperation and national strength. In fact, the United Arab Emirates just recently appointed its first state minister responsible for AI.
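A sixty percent compound annual growth rate is easier to appreciate with a little arithmetic. The Python sketch below is only an illustration with names of my own choosing, and the seven-year window (2010 to 2017) is an assumption since the end year is not stated above.

```python
def compound_growth(start_value, annual_rate, years):
    """Value after growing at `annual_rate` (0.60 means 60%) for `years` years."""
    return start_value * (1.0 + annual_rate) ** years

def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value and an end value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Assumed window: seven years of growth starting from a normalized value of 1.
multiple = compound_growth(1.0, 0.60, 7)
print(f"60% a year for 7 years multiplies funding about {multiple:.1f}x")
print(f"implied annual rate: {cagr(1.0, multiple, 7):.0%}")
```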

Automation and digitalization have already had a radical effect on international systems and structures. And considering that this technology is still in its infancy, every new development will only deepen the effects. The question is: Which countries will lead the way, and which ones will follow behind?

If we look at the criteria necessary for advancement, there are seven countries in the best position to rule the world with the help of AI. These countries are as follows:

- Russia

- The United States of America

- China

- Japan

- Estonia

- Israel

- Canada

The United States and China are currently in the best position to reap the rewards of AI. These countries have the infrastructure, innovations and initiative necessary to evolve AI into something with broadly shared benefits. In fact, China expects to dominate AI globally by 2030. The United States could still maintain its lead if it makes AI a top priority and makes the necessary investments while also pulling together all required government and private sector resources.

Ultimately, however, winning and losing will not be determined by which country gains the most growth through AI. It will be determined by how the entire global community chooses to leverage AI — as a tool of war or as a tool of progress.

Ideally, the country that uses AI to rule the world will do it through leadership and cooperation rather than automated domination.

CONCLUSIONS: We dare not neglect this disruptive technology. We cannot afford to lose this battle.

COMPUTER SIMULATION

January 20, 2019

More and more engineers, systems analysts, biochemists, city planners, medical practitioners, and individuals in entertainment fields are moving towards computer simulation. Let’s take a quick look at simulation, then we will discover several examples of how very powerful this technology can be.

WHAT IS COMPUTER SIMULATION?

Simulation modelling is an excellent tool for analyzing and optimizing dynamic processes. Specifically, when mathematical optimization of complex systems becomes infeasible, and when conducting experiments within real systems is too expensive, time consuming, or dangerous, simulation becomes a powerful tool. The aim of simulation is to support objective decision making by means of dynamic analysis, to enable managers to safely plan their operations, and to save costs.

A computer simulation or a computer model is a computer program that attempts to simulate an abstract model of a particular system. … Computer simulations build on and are useful adjuncts to purely mathematical models in science, technology and entertainment.

Computer simulations have become a useful part of mathematical modelling of many natural systems in physics, chemistry and biology, human systems in economics, psychology, and social science and in the process of engineering new technology, to gain insight into the operation of those systems. They are also widely used in the entertainment fields.

Traditionally, the formal modeling of systems has been possible using mathematical models, which attempt to find analytical solutions to problems, enabling the prediction of the behavior of the system from a set of parameters and initial conditions. The word prediction is a very important word in the overall process. One very critical part of the predictive process is designating the parameters properly. This includes not only the upper and lower specifications but also the parameters that define intermediate processes.

The reliability of computer simulations, and the trust people put in them, depend on the validity of the simulation model. The degree of trust is directly related to the software itself and the reputation of the company producing the software. There will be considerably more in this course regarding vendors providing software to companies wishing to simulate processes and solve complex problems.

Computer simulations find use in the study of dynamic behavior in an environment that may be difficult or dangerous to implement in real life. For example, a nuclear blast may be represented with a mathematical model that takes into consideration various elements such as velocity, heat and radioactive emissions. Additionally, one may implement changes to the equation by changing certain other variables, like the amount of fissionable material used in the blast. Another application involves predictive efforts relative to weather systems. The mathematics involved in these determinations is significantly complex and usually involves a branch of math called “chaos theory”.
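Chaos theory sounds exotic, but the core idea, that tiny changes in the starting conditions can lead to very different outcomes, shows up even in a one-line equation. The Python sketch below uses the logistic map, a standard textbook toy that I have swapped in for illustration; it is not a weather model, and the starting values are arbitrary.

```python
def logistic_orbit(x0, r=4.0, steps=40):
    """Iterate x -> r * x * (1 - x), a standard toy model of chaotic behavior."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

a = logistic_orbit(0.200000)
b = logistic_orbit(0.200001)  # starting point differs by one part in a million

# Print how far apart the two runs are at selected steps.
for step in (0, 10, 20, 30, 40):
    print(step, round(abs(a[step] - b[step]), 6))
```

The two runs start out practically identical and then drift apart until they have nothing in common, which is why long-range forecasts of chaotic systems are so difficult.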

Simulations largely help in determining behaviors when individual components of a system are altered. Simulations can also be used in engineering to determine potential effects, such as that of river systems for the construction of dams. Some companies call these behaviors “what-if” scenarios because they allow the engineer or scientist to apply differing parameters to discern cause-effect interaction.
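Here is what a very small “what-if” study might look like in Python. Everything in it is hypothetical: the reservoir, its capacity, and the inflow and release figures are made-up numbers in arbitrary units, chosen only to show how changing one parameter changes the outcome.

```python
def simulate_reservoir(inflow, release, capacity=100.0, start=50.0, days=30):
    """Daily water balance; returns how many days the reservoir spills or runs dry."""
    level = start
    problem_days = 0
    for _ in range(days):
        level += inflow - release
        if level > capacity:      # more water than the reservoir can hold
            level = capacity
            problem_days += 1
        elif level < 0.0:         # demand exceeded the water available
            level = 0.0
            problem_days += 1
    return problem_days

# What if we release 2, 4, 6 or 8 units per day against a steady inflow of 5?
for release in (2.0, 4.0, 6.0, 8.0):
    print(release, simulate_reservoir(inflow=5.0, release=release))
```

Each pass through the loop is one “what-if”: the model stays the same and only the parameter under study changes.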

One great advantage a computer simulation has over a mathematical model is allowing a visual representation of events and timeline. You can actually see the action and chain of events with simulation and investigate the parameters for acceptance. You can examine the limits of acceptability using simulation. All components and assemblies have upper and lower specification limits and must perform within those limits.

Computer simulation is the discipline of designing a model of an actual or theoretical physical system, executing the model on a digital computer, and analyzing the execution output. Simulation embodies the principle of “learning by doing” — to learn about the system we must first build a model of some sort and then operate the model. The use of simulation is an activity that is as natural as a child who role plays. Children understand the world around them by simulating (with toys and figurines) most of their interactions with other people, animals and objects. As adults, we lose some of this childlike behavior but recapture it later on through computer simulation. To understand reality and all of its complexity, we must build artificial objects and dynamically act out roles with them. Computer simulation is the electronic equivalent of this type of role playing and it serves to drive synthetic environments and virtual worlds. Within the overall task of simulation, there are three primary sub-fields: model design, model execution and model analysis.
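The three sub-fields can be seen in even a tiny example. The Python sketch below is my own illustration, not taken from any commercial package: it models a machined shaft whose diameter varies randomly, runs the model many times, and then analyzes how many virtual parts fall inside the specification limits. The nominal size, the variation, and the limits are all assumed values.

```python
import random

# 1. Model design: a shaft diameter varies randomly around its nominal value.
def make_part(rng, nominal=10.0, sigma=0.02):
    return rng.gauss(nominal, sigma)

# 2. Model execution: "manufacture" many virtual parts.
def run_model(trials=10_000, seed=1):
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [make_part(rng) for _ in range(trials)]

# 3. Model analysis: what share falls inside the specification limits?
def share_in_spec(parts, lower=9.94, upper=10.06):
    good = sum(1 for d in parts if lower <= d <= upper)
    return good / len(parts)

parts = run_model()
print(f"{share_in_spec(parts):.2%} of simulated parts are within limits")
```

Tightening the limits or increasing the variation and running again is a quick way to see how sensitive the yield is to each assumption.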

REAL-WORLD SIMULATION:

The following examples are taken from computer screens representing real-world situations and/or problems that need solutions. As mentioned earlier, “what-ifs” may be realized by animating the computer model, providing cause-effect and responses to desired inputs. Let’s take a look.

A great host of mechanical and structural problems may be solved by using computer simulation. The example above shows how the diameter of two matching holes may be affected by applying heat to the bracket.
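A full simulation package would solve this with finite-element analysis, but a first-order hand calculation gives the flavor. The Python sketch below uses the simple linear expansion formula; the expansion coefficients are assumed typical handbook values, and the 10 mm hole, steel pin, and 80-degree temperature rise are hypothetical numbers of my own.

```python
def expanded_diameter(diameter_mm, alpha_per_c, delta_t_c):
    """Linear thermal expansion: D_new = D * (1 + alpha * dT)."""
    return diameter_mm * (1.0 + alpha_per_c * delta_t_c)

ALPHA_ALUMINUM = 23e-6  # per degree C, assumed typical handbook value
ALPHA_STEEL = 12e-6     # per degree C, assumed typical handbook value

# Hypothetical case: a hole in an aluminum bracket and a steel pin of the
# same nominal size, both heated by 80 degrees C.
hole = expanded_diameter(10.0, ALPHA_ALUMINUM, 80.0)
pin = expanded_diameter(10.0, ALPHA_STEEL, 80.0)
print(f"hole: {hole:.4f} mm, pin: {pin:.4f} mm, clearance gained: {hole - pin:.4f} mm")
```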

The Newtonian and non-Newtonian flow of fluids, i.e. liquids and gases, has always been a subject of concern within piping systems. Flow related to pressure and temperature may be approximated by simulation.

Electromagnetics is an extremely complex field. The digital image above strives to show how a magnetic field reacts to applied voltage.

Chemical engineers are very concerned with reaction time when chemicals are mixed. One example might be the ignition time when an oxidizer comes in contact with fuel.

Acoustics, or how sound propagates through a physical device or structure, can also be simulated.

The transfer of heat from a warmer surface to a colder surface has always come into question. Simulation programs are extremely valuable in visualizing this transfer.

Equation-based modeling can be simulated showing how a structure, in this case a metal plate, can be affected when forces are applied.

In addition to computer simulation, we have AR, or augmented reality, and VR, or virtual reality. Those subjects are fascinating but will require another post for another day. Hope you enjoy this one.

OKAY, TELL ME THE DIFFERENCE

March 21, 2018

Portions of this post are taken from the January 2018 article written by John Lewis of “Vision Systems”.

I feel there is considerable confusion between Artificial Intelligence (AI), Machine Learning and Deep Learning. Seemingly, we use these terms and phrases interchangeably, yet they certainly have different meanings. Natural intelligence is the intelligence displayed by humans and certain animals. Why don’t we do the numbers:

AI:

Artificial Intelligence refers to machines mimicking human cognitive functions such as problem solving or learning. When a machine understands human speech or can compete with humans in a game of chess, AI applies. There are several surprising opinions about AI as follows:

- Sixty-one percent (61%) of people see artificial intelligence making the world a better place

- Fifty-seven percent (57%) would prefer an AI doctor perform an eye exam

- Fifty-five percent (55%) would trust an autonomous car. (I’m really not there as yet.)

The term artificial intelligence was coined in 1956, but AI has become more popular today thanks to increased data volumes, advanced algorithms, and improvements in computing power and storage.

Early AI research in the 1950s explored topics like problem solving and symbolic methods. In the 1960s, the US Department of Defense took interest in this type of work and began training computers to mimic basic human reasoning. For example, the Defense Advanced Research Projects Agency (DARPA) completed street mapping projects in the 1970s. And DARPA produced intelligent personal assistants in 2003, long before Siri, Alexa or Cortana were household names. This early work paved the way for the automation and formal reasoning that we see in computers today, including decision support systems and smart search systems that can be designed to complement and augment human abilities.

While Hollywood movies and science fiction novels depict AI as human-like robots that take over the world, the current evolution of AI technologies isn’t that scary – or quite that smart. Instead, AI has evolved to provide many specific benefits in every industry.

MACHINE LEARNING:

Machine Learning is the current state-of-the-art application of AI and largely responsible for its recent rapid growth. Based upon the idea of giving machines access to data so that they can learn for themselves, machine learning has been enabled by the internet, and the associated rise in digital information being generated, stored and made available for analysis.

Machine learning is the science of getting computers to act without being explicitly programmed. In the past decade, machine learning has given us self-driving cars, practical speech recognition, effective web search, and a vastly improved understanding of the human genome. Machine learning is so pervasive today that you probably use it dozens of times a day without knowing it. Many researchers also think it is the best way to make progress towards human-level understanding. Machine learning is an application of artificial intelligence (AI) that provides systems the ability to automatically learn and improve from experience without being explicitly programmed. Machine learning focuses on the development of computer programs that can access data and use it to learn for themselves.
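To make “learning from data without being explicitly programmed” concrete, here is a bare-bones Python sketch. It is my own toy example, not any particular product's method: the program is never told the rule behind the made-up data (score = 5 × hours + 50); it finds the rule by repeatedly nudging two numbers to shrink its prediction error, a technique known as gradient descent.

```python
# Made-up training data: (hours studied, exam score) pairs.
data = [(1, 55), (2, 60), (3, 65), (4, 70), (5, 75)]

def train(samples, learning_rate=0.01, epochs=5000):
    """Fit score = w * hours + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(data)
print(f"learned rule: score = {w:.2f} * hours + {b:.2f}")
print(f"prediction for 6 hours: {w * 6 + b:.1f}")
```

Nobody typed the slope or the intercept into the program; it recovered them from the examples alone, which is the whole point.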

DEEP LEARNING:

Deep Learning concentrates on a subset of machine-learning techniques, with the term “deep” generally referring to the number of hidden layers in the deep neural network. While a conventional neural network may contain only a few hidden layers, a deep network may have tens or hundreds of layers. In deep learning, a computer model learns to perform classification tasks directly from text, sound or image data. In the case of images, deep learning requires substantial computing power and involves feeding large amounts of labeled data through a multi-layer neural network architecture to create a model that can classify the objects contained within the image.
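The difference between a conventional network and a deep one is mostly a matter of how many hidden layers the data passes through. The Python sketch below is a toy of my own: the weights are random and untrained, the layer sizes are arbitrary, and there are no bias terms, so it shows only the structure and not a working classifier.

```python
import math
import random

def make_network(layer_sizes, seed=0):
    """Random weights for a fully connected network; sizes include input and output."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]

def forward(network, inputs):
    """Pass the inputs through every layer, applying tanh at each neuron."""
    activations = inputs
    for layer in network:
        activations = [
            math.tanh(sum(w * a for w, a in zip(neuron, activations)))
            for neuron in layer
        ]
    return activations

shallow = make_network([4, 8, 3])            # one hidden layer
deep = make_network([4] + [8] * 20 + [3])    # twenty hidden layers

print(len(shallow) - 1, len(deep) - 1)       # number of hidden layers in each
print(forward(deep, [0.5, -0.2, 0.1, 0.9]))  # three output values
```

Training would adjust all of those weights using labeled data, and that is where the substantial computing power comes in.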

CONCLUSIONS:

Brave new world we are living in. Someone said that AI is definitely the future of computing power and eventually robotic systems that could possibly replace humans. I just hope the programmers adhere to Dr. Isaac Asimov’s three laws:

- The First Law of Robotics: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- The Second Law of Robotics: A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- The Third Law of Robotics: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

With those words, science-fiction author Isaac Asimov changed how the world saw robots. Where they had largely been Frankenstein-esque, metal monsters in the pulp magazines, Asimov saw the potential for robotics as more domestic: as a labor-saving device; the ultimate worker. In doing so, he continued a literary tradition of speculative tales: What happens when humanity remakes itself in its image?

As always, I welcome your comments.

OPEN SOURCE SOFTWARE AND WHY YOU SHOULD (MUST) AVOID AT ALL COSTS

December 2, 2017

OKAY first, let us define “OPEN SOURCE SOFTWARE” as follows:

Open-source software (OSS) is computer software with its source-code made available with a license in which the copyright holder provides the rights to study, change, and distribute the software to anyone and for any purpose. Open-source software may be developed in a collaborative public manner. The benefits include:

- COST—Generally, open source software is free.

- FLEXIBILITY—Computer specialists can alter the software to fit their needs for the program(s) they are writing code for.

- FREEDOM—Generally, no issues with patents or copyrights.

- SECURITY—The one issue with security is using open source software and embedded code due to compatibility issues.

- ACCOUNTABILITY—Once again, there are no issues with accountability and producers of the code are known.

A very detailed article written by Jacob Beningo has seven (7) excellent points for avoiding, like the plague, open source software. Given below are his arguments.

REASON 1—LACKS TRACEABLE SOFTWARE DEVELOPMENT LIFE CYCLE–Open source software usually starts with an ingenious developer working out of their garage or basement hoping to create code that is very functional and useful. Eventually multiple developers with spare time on their hands get involved. The software evolves but it doesn’t really follow a traceable design cycle or even follow best practices. These various developers implement what they want or push the code in the direction that meets their needs. The result is software that works in limited situations and circumstances, and users need to cross their fingers and pray that their needs and conditions match them.

REASON 2—DESIGNED FOR FUNCTIONALITY AND NOT ROBUSTNESS–Open source software is often written for functionality only: accessing and writing to an SD card, for example, or communicating over a USB connection. The issue here is that while the code functions, it generally is not robust and is not designed to anticipate issues; it assumes everything will go as expected. This is rarely the case, and while the software is free, developers can very quickly find that their open source software is just functional and can’t stand up to real-world pressures. Developers will find themselves having to dig through unknown terrain trying to figure out how best to improve or handle errors that weren’t expected by the original developers.

REASON 3—ACCIDENTALLY EXPOSING CONFIDENTIAL INTELLECTUAL PROPERTY–There are several different licensing schemes that open source software developers use. Some really do give away the farm; however, there are also licenses that require any modifications or even associated software to be released as open source. If close attention is not being paid, a developer could find themselves having to release confidential code and algorithms to the world. The “free” software has then cost the company, either by revealing its code or, if the company wants to be protected, by spending money on attorney fees to make sure it isn’t giving it all away.

REASON 4—LACKING AUTOMATED AND/OR MANUAL TESTING–A formalized testing process, especially automated testing, is critical to ensuring that a code base is robust and has sufficient quality to meet its needs. I’ve seen open source Python projects that include automated testing, which is encouraging, but for low level firmware and embedded systems we seem to still lag behind the rest of the software industry. Without automated tests, we have no way to know if integrating that open source component broke something in it that we won’t notice until we go to production.

REASON 5—POOR DOCUMENTATION OR DOCUMENTATION THAT IS LACKING COMPLETELY–Documentation has been getting better among open source projects that have been around for a long time or that have strong commercial backing. Smaller projects though that are driven by individuals tend to have little to no documentation. If the open source code doesn’t have documentation, putting it into practice or debugging it is going to be a nightmare and more expensive than just getting commercial or industrial-grade software.

REASON 6—REAL-TIME SUPPORT IS LACKING–There are few things more frustrating than doing everything you can to get something to work or debugged and you just hit the wall. When this happens, the best way to resolve the issue is to get support. The problem with open source is that there is no guarantee that you will get the support you need in a timely manner to resolve any issues. Sure, there are forums and social media to request help but those are manned by people giving up their free time to help solve problems. If they don’t have the time to dig into a problem, or the problem isn’t interesting or is too complex, then the developer is on their own.

REASON 7—INTEGRATION IS NEVER AS EASY AS IT SEEMS–The website was found; the demonstration video was awesome. This is the component to use. Look at how easy it is! The source is downloaded and the integration begins. Months later, integration is still going on. What appeared easy quickly turned complex because the same platform or toolchain wasn’t being used. “Minor” modifications had to be made. The rabbit hole just keeps getting deeper but after this much time has been sunk into the integration, it cannot be for naught.

CONCLUSIONS:

I personally am by no means completely against open source software. It’s been extremely helpful and beneficial in certain circumstances. I have used open source, namely JAVA, as embedded software for several programs I have written. It’s important though not to just use software because it’s free. Developers need to recognize their requirements, needs, and the level of robustness that is required for their product and appropriately develop or source software that meets those needs rather than blindly selecting software because it’s “free.” IN OTHER WORDS—BE CAREFUL!

AMAZING GRACE

October 3, 2017

There are many people responsible for the revolutionary development and commercialization of the modern-day computer. Just a few of those names are given below, many of whom you have probably never heard of. Let’s take a look.

COMPUTER REVOLUTIONARIES:

- Howard Aiken–Aiken was the original conceptual designer behind the Harvard Mark I computer in 1944.

- Grace Murray Hopper–Hopper helped popularize the term “debugging” after an actual moth was removed from a computer in 1947. Her ideas about machine-independent programming led to the development of COBOL, one of the first modern programming languages. On top of it all, the Navy destroyer USS Hopper is named after her.

- Ken Thompson and Dennis Ritchie–These guys invented Unix in 1969, the importance of which CANNOT be overstated. Consider this: your fancy Apple computer relies almost entirely on their work.

- Doug and Gary Carlston–This team of brothers co-founded Brøderbund Software, a successful gaming company that operated from 1980 to 1999. In that time, they were responsible for churning out or marketing revolutionary computer games like Myst and Prince of Persia, helping bring computing into the mainstream.

- Ken and Roberta Williams–This husband and wife team founded On-Line Systems in 1979, which later became Sierra Online. The company was a leader in producing graphical adventure games throughout the advent of personal computing.

- Seymour Cray–Cray was a supercomputer architect whose computers were the fastest in the world for many decades. He set the standard for modern supercomputing.

- Marvin Minsky–Minsky was a professor at MIT and oversaw the AI Lab, a hotspot of hacker activity, where he let prominent programmers like Richard Stallman run free. Were it not for his open-mindedness, programming skill, and ability to recognize that important things were taking place, the AI Lab wouldn’t be remembered as the talent incubator that it is.

- Bob Albrecht–He founded the People’s Computer Company and developed a sincere passion for encouraging children to get involved with computing. He’s responsible for ushering in innumerable new young programmers and is one of the first modern technology evangelists.

- Steve Dompier–At a time when computer speech was just barely being realized, Dompier made his computer sing. It was a trick he unveiled at the first meeting of the Homebrew Computer Club in 1975.

- John McCarthy–McCarthy invented Lisp, the second-oldest high-level programming language that’s still in use to this day. He’s also responsible for bringing mathematical logic into the world of artificial intelligence — letting computers “think” by way of math.

- Doug Engelbart–Engelbart is most noted for inventing the computer mouse in the mid-1960s, but he’s made numerous other contributions to the computing world. He created early GUIs and was even a member of the team that developed the now-ubiquitous hypertext.

- Ivan Sutherland–Sutherland received the prestigious Turing Award in 1988 for inventing Sketchpad, the predecessor to the type of graphical user interfaces we use every day on our own computers.

- Tim Paterson–He wrote QDOS, an operating system that he sold to Bill Gates in 1980. Gates rebranded it as MS-DOS, selling it to the point that it became the most widely-used operating system of the day. (How ‘bout them apples?)

- Dan Bricklin–He’s “The Father of the Spreadsheet.” Working in 1979 with Bob Frankston, he created VisiCalc, a predecessor to Microsoft Excel. It was the killer app of the time — people were buying computers just to run VisiCalc.

- Bob Kahn and Vint Cerf–Prolific internet pioneers, these two teamed up to build the Transmission Control Protocol and the Internet Protocol, better known as TCP/IP. These are the fundamental communication technologies at the heart of the Internet.

- Niklaus Wirth–Wirth designed several programming languages, but is best known for creating Pascal. He won a Turing Award in 1984 for “developing a sequence of innovative computer languages.”

ADMIRAL GRACE MURRAY HOPPER:

At this point, I want to highlight Admiral Grace Murray Hopper, or “Amazing Grace” as she is called in the computer world and the United States Navy. Admiral Hopper’s picture is shown below.

Born in New York City in 1906, Grace Hopper joined the U.S. Navy during World War II and was assigned to program the Mark I computer. She continued to work in computing after the war, leading the team that created the first computer language compiler, which led to the popular COBOL language. She resumed active naval service at the age of 60, becoming a rear admiral before retiring in 1986. Hopper died in Virginia in 1992.

Born Grace Brewster Murray in New York City on December 9, 1906, Grace Hopper studied math and physics at Vassar College. After graduating from Vassar in 1928, she proceeded to Yale University, where, in 1930, she received a master’s degree in mathematics. That same year, she married Vincent Foster Hopper, becoming Grace Hopper (a name that she kept even after the couple’s 1945 divorce). Starting in 1931, Hopper began teaching at Vassar while also continuing to study at Yale, where she earned a Ph.D. in mathematics in 1934—becoming one of the first few women to earn such a degree.

After the war, Hopper remained with the Navy as a reserve officer. As a research fellow at Harvard, she worked with the Mark II and Mark III computers. She was at Harvard when a moth was found to have shorted out the Mark II, and is sometimes given credit for the invention of the term “computer bug”—though she didn’t actually author the term, she did help popularize it.

Hopper retired from the Naval Reserve in 1966, but her pioneering computer work meant that she was recalled to active duty—at the age of 60—to tackle standardizing communication between different computer languages. She would remain with the Navy for 19 years. When she retired in 1986, at age 79, she was a rear admiral as well as the oldest serving officer in the service.

Saying that she would be “bored stiff” if she stopped working entirely, Hopper took another job post-retirement and stayed in the computer industry for several more years. She was awarded the National Medal of Technology in 1991—becoming the first female individual recipient of the honor. At the age of 85, she died in Arlington, Virginia, on January 1, 1992. She was laid to rest in the Arlington National Cemetery.

CONCLUSIONS:

In 1997, the guided missile destroyer USS Hopper was commissioned by the Navy in San Francisco. In 2004, the University of Missouri honored Hopper with a computer museum on its campus, dubbed “Grace’s Place.” On display are early computers and computer components to educate visitors on the evolution of the technology. In addition to her programming accomplishments, Hopper’s legacy includes encouraging young people to learn how to program. The Grace Hopper Celebration of Women in Computing Conference is a technical conference that encourages women to become part of the world of computing, while the Association for Computing Machinery offers a Grace Murray Hopper Award. Additionally, on her birthday in 2013, Hopper was remembered with a “Google Doodle.”

In 2016, Hopper was posthumously honored with the Presidential Medal of Freedom by Barack Obama.

Who said women could not “do” STEM (Science, Technology, Engineering and Mathematics)?

ENGINEERING SALARIES AND JOBS KEEP EXPANDING—2019

August 8, 2019

We all love to see where we are relative to others within our same profession, especially when it comes to salaries. Are we ahead—behind—staying even? That is one question whose answer is good to know. Also, and possibly more importantly, where will the engineering profession be in a few years? Is this a profession I would recommend to my son or daughter? Let’s take a look at the engineering profession to discover where we are and where we are going. All “numbers” come from the Bureau of Labor Statistics (BLS). Graphics are taken from Design News Daily. I’m going to describe the individual disciplines with digitals. I think that makes more sense.

The BLS projects growth in all engineering jobs through the middle of the next decade. For the engineering profession as a whole, BLS projects 194,300 new jobs during the coming ten (10) years. The total number of current engineering jobs is 1,681,000. I think that’s low compared to the number of engineers required. (NOTE: I may state right now we are talking about degreed engineers; i.e. BS, MS, and PhD engineers.)
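To put those two BLS figures in perspective, here is a quick back-of-the-envelope calculation in Python. The variable names are my own, and the per-year figure assumes the new jobs are spread evenly across the ten years, which is only a simplification.

```python
# Figures quoted above from the BLS
current_jobs = 1_681_000
projected_new_jobs = 194_300
projection_years = 10

# Projected growth as a percentage of today's engineering jobs
growth_pct = projected_new_jobs / current_jobs * 100

# Simple average, assuming the growth is spread evenly over the period
avg_new_jobs_per_year = projected_new_jobs / projection_years

print(f"Projected growth over {projection_years} years: {growth_pct:.1f}%")
print(f"Average new jobs per year: {avg_new_jobs_per_year:,.0f}")
```

That works out to growth of roughly 11.6 percent over the decade, or about 19,430 new engineering jobs per year on average.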

The average salary for an engineer is $91,010. The average across all engineering disciplines may not be particularly meaningful. The following slides show the average salaries for individual engineering disciplines.

These fields cover the major areas of engineering. Hope you enjoyed this one. Show it to your kids and grandkids.
