8 ATTRIBUTES OF GREAT ACHIEVERS

July 19, 2013

This book was written by Cameron C. Taylor and published by the Does Your Bag Have Holes Foundation, copyright 2009.

In looking at the lives of the greatest achievers, we see that they share very similar attributes foundational to their endeavors. We see those who have achieved great success and think they are somehow uniquely gifted or talented and that we could never duplicate their success; however, great achievers are not simply born, they are developed. Cameron Taylor gives us insight into the characteristics common to all individuals who achieve greatly. He looks at the following individuals: Winston Churchill, Steve Young, Mahatma Gandhi, Sam Walton, Hyrum Smith, Benjamin Franklin, Abraham Lincoln, the Wright Brothers, George Washington, Dwight D. Eisenhower, Jon M. Huntsman, Walt Disney, Stephen R. Covey, Sylvester Stallone, and Colonel Sanders.

Mr. Taylor develops his book around the following principles:

- RESPONSIBILITY—Each choice has a consequence. “Each choice carries a consequence. For better or worse, each choice is the unavoidable consequence of its predecessor. There are no exceptions. If you can accept that a bad choice carries the seed of its own punishment, why not accept the fact that a good choice yields desirable fruit?”—Gary Ryan Blair.

- CREATIVITY—“I do not think there is any thrill that can go through the human heart like that felt by the inventor”—Nikola Tesla.

- INDEPENDENCE—“Every handout has a price and that price is a loss of freedom. We must preserve our talents of self-sufficiency, our ability to create things for ourselves, and our true love of independence.”—Cameron C. Taylor.

- HUMILITY—“The supreme quality for leadership is unquestionably integrity. Without it, no real success is possible.”—Dwight D. Eisenhower.

- HONESTY—“Trust is the glue of life.” –Stephen R. Covey.

- OPTIMISM—“The world is moving so fast these days that the man who says it can’t be done is generally interrupted by someone doing it.”—Elbert Hubbard.

- VISION—“You are never too old to set another goal or to dream a new dream.” C.S. Lewis.

- PERSISTENCE—“Like most overnight successes, it was about twenty years in the making.”—Sam Walton.

One excellent chapter explains how to uplift others: how to make them KNOW they are contributors to a specific effort, a great cause, if you will. He details the steps as follows:

- Give Sincere Compliments

- Smile

- Remember Names

- Value Differences

- Don’t Gossip

- If Offended, Take the Initiative

- Return Good for Evil

- Accept the Person for Who They Are

- Tell the Individual “I Love You”

- Serve Others

- Listen and Be Understanding

He sums up his book by indicating:

- The root of our actions is our attributes

- Attributes can be developed

I can and do recommend this book to you. It’s a very “readable” book, and one that hit more than one nerve.

SOLAR IMPULSE

July 2, 2013

The following post uses as its reference the Design Engineering Daily-ECN publication detailing the flight of the Solar Impulse solar-powered airplane. Also noted: an AP press release detailing the touchdown at Dulles International Airport outside Washington, D.C.

I would like to start by providing several JPEGs that show the configuration of the “heavier-than-air” solar-powered plane as well as several performance specifications. This will give you some idea as to the complexity of the project.

Solar Impulse is a Swiss long-range solar-powered aircraft project developed at the École Polytechnique Fédérale de Lausanne. Its specifications are as follows:

General characteristics

- Crew: 1

- Length: 21.85 m (71.7 ft)

- Wingspan: 63.4 m (208 ft)

- Height: 6.40 m (21.0 ft)

- Wing area: 200 m² (2,200 sq ft), covered by 11,628 photovoltaic cells rated at 45 kW peak

- Loaded weight: 1,600 kg (3,500 lb)

- Max. takeoff weight: 2,000 kg (4,400 lb)

- Powerplant: 4 × electric motors, powered by 4 x 21 kWh lithium-ion batteries (450 kg), providing 7.5 kW (10 HP) each

- Take-off speed: 35 kilometers per hour (22 mph)

Performance

- Cruise speed: 70 kilometers per hour (43 mph)

- Endurance: 36 hours (projected)

- Service ceiling: 8,500 m (27,900 ft) with a maximum altitude of 12,000 meters (39,000 ft)
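Several derived figures follow directly from the specification list above. The short Python sketch below computes them, assuming that the 450 kg battery mass is the total for all four packs and that the 45 kW peak rating is shared evenly across the 11,628 cells; these are simplifications for illustration, not published design figures.

```python
# Figures taken from the specification list above.
CELLS = 11_628
PEAK_SOLAR_KW = 45.0
WING_AREA_M2 = 200.0
LOADED_WEIGHT_KG = 1_600.0
BATTERY_PACKS = 4
PACK_KWH = 21.0
BATTERY_MASS_KG = 450.0  # assumption: total mass of all four packs
MOTORS = 4
MOTOR_KW = 7.5


def derived_specs() -> dict:
    """Simple ratios computed from the published specifications."""
    battery_kwh = BATTERY_PACKS * PACK_KWH
    motor_kw = MOTORS * MOTOR_KW
    return {
        "watts_per_cell_peak": PEAK_SOLAR_KW * 1000 / CELLS,
        "wing_loading_kg_per_m2": LOADED_WEIGHT_KG / WING_AREA_M2,
        "battery_kwh": battery_kwh,
        "battery_wh_per_kg": battery_kwh * 1000 / BATTERY_MASS_KG,
        "max_motor_kw": motor_kw,
        # Hours the batteries alone could run all four motors at full power.
        "hours_at_full_power": battery_kwh / motor_kw,
    }


if __name__ == "__main__":
    for name, value in derived_specs().items():
        print(f"{name}: {value:.2f}")
```

On these simplified assumptions, the batteries could run all four motors at full power for under three hours, which suggests why sustained night flight depends on cruising well below the 30 kW combined maximum.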

The aircraft itself is designed with massive wings, with thousands of photovoltaic cells placed strategically across the flight surfaces to collect as much solar energy as possible.

Pilot Bertrand Piccard was at the controls for the last time on the multi-leg “Across America” journey that began May 3 in San Francisco. His fellow Swiss pilot, André Borschberg, is expected to fly the last leg from Washington to New York City’s John F. Kennedy International Airport in early July, the web site added. Captain Borschberg is shown below being congratulated by the “Impulse” team.

It’s the first bid by a solar plane capable of being airborne day and night without fuel to fly across the U.S., at speeds reaching about 40 mph. The plane flew from San Francisco via Arizona, Texas, Missouri and Ohio on to Dulles, with stops of several days in cities along the way.

Organizers indicated Piccard soared across the Appalachian Mountains on a 435-mile (700-kilometer) course from Cincinnati to the Washington area, averaging 31 mph (50 kph). It was the second phase of a leg that began in St. Louis.
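As a quick check on these figures, dividing the leg length by the average speed gives the time aloft, roughly 14 hours in either unit system. The helper name below is hypothetical, and the calculation assumes the reported average applies to the whole course.

```python
def leg_hours(distance: float, average_speed: float) -> float:
    """Flight time for a leg flown at a constant average speed (same distance unit in both)."""
    if average_speed <= 0:
        raise ValueError("average_speed must be positive")
    return distance / average_speed


# Cincinnati to the Washington area, using the figures quoted above.
print(f"{leg_hours(435, 31):.1f} h (miles, mph)")
print(f"{leg_hours(700, 50):.1f} h (km, km/h)")
```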

The plane, considered the world’s most advanced sun-powered aircraft, is powered by about 12,000 photovoltaic cells that cover its enormous wings and charge its batteries during the day. The single-seat Solar Impulse flies around 40 mph and can’t go through clouds; weighing about as much as a car, the aircraft also took longer than a car to complete the journey from Ohio to the East Coast.

Despite the plane’s vulnerability to bad weather, Piccard said in a statement that completing all but the final leg of the cross-continent trip by sun power “proves the reliability and potential of clean technologies.”

Organizers said fog at Cincinnati Municipal Lunken Airport was a concern that required the ground crew’s attention before takeoff just after 10 a.m. Saturday. The crew gave the plane a gentle wipe-down with cloths because of condensation that had formed on the wings.

“The solar airplane was in great shape despite the quasi-shower it experienced” before takeoff from Cincinnati, the web site added.

Washington was the first East Coast stop before the final planned leg to New York.

Organizers said the flight into the nation’s capital was an emotional one for Piccard, as it was his last on the cross-country flight before Borschberg takes the controls for the final trek to New York.

At each stop along the way, the plane has stayed several days, wowing visitors. Organizers said a public viewing of the aircraft would be held Sunday afternoon at Dulles.

Piccard and Borschberg, the plane’s creators, have said their trip, on which they took turns flying the aircraft solo, was the first attempt by a solar airplane capable of flying day and night without fuel to fly across America. They also called it another aviation milestone and hoped the journey would whet greater interest in clean technologies and renewable energy.

The Swiss pilots said in a statement that they expected to participate in an energy roundtable and news conference Monday with U.S. Energy Secretary Ernest Moniz about the technology. They have said the project’s ultimate goal is to fly a sun-powered aircraft around the world with a second-generation plane now in development.

Borschberg also said in a statement Sunday that the pilots are eyeing 2015 for a worldwide attempt, adding that their ‘Across America’ voyage had taught them much as they prepare.

One of my favorite people in history is George Bernard Shaw. He said: “You see things; and you say, ‘Why?’ But I dream things that never were; and I say, ‘Why not?’”

I can definitely say the dreamer in all of us hopes for the very best during the 2015 flight around the world. Well done.

HERSCHEL SPACE OBSERVATORY

July 1, 2013

“Two men look out of the same prison bars; one sees mud and the other stars.”

For centuries and centuries men have looked up—seen the stars, wondered about their creation and pondered traveling to distant planets and star systems. The action of the two prisoners above indicates interest by one and no interest from the other in “all things celestial”. The real truth is, just about everyone in every country is fascinated with the cosmos. Events leading up to the creation lie in the stars. No doubt about it.

One significant effort to unwrap the truth was undertaken by the ESA (European Space Agency), which launched the Herschel Space Observatory on May 14, 2009. The observatory is named after Sir William Herschel and is the fourth Cornerstone mission in the European Space Agency’s Horizon 2000 program. Ten countries, including the United States, participated in its design and implementation. Sir William, who worked closely with his sister Caroline, discovered the planet Uranus in 1781 and, in 1800, radiation lying beyond the red end of the visible spectrum, in the region that we today call “infrared.”

The far-infrared and sub-millimeter wavelengths at which Herschel made its observations are considerably longer than those of the familiar rainbow of colors that the human eye can perceive. Yet this is a critically important portion of the spectrum to scientists, because it is the range in which a large part of the universe radiates. Much of the universe consists of gas and dust far too cold to radiate in visible light or at shorter wavelengths such as x-rays. However, even at temperatures well below the most frigid spot on Earth, they do radiate at far-infrared and sub-millimeter wavelengths.
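Why cold gas and dust show up at these wavelengths can be seen with Wien's displacement law, λ_max = b / T, which gives the wavelength at which a blackbody at temperature T radiates most strongly. The Python sketch below treats each source as an ideal blackbody, which is a simplification; the example temperatures are illustrative choices, not values from Herschel observations.

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometers) of peak blackbody emission at the given temperature."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_B / temperature_k * 1e6


# Illustrative temperatures (assumptions, not measured Herschel targets).
examples = {
    "Sun's surface (~5,800 K)": 5800.0,
    "Coldest spot on Earth (~184 K)": 184.0,
    "Cold interstellar dust (~20 K)": 20.0,
    "Very cold dust (~10 K)": 10.0,
}
for label, t in examples.items():
    print(f"{label}: peak near {peak_wavelength_um(t):.1f} µm")
```

Dust at 10 to 20 K peaks at roughly 150 to 290 micrometers, in the far-infrared and sub-millimeter range described above, while even the coldest place on Earth peaks near 16 micrometers.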

Stars and other cosmic objects hot enough to shine at optical wavelengths are often hidden behind vast dust clouds that absorb visible light and re-radiate the absorbed energy in the far-infrared and sub-millimeter.

There is a great deal to see at these wavelengths, and much of it has been virtually unexplored. Earthbound telescopes are largely unable to observe this portion of the spectrum because most of this light is absorbed by moisture in the atmosphere before it can reach the ground. Previous space-based infrared telescopes have had neither the sensitivity of Herschel’s large mirror, nor the ability of Herschel’s three detectors to do such a comprehensive job of sensing this important part of the spectrum.

Two-thirds of Herschel’s observation time has been made available to the world scientific community, with the remainder reserved for the spacecraft’s science and instrument teams. The flood of data from Herschel makes it impractical for any single website to provide up-to-date or reasonably complete information about all of the observations that have been carried out and published in scientific journals.

Well, we knew it was coming, but it is still sad to see the end of a mission. Controllers for the Herschel space telescope sent final commands to put the observatory into a heliocentric parking orbit. Commands were sent at 12:25 GMT on June 17, 2013, marking the official end of operations for Herschel. But expect more news from this spacecraft’s observations, as there is still a treasure trove of data that will keep astronomers busy for many years to come. Additionally, maneuvers performed by the spacecraft allowed engineers to test control techniques that can’t normally be tested in flight. Herschel’s science mission had already ended in April, when the liquid helium that cooled the observatory’s instruments ran out.

Herschel will now be parked indefinitely in a heliocentric orbit as a way of “disposing” of the spacecraft. It should be stable for hundreds of years, but perhaps scientists will figure out another use for it in the future. One original idea for disposing of the spacecraft was to have it impact the Moon, à la the LCROSS mission that slammed into the Moon in 2009; the impact would have kicked up volatiles at one of the lunar poles for observation by another spacecraft, such as the Lunar Reconnaissance Orbiter. But that idea was nixed in favor of the parking orbit.

Before the spacecraft was put into its final orbit, engineers used it for several in-orbit validations and analyses of hardware and software.

On May 13-14, engineers commanded Herschel to fire its thrusters for a record 7 hours and 45 minutes. This boosted the satellite away from its operational orbit around the Sun–Earth L2 Lagrange point and into a heliocentric orbit farther from the Sun, and slower, than Earth’s orbit. This depleted most of the fuel, and the final thruster command on June 17 used up the rest. That final command was the last step in a complex series of flight control activities and thruster maneuvers designed to take Herschel into a safe disposal orbit around the Sun; all of its systems were also turned off.
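The point that an orbit farther from the Sun is also slower follows from the circular-orbit relations v = √(GM/r) and T = 2π√(r³/GM). The Python sketch below compares Earth's distance (1 AU) with an orbit 1% farther out; treating both orbits as circular and using the 1% figure are simplifying assumptions for illustration, not Herschel's actual orbital elements.

```python
import math

GM_SUN = 1.32712440018e20  # Sun's gravitational parameter, m^3/s^2
AU = 1.495978707e11        # astronomical unit, m
DAY = 86_400.0             # seconds per day


def circular_speed_km_s(r_m: float) -> float:
    """Speed of a circular orbit around the Sun at radius r_m, in km/s."""
    return math.sqrt(GM_SUN / r_m) / 1000.0


def period_days(r_m: float) -> float:
    """Period of a circular orbit around the Sun at radius r_m, in days."""
    return 2.0 * math.pi * math.sqrt(r_m**3 / GM_SUN) / DAY


for label, r in [("1.00 AU (Earth)", AU), ("1.01 AU (illustrative)", 1.01 * AU)]:
    print(f"{label}: {circular_speed_km_s(r):.2f} km/s, period {period_days(r):.1f} days")
```

Under these assumptions the outer orbit is slightly slower and takes a few days longer per circuit, so a spacecraft parked there gradually drifts away from Earth.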

“Herschel has not only been an immensely successful scientific mission, it has also served as a valuable flight operations test platform in its final weeks of flight. This will help us increase the robustness and flexibility of future missions operations,” said Paolo Ferri, ESA’s Head of Mission Operations. “Europe really received excellent value from this magnificent satellite.”

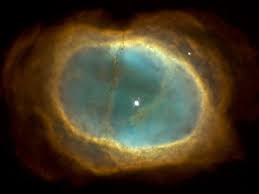

Let’s now take a look at the results from the years of observation. I hope you enjoy this very small sample of the pictures beamed back to Earth. I welcome your comments.

CRYOGENICS

July 27, 2013

History

When we consider the history of cryogenics, we see that this technology, like most others, has been evolutionary and not necessarily revolutionary. Steady progress in the field has brought us to where we are today. The cryogenics industry is flourishing, with new applications found every year. For this reason, it remains viable and has given us processes that certainly benefit our daily lives.

The Invention

The invention of the thermometer by Galileo in 1592 may be considered the start of the science of thermodynamics. Thermodynamics lies at the heart of temperature measurement, including the measurement of substances at extremely low temperatures. A Galileo thermometer (or Galilean thermometer) is a device made with a sealed glass cylinder containing a clear liquid and several glass floats of varying densities. As the temperature changes, the density of the liquid changes, and each float rises or sinks depending on whether it is less dense or denser than the surrounding liquid. Galileo discovered the principle on which this thermometer is based: the density of a liquid changes with its temperature.
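The principle can be sketched in a few lines of Python. Each glass float is weighted to match the liquid's density at the temperature on its tag; as the liquid warms and becomes less dense, floats denser than the liquid sink. The liquid properties below (a reference density of 789 kg/m³, roughly that of ethanol at 20 °C, and a volumetric expansion coefficient of 1.1 × 10⁻³ per kelvin) are assumptions for illustration, and the linear density model is a simplification.

```python
RHO_REF = 789.0  # kg/m^3 at T_REF (assumed, roughly ethanol)
T_REF = 20.0     # deg C
BETA = 1.1e-3    # 1/K, assumed volumetric expansion coefficient


def liquid_density(t_c: float) -> float:
    """Linear approximation: the liquid becomes less dense as it warms."""
    return RHO_REF * (1.0 - BETA * (t_c - T_REF))


def float_density_for(label_c: float) -> float:
    """A float tagged label_c is weighted to match the liquid at that temperature."""
    return liquid_density(label_c)


def bracket(labels, t_c):
    """Return (highest sunken tag, lowest floating tag); None if a group is empty."""
    rho = liquid_density(t_c)
    sunk = [lab for lab in labels if float_density_for(lab) > rho]
    floating = [lab for lab in labels if float_density_for(lab) <= rho]
    return (max(sunk) if sunk else None, min(floating) if floating else None)


print(bracket([16, 18, 20, 22, 24, 26], 21.0))  # (20, 22)
```

At 21 °C the 16, 18 and 20 °C floats sink and the 22, 24 and 26 °C floats stay up, so the temperature lies between the highest sunken tag and the lowest floating one.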

Although named after Galileo, the thermometer described above was not invented by him. Galileo did invent a thermometer, called Galileo’s air thermometer (more accurately termed a thermoscope), in or before the year 1603. The so-called ‘Galileo thermometer’ was actually invented by a group of academics and technicians known as the Accademia del Cimento of Florence. This group included one of Galileo’s pupils, Evangelista Torricelli, and Torricelli’s pupil Viviani. Torricelli was an Italian physicist and mathematician, best known for his invention of the barometer, although he also contributed to the invention of the thermometer. Details of the thermometer were published in the Saggi di naturali esperienze fatte nell’Accademia del Cimento sotto la protezione del Serenissimo Principe Leopoldo di Toscana e descritte dal segretario di essa Accademia (1666), the Academy’s main publication. The English translation of this work (1684) describes the device (‘The Fifth Thermometer’) as ‘slow and lazy’, a description that is reflected in an alternative Italian name for the invention, the termometro lento (slow thermometer). The outer vessel was filled with ‘rectified spirits of wine’ (a concentrated solution of ethanol in water); the weights of the glass bubbles were adjusted by grinding a small amount of glass from the sealed end; and a small air space was left at the top of the main vessel to allow ‘for the Liquor to rarefie’.

Guillaume Amontons first predicted the existence of an absolute zero in 1702, which marks the beginning of the science of low temperatures. Around 1780, a gas was liquefied for the first time. It took almost 100 years before a so-called “permanent” gas, i.e. oxygen, was successfully liquefied. Thereafter Linde and Claude founded the cryogenic industry, which today has annual sales of more than US$30 billion. Kamerlingh Onnes and his Cryogenic Laboratory in Leiden worked in the field of low-temperature physics, which contributed to the experimental proof of quantum theory. Heike Kamerlingh Onnes (1853-1926; winner of the 1913 Nobel Prize in Physics) liquefied the most difficult gas of all, helium. He liquefied it at the lowest temperature ever achieved in a laboratory to that date, 4.2 kelvin (the Kelvin scale measures temperature from absolute zero, in units the same size as degrees Celsius). We will discuss the Kelvin temperature scale in depth later on in this course. This marked a significant milestone in the history of cryogenics. Since that achievement, increased attention has been devoted to the study of physical phenomena of substances at very low temperatures.
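Converting between the scales is simple arithmetic: kelvin = degrees Celsius + 273.15. The Python sketch below applies it to Onnes's 4.2 K and to the approximate normal boiling points of the gases discussed in this section; the boiling points are rounded textbook values added for illustration, not figures from the original text.

```python
ABSOLUTE_ZERO_C = -273.15


def kelvin_to_celsius(k: float) -> float:
    if k < 0:
        raise ValueError("kelvin temperatures cannot be negative")
    return k + ABSOLUTE_ZERO_C


def celsius_to_fahrenheit(c: float) -> float:
    return c * 9.0 / 5.0 + 32.0


# Approximate normal boiling points at one atmosphere, in kelvin (rounded).
BOILING_POINTS_K = {
    "oxygen": 90.2,
    "nitrogen": 77.4,
    "hydrogen": 20.3,
    "helium": 4.2,
}

for gas, k in BOILING_POINTS_K.items():
    c = kelvin_to_celsius(k)
    print(f"{gas:>8}: {k:5.1f} K = {c:8.2f} °C = {celsius_to_fahrenheit(c):8.2f} °F")
```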

Early Research

British chemists Michael Faraday (1791-1867) and Sir Humphry Davy (1778-1829) did pioneering work in low-temperature physics that led to the ongoing development of cryogenics. In the early to middle 1800s they were able to produce gases by heating mixtures at one end of a sealed tube in the shape of an inverted “V.” A salt and ice mixture was used to cool the other end of the tube. This combination of reduced temperature and increased pressure caused the gas that was produced to liquefy (turn to a liquid). When they opened the tube, the liquid quickly evaporated and cooled to its normal boiling point.

In 1877, French mining engineer Louis Paul Cailletet announced that he had liquefied oxygen and nitrogen. Cailletet was able to produce only a few droplets of these liquefied gases, however. In his research with oxygen, Cailletet collected the oxygen in a sturdy container and cooled it by evaporating sulphur dioxide in contact with the container. He then compressed the oxygen as much as possible with his equipment. Next he reduced the pressure suddenly, causing the oxygen to expand. The sudden cooling that resulted caused a few drops of liquid oxygen to form.
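The cooling Cailletet exploited can be estimated with the ideal-gas relation for a reversible adiabatic expansion, T₂ = T₁ (P₂/P₁)^((γ−1)/γ), with γ ≈ 1.4 for a diatomic gas such as oxygen. The starting conditions in the Python sketch below are hypothetical, not Cailletet's recorded values, and a real gas near its liquefaction point departs from ideal behavior, so treat the result as an idealized estimate rather than a prediction.

```python
def adiabatic_final_temperature(t1_k: float, p1: float, p2: float, gamma: float = 1.4) -> float:
    """Ideal-gas temperature after a reversible adiabatic change from p1 to p2 (same pressure units)."""
    if min(t1_k, p1, p2) <= 0:
        raise ValueError("temperatures and pressures must be positive")
    return t1_k * (p2 / p1) ** ((gamma - 1.0) / gamma)


# Hypothetical starting point: gas precooled to about -29 °C (244 K) at 300 atm,
# then released to 1 atm.
t2 = adiabatic_final_temperature(244.0, 300.0, 1.0)
print(f"Idealized final temperature: {t2:.0f} K")
```

The idealized figure lands well below oxygen's normal boiling point of about 90 K, which helps explain why even an imperfect real expansion could condense a few droplets.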

The need to store liquefied gases led to another important development in cryogenics. In 1891 Scottish chemist James Dewar (1842-1923) introduced the container known today as the “Dewar flask.” The Dewar flask is actually two flasks, one within the other, separated by an evacuated space (a vacuum). The inside of the outer flask and the outside of the inner flask are coated with silver. Together, the vacuum and the silvered surfaces minimize heat transfer: the vacuum blocks conduction and convection, while the silvering reflects radiant heat.
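The effect of the silvering can be estimated with the textbook formula for radiative heat flux between two large parallel surfaces, q = σ(T_h⁴ − T_c⁴) / (1/ε₁ + 1/ε₂ − 1), where ε is the surface emissivity. The Python sketch below compares bare glass walls with silvered ones; the emissivities (about 0.9 for glass, 0.02 for polished silver) and the parallel-plate geometry are rough assumptions, and conduction through the flask's neck is ignored.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2*K^4)


def radiative_flux(t_hot_k: float, t_cold_k: float, eps_hot: float, eps_cold: float) -> float:
    """Net radiative heat flux (W/m^2) between two large parallel grey surfaces."""
    return SIGMA * (t_hot_k**4 - t_cold_k**4) / (1.0 / eps_hot + 1.0 / eps_cold - 1.0)


# Outer wall at room temperature, inner wall at liquid-nitrogen temperature.
ROOM_K, LN2_K = 293.0, 77.0

bare = radiative_flux(ROOM_K, LN2_K, 0.9, 0.9)          # assumed glass emissivity
silvered = radiative_flux(ROOM_K, LN2_K, 0.02, 0.02)    # assumed silver emissivity
print(f"bare glass: {bare:.1f} W/m^2, silvered: {silvered:.1f} W/m^2, ratio {bare / silvered:.0f}x")
```

Under these assumptions the silvered walls pass roughly one-eightieth of the radiant heat that bare glass would.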

Dewar was also the first person to liquefy hydrogen, in 1898. Cryogenics, as we recognize it today, started in the late 1800s when Sir James Dewar (1842–1923) perfected a technique for compressing gases from the atmosphere into liquids and storing them. (Some credit a Belgian team as being first to separate and liquefy gases, but being British we’ll stay with Sir James Dewar for now.) These liquefied gases were super cold, and any metal that came in contact with the ultra-low temperatures showed some interesting changes in its characteristics.

Sir James first liquefied hydrogen in 1898, and a year later he managed to solidify it. Just think on that for a moment: this was before electricity was common in houses, when cars and buses were a rare find and photography was a rich man’s hobby. By pure persistence and fantastic mental ability, a whole generation of ‘Gentleman Scientists’ managed to bring into existence many things we both rely on and take for granted today.

Sir James managed to study, and lay the cornerstones for, the production of a wide range of gases that we use in our everyday lives, mostly without even realizing it. He also invented the Thermos flask (how else was he to save his liquid gas samples?), the industrial version of which still bears his name: ‘Dewar’.

Later Accomplishments

In the 1940s, scientists discovered that by immersing some metals in liquid nitrogen they could increase the wear resistance of motor parts, particularly in aircraft engines, giving a longer service life. At the time this was little more than dipping a part into a flask of liquid nitrogen, leaving it there for an hour or two and then letting it return to room temperature. They managed to get the hardness they wanted, but the parts became brittle. Because this crude method still offered some benefits, further research into the process was conducted. The applications at this stage were mostly military.

NASA led the way and perfected a method to gain the best results, consistently, for a whole range of metals. The performance increase in parts was significant but so was the cost of performing the process.

Work continued over the years to perfect the process: insulation materials improved, methods of moving the gas around the process developed and, most importantly, so did the ability to tightly control the rate of temperature change.
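Controlling the rate of temperature change amounts to following a setpoint schedule: ramp down slowly, hold (soak) at the low temperature, then ramp back up. The Python sketch below generates such a schedule; the function name is hypothetical, and the temperatures, rate and soak time in the example are placeholders, not a recommended treatment recipe.

```python
from typing import List, Tuple


def ramp_soak_schedule(start_c: float, soak_c: float, ramp_c_per_min: float,
                       soak_min: float, step_min: float = 10.0) -> List[Tuple[float, float]]:
    """(minute, setpoint in °C) pairs: linear cool-down, hold at soak_c,
    then linear warm-up back to start_c at the same rate."""
    if ramp_c_per_min <= 0 or step_min <= 0 or soak_min < 0:
        raise ValueError("ramp rate and step must be positive; soak time cannot be negative")
    ramp_min = abs(start_c - soak_c) / ramp_c_per_min
    total_min = 2 * ramp_min + soak_min
    schedule = []
    for i in range(int(total_min // step_min) + 1):
        t = i * step_min
        if ramp_min > 0 and t <= ramp_min:
            # Cool-down ramp.
            setpoint = start_c + (soak_c - start_c) * (t / ramp_min)
        elif t <= ramp_min + soak_min:
            # Soak at the low temperature.
            setpoint = soak_c
        else:
            # Warm-up ramp back to the starting temperature.
            setpoint = soak_c + (start_c - soak_c) * ((t - ramp_min - soak_min) / ramp_min)
        schedule.append((t, setpoint))
    return schedule


# Hypothetical profile: 20 °C down to -190 °C at 1 °C/min, 10-hour soak, 30-minute steps.
profile = ramp_soak_schedule(20.0, -190.0, 1.0, 600.0, step_min=30.0)
for minute, setpoint in profile[::6]:
    print(f"t = {minute:6.0f} min  setpoint = {setpoint:7.1f} °C")
```

A controller would feed each setpoint to the cooling hardware in turn; keeping the ramp slow is what limits thermal shock in the part.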

Technology enabled scientists to look deeper into the very structure of metals and better understand what was happening to the atoms and how they bond with carbon. They also started to better understand the role that temperature plays in the treatment of metals to affect their final characteristics (more information in the ‘How it works’ section).

As with most everything in our lives today, the microprocessor enabled a steady reduction in the size of the control equipment required, as well as an increase in the accuracy of that part of the process.

Since the mid-1990s, the process has started to become a commercially viable treatment in terms of process cost versus performance benefits.

COMMERCIAL USES OF CRYOGENICS

FOOD PROCESSING

Freezing is one of the oldest and most widely used methods of food preservation. It preserves the taste, texture, and nutritional value of foods better than any other method. The freezing process combines the beneficial effects of low temperatures, at which microorganisms cannot grow, chemical reactions are reduced, and cellular metabolic reactions are delayed. Cryogenic freezing is one of the methods most often used to accomplish this preservation.

The importance of freezing as a preservation method

Freezing preservation retains the quality of agricultural products over long storage periods. As a method of long-term preservation for fruits and vegetables, freezing is generally regarded as superior to canning and dehydration with respect to retention of sensory attributes and nutritive properties (Fennema, 1977). The safety and nutritional quality of frozen products are best assured when high quality raw materials are used, good manufacturing practices are employed in the preservation process, and the products are kept at the specified temperatures.

The need for freezing and frozen storage

Freezing has been successfully employed for the long-term preservation of many foods, providing a significantly extended shelf life. The process involves lowering the product temperature generally to -18 °C or below (Fennema et al., 1973). The physical state of food material is changed when energy is removed by cooling below freezing temperature. The extreme cold simply retards the growth of microorganisms and slows down the chemical changes that affect quality or cause food to spoil (George, 1993).

Even when competing with new technologies for the minimal processing of foods, industrial freezing is the most satisfactory method for preserving quality during long storage periods (Arthey, 1993). When compared in terms of energy use, cost, and product quality, freezing requires the shortest processing time. Other conventional methods of preserving fruits and vegetables, including dehydration and canning, require less energy than the freezing process and frozen storage. However, when the overall cost is estimated, freezing costs can be kept as low as, or lower than, those of any other method of food preservation (Harris and Kramer, 1975).

Current status of the frozen food industry in the U.S. and other countries

The frozen food market is one of the largest and most dynamic sectors of the food industry. In spite of considerable competition between the frozen food industry and other sectors, large quantities of frozen foods are consumed all over the world. The industry has recently grown to a value of over US$75 billion in the U.S. and Europe combined. Total retail sales of frozen foods in the U.S. alone reached US$27.3 billion in 2001 (AFFI, 2003). In Europe, frozen food consumption reached 11.1 million tons in 13 countries in the year 2000 (Quick Frozen Foods International, 2000).

Advantages of freezing technology in developing countries

Developed countries, mostly the U.S., dominate the international trade of fruits and vegetables. The U.S. is ranked number one as both importer and exporter, accounting for the highest percentage of fresh produce in world trade. However, many developing countries still lead in the export of fresh exotic fruits and vegetables to developed countries (Mallett, 1993).

For developing countries, several main considerations favor the application of freezing preservation. From a technical point of view, freezing is one of the most convenient and easiest food preservation methods compared with other commercial preservation techniques. The availability of different types of equipment for several different food products results in a flexible process in which degradation of initial food quality is minimal when proper procedures are applied. However, the high capital investment required by the freezing industry usually plays an important role in the economic feasibility of the process in developing countries. As for cost distribution, energy consumption for the freezing process and storage constitutes approximately 10 percent of the total cost (Person and Lohndal, 1993). Depending on government regulations, especially in developing countries, energy costs for producers can be subsidized by lowering the unit price or reducing the tax percentage in order to enhance production. Therefore, in determining the economic feasibility of the process, the cost of energy consumption (according to energy tariffs) should be considered.

Frozen food industry in terms of annual sales in 2001 (sales in millions, with change vs. 2000). Source: Information Resources.

Market share of frozen fruits and vegetables

In modern society, frozen fruits and vegetables constitute a large and important group among frozen food products (Arthey, 1993). The historical development of commercial freezing systems designed for specific food commodities helped shape the frozen food market. Technological innovations as early as 1869 led to the commercial development and marketing of some frozen foods. Early products saw limited distribution through retail establishments due to an insufficient supply of mechanical refrigeration. Retail distribution of frozen foods gained importance with the development of commercially frozen vegetables in 1929.

The frozen vegetable industry grew mostly after the development of scientific methods for blanching and processing in the 1940s. Only once enzymatic degradation could be stopped did frozen vegetables gain strong retail and institutional appeal. Today, market studies indicate that frozen vegetables constitute a very significant proportion of overall frozen food consumption (excluding ice cream) in Austria, Denmark, Finland, France, Germany, Italy, Netherlands, Norway, Sweden, Switzerland, UK, and the USA. The division of frozen vegetables in terms of annual sales in 2001 is shown in Table 3.

The commercialization of frozen fruits predates that of frozen vegetables. The commercial freezing of small fruits and berries began in the eastern part of the U.S. in about 1905 (Desrosier and Tressler, 1977). The main advantage of freezing preservation of fruits is that frozen fruits can be used during the off-season. Additionally, frozen fruits can be transported to remote markets that could not be reached with fresh fruit. Freezing preservation also makes year-round further processing of fruit products possible, such as jams, juice, and syrups from frozen whole fruit, slices, or pulps. In summary, freezing has clearly become one of the most important methods of preserving fruits.

SUPERCONDUCTIVITY

Superconductivity: Properties, History, Applications and Challenges

Superconductors differ fundamentally from conventional materials in the quantum-mechanical manner in which electrons, or electric currents, move through them. It is these differences that give rise to the unique properties and performance benefits that differentiate superconductors from all other known conductors. Superconductivity is achieved by using cryogenic methods to cool a material below its critical temperature.

Unique Properties

• Zero resistance to direct current

• Extremely high current carrying density

• Extremely low resistance at high frequencies

• Extremely low signal dispersion

• High sensitivity to magnetic field

• Exclusion of externally applied magnetic field

• Rapid single flux quantum transfer

• Close to speed of light signal transmission

Zero resistance and high current density have a major impact on electric power transmission and also enable much smaller or more powerful magnets for motors, generators, energy storage, medical equipment and industrial separations. Low resistance at high frequencies and extremely low signal dispersion are key aspects in microwave components, communications technology and several military applications. Low resistance at higher frequencies also substantially reduces the miniaturization challenges caused by resistive, or I²R, heating. The high sensitivity of superconductors to magnetic fields provides a unique sensing capability, in many cases 1,000 times superior to today’s best conventional measurement technology. Magnetic field exclusion is important in multi-layer electronic component miniaturization, provides a mechanism for magnetic levitation and enables magnetic field containment of charged particles. The final two properties form the basis for digital electronics and computing well beyond the theoretical limits projected for semiconductors. All of these material properties have been extensively demonstrated throughout the world.
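Two numbers sit behind this paragraph. Resistive heating in an ordinary conductor is P = I²R, which vanishes for a superconductor carrying direct current, and the single flux quantum used in superconducting digital logic is Φ₀ = h/(2e). The Python sketch below computes both; the 1,000 A current and the 0.05 Ω resistance of the conventional cable are hypothetical values chosen only for illustration.

```python
PLANCK_H = 6.62607015e-34            # J*s (exact in the SI since 2019)
ELEMENTARY_CHARGE = 1.602176634e-19  # C (exact in the SI since 2019)


def resistive_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Joule (I^2 R) heating in watts."""
    return current_a**2 * resistance_ohm


def flux_quantum_wb() -> float:
    """Magnetic flux quantum h / (2e), in webers."""
    return PLANCK_H / (2.0 * ELEMENTARY_CHARGE)


# Hypothetical 1,000 A load on a conventional cable versus a superconducting one.
print(f"Conventional cable: {resistive_loss_w(1_000.0, 0.05) / 1000:.0f} kW lost as heat")
print(f"Superconducting cable (DC): {resistive_loss_w(1_000.0, 0.0):.0f} W")
print(f"Flux quantum: {flux_quantum_wb():.4e} Wb")
```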

STEM CELL RESEARCH AND USAGE

Cryopreservation of Haematopoietic Stem Cells

This routine procedure generally involves slow cooling in the presence of a cryoprotectant to avoid the damaging effects of intracellular ice formation. The cryoprotectant in popular use is dimethyl sulphoxide (DMSO), and a controlled rate freezing technique at 1 to 2 °C/min, followed by rapid thawing, is considered standard. Passive cooling devices that use mechanical refrigerators, generally at −80 °C, to cool the cells (so-called dump-freezing) generate cooling rates similar to those adopted in controlled rate freezing. Generally, the outcome from such protocols has been comparable to that of controlled rate freezing. Research has also been undertaken to replace the largely empirical approach to developing an optimized protocol with a methodical one that takes into account the sequence of damaging events that occur during the freezing and thawing process.
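At the 1 to 2 °C/min rates cited here, the length of a controlled rate run follows directly from the temperature span. The Python sketch below assumes, for illustration only, a run from +4 °C down to the −80 °C mechanical-freezer temperature mentioned above, at a single constant rate, which is a simplification of real protocols.

```python
def cooling_minutes(start_c: float, end_c: float, rate_c_per_min: float) -> float:
    """Minutes needed to cool from start_c to end_c at a constant rate."""
    if rate_c_per_min <= 0:
        raise ValueError("rate must be positive")
    if end_c > start_c:
        raise ValueError("end temperature must not be above the start temperature")
    return (start_c - end_c) / rate_c_per_min


# Assumed span: +4 °C (hypothetical starting point) down to -80 °C.
for rate in (1.0, 2.0):
    print(f"{rate:.0f} °C/min: {cooling_minutes(4.0, -80.0, rate):.0f} min")
```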

A Stirling cycle cryocooler has been developed as an alternative to conventional liquid nitrogen controlled rate freezers. Unlike liquid nitrogen systems, the Stirling cycle freezer does not pose a contamination risk, can be used in sterile conditions and has no need for a constant supply of cryogen. Three types of samples from two species (murine embryos, human spermatozoa and human embryonic stem cells), each requiring a different cooling protocol, were cryopreserved in the Stirling cycle freezer. For comparison, cells were also frozen in a conventional liquid nitrogen controlled rate freezer. Upon thawing, the survival rates of viable cells were generally greater than 50% for mouse embryos and human embryonic stem cells, based on morphology (mouse embryos) and on staining and colony formation (human embryonic stem cells). Survival rates of human spermatozoa frozen in the Stirling cycle freezer, based on motility and dead-cell staining, were similar to those of samples frozen in a conventional controlled rate freezer using liquid nitrogen.

HEAT TREATING AND CRYOCOOLING OF METALS

Sub-cooling metals offers several benefits. Cryogenic processing changes the structure of the treated material; depending on the material's composition, it does three things:

1. Turns retained austenite into martensite

2. Refines the carbide structure

3. Relieves stress

Cryogenic treatment of ferrous metals converts retained austenite to martensite and promotes the precipitation of very fine carbides.

Most heat treatments will, at best, leave between ten and twenty percent retained austenite in ferrous metals. Because austenite and martensite have crystal structures of different sizes, stresses are built into the structure wherever the two coexist. Cryogenic processing eliminates these stresses by converting most of the retained austenite to martensite.
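One way to see why cooling below room temperature converts more retained austenite is the empirical Koistinen–Marburger relation, which is not cited in the original text: for a temperature T below the martensite-start temperature Ms, the untransformed (retained) austenite fraction is roughly exp(−α(Ms − T)), with α ≈ 0.011 per kelvin often quoted for carbon steels. The sketch assumes a hypothetical Ms of 200 °C; real tool steels vary with composition, and effects such as austenite stabilization are ignored.

```python
import math

ALPHA_PER_K = 0.011  # Koistinen–Marburger rate constant often quoted for carbon steels


def retained_austenite_fraction(ms_c: float, quench_c: float, alpha: float = ALPHA_PER_K) -> float:
    """Approximate fraction of austenite left untransformed after cooling to quench_c."""
    if quench_c >= ms_c:
        return 1.0  # no martensite forms above the martensite-start temperature
    return math.exp(-alpha * (ms_c - quench_c))


MS_C = 200.0  # hypothetical martensite-start temperature, in °C

for label, temp in [("room temperature", 20.0), ("dry ice", -78.0), ("liquid nitrogen", -196.0)]:
    fraction = retained_austenite_fraction(MS_C, temp)
    print(f"{label:>16} ({temp:.0f} °C): {fraction:.1%} retained austenite")
```

Under these assumptions, roughly 14% austenite remains at room temperature, in line with the ten to twenty percent range above, falling to about 1% after cooling to liquid nitrogen temperature.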

An important factor to keep in mind is that cryogenic processing is not a substitute for heat treating. If the product is poorly heat treated, cryogenic treatment cannot help it. Likewise, if the product is overheated during re-manufacture or overstressed during use, the temper developed during heat treatment may be destroyed, which renders the cryogenic process useless. Cryogenic processing will not in itself harden metal the way quenching and tempering do; it is a treatment applied in addition to heat treating.

This transformation can itself cause problems in poorly heat-treated items that contain too much retained austenite: it may result in dimensional change and possible stress points in the treated product. This is why Cryogen Industries will not treat poorly heat-treated items.

The cryogenic metal treatment process also promotes the precipitation of small carbide particles in tool steels and suitable alloys. These fine carbides act as hard, low-friction areas within the metal that greatly add to its wear resistance.

A Japanese study of the role of carbides in the improved wear resistance of cryogenically treated tool steel concluded that the precipitation of fine carbides has more influence on the increase in wear resistance than the removal of retained austenite does.

The process also relieves residual stresses in metals and some plastics; this has been demonstrated in field studies of products used in high-impact applications where stress fractures are evident.

Cryogenic processing is not a coating; it changes the structure of the treated material right through to the core, and in practice it works in synergy with coatings. Because cryogenic processing is a one-time treatment, it never wears off the way a coating does, and you can sharpen, dress or modify your tooling without undoing its effect.

Tool Failure – Another good reason to cryogenically treat

Tooling failures that can occur are abrasive and adhesive wear, chipping, deformation, galling, catastrophic failure and stress fracture.

Abrasive wear results from friction between the tool and the work material. Adhesive wear occurs when the action of the tool exceeds the material's ductile strength, or when the material is simply too hard to process.
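A common first-order model for sliding wear, not mentioned in the original, is Archard's equation, V = K·F·s / H: wear volume V grows with load F and sliding distance s and falls as hardness H rises, with K a dimensionless wear coefficient found by experiment. It gives a rough picture of why harder microstructures, such as the fine carbides described earlier, resist wear. Every input below is a hypothetical value chosen only for illustration, and the model does not capture the chipping, micro-crack networking and pullout failures discussed later.

```python
def archard_wear_volume_mm3(k: float, load_n: float, sliding_mm: float, hardness_mpa: float) -> float:
    """Archard wear volume V = K * F * s / H.

    With load in N, sliding distance in mm and hardness in N/mm^2 (MPa),
    the result is in mm^3. k is a dimensionless, experimentally fitted coefficient.
    """
    if hardness_mpa <= 0:
        raise ValueError("hardness must be positive")
    return k * load_n * sliding_mm / hardness_mpa


# Hypothetical inputs, for illustration only.
K = 1e-4              # assumed wear coefficient
LOAD_N = 100.0        # assumed normal load
DISTANCE_MM = 1e6     # 1 km of sliding

for hardness in (6000.0, 7000.0):  # assumed hardness values in MPa
    volume = archard_wear_volume_mm3(K, LOAD_N, DISTANCE_MM, hardness)
    print(f"H = {hardness:.0f} MPa -> wear volume {volume:.2f} mm^3")
```

In this model, a 1000 MPa increase in hardness reduces the predicted wear volume from about 1.67 mm³ to about 1.43 mm³, all else being equal.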

Adhesive wear causes the formation of micro cracks (stress fractures). These micro cracks eventually interconnect, or network, and form fragments that pull out. This “pullout” looks like excessive abrasive wear on cutting edges when it is actually stress fracture failure. When fragments form, both abrasive and adhesive wear occur because the fragments become wedged between the tool and the workpiece, causing friction; this can lead to a poor finish or, at worst, catastrophic tool failure.

Catastrophic tooling failures can cause thousands of dollars in machine damage and lost production. This type of tool failure can cause warping and stress fractures in tool heads and decks, as well as in rotating and load-bearing assemblies.

OF GASES

Most of the world’s natural gas resources are remote from their markets, and their exploitation is constrained by factors such as transportation costs and market outlets. To increase the economic use of natural gas, techniques other than pipeline transmission or LNG shipment have been developed. Chemical conversion (liquefaction) of gas, which makes the gas transportable as a liquid and adds value to the products, is now a proven technology.

