THE TECHNOLOGY:

Humans have always had the innate ability to recognize and distinguish between faces. Computers have shown the same ability only recently, and that ability depends on proper software installed on PCs with enough memory to handle the mapping process.

In the mid-1960s, scientists began working to use computers to recognize human faces. This certainly was not easy at first. Facial recognition software and hardware have come a long way since those fledgling early days and definitely involve mathematical algorithms.

ALGORITHMS:

An algorithm is defined by Merriam-Webster as follows:

“a procedure for solving a mathematical problem (as of finding the greatest common divisor) in a finite number of steps that frequently involves repetition of an operation; broadly : a step-by-step procedure for solving a problem or accomplishing some end especially by a computer.”
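The greatest common divisor mentioned in that definition makes a handy illustration. The sketch below uses Euclid's method, which is one well-known way to find it; the function name `gcd` is simply chosen for this example. Notice the finite number of steps and the single repeated operation.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor of two integers by Euclid's method."""
    a, b = abs(a), abs(b)
    while b != 0:
        # The repeated operation: replace (a, b) with (b, a mod b).
        a, b = b, a % b
    return a


print(gcd(48, 18))  # 6
```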

Some facial recognition algorithms identify facial features by extracting landmarks, or features, from an image of the subject’s face. For example, an algorithm may analyze the relative position, size, and/or shape of the eyes, nose, cheekbones, and jaw. These features are then used to search for other images with matching features. Other algorithms normalize a gallery of face images and then compress the face data, only saving the data in the image that is useful for face recognition. A probe image is then compared with the face data. One of the earliest successful systems is based on template matching techniques applied to a set of salient facial features, providing a sort of compressed face representation.

Recognition algorithms can be divided into two main approaches: geometric, which looks at distinguishing features, and photometric, which is a statistical approach that distills an image into values and compares those values with templates to eliminate variances.
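To give a feel for the photometric idea, here is a minimal sketch and not any vendor's actual method. It assumes an image is just a flat list of gray values, rescales it so that overall brightness and contrast drop out, and then correlates it with a template. The names `normalize` and `similarity` and the sample pixel values are made up for illustration.

```python
import math


def normalize(pixels):
    """Shift to zero mean and scale to unit length, removing brightness and contrast."""
    mean = sum(pixels) / len(pixels)
    centered = [p - mean for p in pixels]
    norm = math.sqrt(sum(c * c for c in centered))
    if norm == 0:
        raise ValueError("a completely flat image cannot be normalized")
    return [c / norm for c in centered]


def similarity(image, template):
    """Correlation between -1 and 1; values near 1 mean the same pattern."""
    a, b = normalize(image), normalize(template)
    return sum(x * y for x, y in zip(a, b))


# Hypothetical six-pixel "images" (assumed values, for illustration only).
template = [10, 50, 90, 50, 10, 50]
brighter = [p * 1.5 + 20 for p in template]   # same pattern under stronger light
different = [90, 10, 50, 10, 90, 50]          # a different pattern

print(similarity(brighter, template))
print(similarity(different, template))
```

The brighter copy still scores as a near-perfect match because the normalization step cancels the lighting change, while the different pattern scores much lower.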

Every face has numerous distinguishable landmarks, the different peaks and valleys that make up facial features. These landmarks are defined as nodal points. Each human face has approximately 80 nodal points. Some of those measured by the software are:

- Distance between the eyes

- Width of the nose

- Depth of the eye sockets

- The shape of the cheekbones

- The length of the jaw line

These nodal points are measured, creating a numerical code called a face-print that represents the face in the database.
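As a rough sketch of how measurements like those listed above could become a numerical code, the example below turns a handful of landmark coordinates into two ratios. Everything here is an assumption for illustration: the landmark names, the pixel coordinates, and the choice to divide by the distance between the eyes so the result does not depend on how large the face appears in the picture. Real systems use far more points.

```python
import math

# Hypothetical landmark positions in pixels (assumed values).
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_left": (42.0, 65.0),
    "nose_right": (58.0, 65.0),
    "jaw_left": (20.0, 90.0),
    "jaw_right": (80.0, 90.0),
}


def distance(p, q):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def face_print(lm):
    """Return (nose width, jaw span), each divided by the distance between the eyes."""
    eyes = distance(lm["left_eye"], lm["right_eye"])
    nose = distance(lm["nose_left"], lm["nose_right"])
    jaw = distance(lm["jaw_left"], lm["jaw_right"])
    # Dividing by the eye distance makes the code independent of image scale.
    return (nose / eyes, jaw / eyes)


print(face_print(landmarks))
```

Because the code is built from ratios, the same face photographed at twice the size produces the same numbers.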

In the past, facial recognition software has relied on a 2D image to compare or identify another 2D image from the database. To be effective and accurate, the image captured needed to be of a face that was looking almost directly at the camera, with little variance of light or facial expression from the image in the database. This created quite a problem.

In most instances the images were not taken in a controlled environment. Even the smallest changes in light or orientation could reduce the effectiveness of the system, so the captured images couldn’t be matched to any face in the database, leading to a high rate of failure. In the next section, we will look at ways to correct the problem.

A newly emerging trend in facial recognition software uses a 3D model, which claims to provide more accuracy. Capturing a real-time 3D image of a person’s facial surface, 3D facial recognition uses distinctive features of the face (where rigid tissue and bone are most apparent, such as the curves of the eye socket, nose and chin) to identify the subject. These areas are all unique and don’t change over time.

Using depth and an axis of measurement that is not affected by lighting, 3D facial recognition can even be used in darkness. It can also recognize a subject at different view angles, with the potential to recognize a face turned up to 90 degrees (a face in profile).

Using the 3D software, the system goes through a series of steps to verify the identity of an individual.

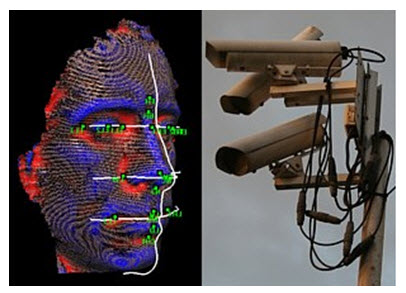

The nodal points, or recognition points, are demonstrated in the graphic above.

This is where Machine Vision, or MV, comes into the picture. Without MV, facial recognition would not be possible. An image must first be taken; that image is then digitized and processed.
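To make "digitized and processed" a little more concrete, here is a minimal sketch of one common first processing step: reducing a color image to gray levels. It assumes the digitized image arrives as rows of (red, green, blue) values from 0 to 255, and it uses the widely used ITU-R BT.601 luma weights. Both the data layout and the function name `to_grayscale` are assumptions for this example, not a description of any particular product.

```python
def to_grayscale(rgb_rows):
    """Convert rows of (r, g, b) pixels, each 0-255, into rows of 0-255 gray levels."""
    return [
        # Weighted sum of the three channels (BT.601 luma weights).
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_rows
    ]


# A tiny hypothetical 2x2 image: white, black, pure red, pure green.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 255, 0)],
]

print(to_grayscale(image))
```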

MACHINE VISION:

Facial recognition is one example of a non-industrial application for machine vision (MV). This technology is generally considered to be one facet of the biometrics technology suite. Facial recognition is playing a major role in identifying and apprehending suspected criminals as well as individuals in the process of committing a crime or unwanted activity. Casinos in Las Vegas are using facial recognition to spot “players” with shady records or even employees complicit with individuals trying to get even with “the house”. This technology incorporates visible and infrared modalities, face detection, image quality analysis, verification and identification. Many companies add cloud-based image-matching technology to their product range, providing the ability to apply theory and innovation to challenging problems in the real world. Facial recognition technology is extremely complex and depends upon many data points relative to the human face.

Facial recognition has a very specific methodology associated with it. You can see from the graphic above that points of recognition are “mapped”, highlighting very specific characteristics of the human face. Tattoos, scars, feature shapes, etc. all play into identifying an individual. A grid is constructed of “surface features”; those features are then compared with photographs located in databases or archives. In this fashion, positive identification can be accomplished. The graphic below indicates the grid developed and used for the mapping process. Cameras are also shown that receive the image and send that image to software used for comparisons.

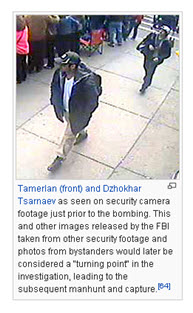
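The comparison against a database described above can be sketched in a few lines. This is only an illustration under stated assumptions: each stored face is reduced to a short tuple of numbers, the names and values in `database` are invented, and the match rule (closest stored entry, accepted only if it falls within a chosen threshold) is one simple possibility among many.

```python
import math


def identify(probe, database, threshold):
    """Return the name of the closest stored face, or None if nothing is close enough."""
    best_name, best_distance = None, float("inf")
    for name, stored in database.items():
        d = math.dist(probe, stored)  # Euclidean distance between the two codes
        if d < best_distance:
            best_name, best_distance = name, d
    return best_name if best_distance <= threshold else None


# Hypothetical stored codes (assumed values, for illustration only).
database = {
    "alice": (0.40, 1.50),
    "bob": (0.55, 1.30),
}

print(identify((0.41, 1.49), database, threshold=0.05))
print(identify((0.90, 0.90), database, threshold=0.05))
```

The threshold is the design choice that matters most: set it too loosely and strangers are "recognized"; set it too tightly and small changes in the captured image cause a known face to be missed.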

One of the most successful cases for the use of facial recognition was the 2013 bombing during the Boston Marathon. Cameras mounted at various locations around the site of the bombing captured photographs of Tamerlan and Dzhokhar Tsarnaev before their backpacks were positioned for both blasts. Even though this is not facial recognition in the truest sense of the word, there is no doubt the cameras were instrumental in identifying both criminals.

Dzhokhar Tsarnaev is now the only defendant in the court case that will determine life or death. There is no doubt, thanks to MV, concerning his guilt or innocence. He is guilty. Jurors in Boston heard harrowing testimony this week in his trial. Survivors, as well as police and first responders, gave often-disturbing accounts of their suffering and the suffering of runners and spectators as a result of the attack. Facial recognition was paramount in his identification and ultimate capture.

As always, your comments are very welcome.

FACIAL RECOGNITION

March 6, 2015
